Terraform is an infrastructure configuration language. It supports the declarative, stateful definition of abstractions ranging from compute resources and server configuration to certificates, secrets, and much more. In addition to a powerful set of CLI commands, the configuration language itself provides several abstractions that can be used to structure complex projects as required.

This article is a compact explanation of the Terraform language. You will learn about variables, expressions, functions, and lifecycle meta-arguments. The goal is to uncover aspects of the language that help you work more effectively with large sets of resources. Most of this information stems from the official documentation, but it is curated here into a compendium.

The technical context for this article is Terraform v1.4.6, but it is applicable to newer versions as well.

Variables

When you repeat the same values over and over in your Terraform projects, you should define them as variables: defined once, used everywhere. Variables are versatile data holders that can be reused in your Terraform project. Like in programming languages, variables are declared with a name, they have a type, they can be used in expressions and functions, and they even have scope.

Types

There are three simple types, defined as literals or with same-named type keywords:

- string: "t2.small", a sequence of characters enclosed in double quotes

- number: 2, a numeric literal, either a whole number or a fractional value

- bool: true, false, the two truth values written as bare keywords

In addition, several complex types exist that hold values of the same or different simple types, or nested collection types. They are defined with literals as well, or with type constructors:

- list: ["t2.small", "t2.large"], an ordered collection of same-type values enclosed in square brackets, where elements are accessed by an index starting at 0, like list[0]

- map: {"small" = "t2.small", "large" = "t2.large"}, a collection of key-value pairs enclosed in curly braces; keys are of type string, all values share one type, and member access is expressed as map["small"]

- set: toset(["t2.small", "t2.large"]), an unordered collection of unique values; it has no literal syntax, but is created by using the toset() function on a list

- object: {ami = "ami-8135831", instances = 2}, a structural, optionally nested type that declares named attributes with different types; attributes are accessed by name, as in object.instances

- tuple: ["ami-8135831", 2], a sequence of values with different types; elements are accessed by index, e.g. tuple[0]
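As a minimal sketch, the type constructors could be used in variable declarations like the following. The variable names (instance_types, workload_types, node) and the local names are hypothetical and only chosen for illustration.

```hcl
# Hypothetical variables, one per collection or structural type.
variable "instance_types" {
  type    = list(string)
  default = ["t2.small", "t2.large"]
}

variable "workload_types" {
  type = map(string)
  default = {
    small = "t2.small"
    large = "t2.large"
  }
}

variable "node" {
  type = object({
    ami       = string
    instances = number
  })
  default = {
    ami       = "ami-8135831"
    instances = 2
  }
}

locals {
  first_type   = var.instance_types[0]       # list access by index
  small_type   = var.workload_types["small"] # map access by key
  node_count   = var.node.instances          # object attribute access
  unique_types = toset(var.instance_types)   # list converted to a set
}
```

Declaring the element type, as in list(string), lets Terraform reject values of the wrong shape before any resource is planned.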

Definition

Input variables are defined explicitly with the variable keyword, followed by the variable name and a block of arguments. Here is how:

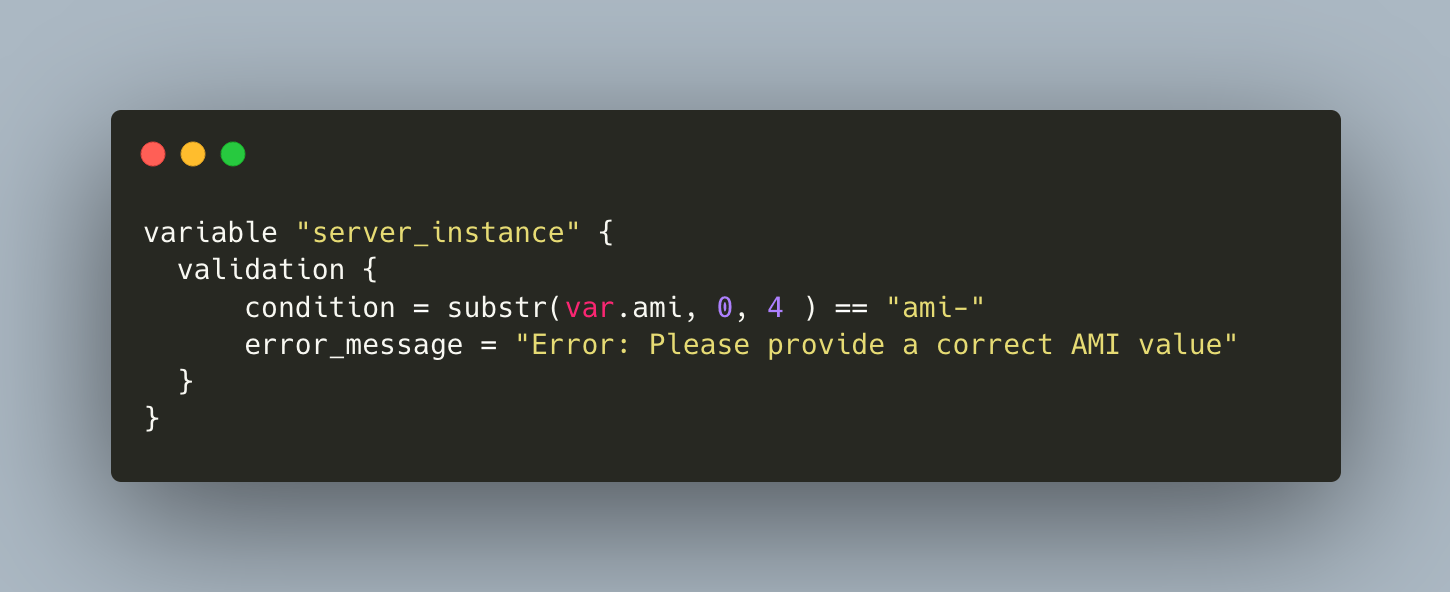

- type: Defines the variable type, either with one of the type keywords bool, number, string, or with a type constructor for list, set, map, object and tuple.

- description: A text describing the intention of the variable.

- sensitive: Prevents the variable's value from being printed in Terraform's plan and apply output (but it will still be stored as clear text in state files).

- nullable: Defines whether this variable can have a null value as well.

- validation: Defines a rule that the variable's value needs to adhere to. The validation itself is expressed as a configuration block with the two keywords condition and error_message. The condition is a Terraform expression that needs to evaluate to a boolean value, and the message is shown to the user when the condition is not met.

Here is an example that checks that an AMI ID conforms to a certain syntactic pattern:

variable "ami" {
  type = string

  validation {
    # In Terraform v1.4, the condition may only refer to the variable itself.
    condition     = substr(var.ami, 0, 4) == "ami-"
    error_message = "Please provide a correct AMI value, starting with \"ami-\"."
  }
}
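To see the arguments described above side by side, here is a hedged sketch of a complete variable block. The variable name cluster_ami and its values are assumptions for illustration, and default is an additional argument that provides a fallback value.

```hcl
# Hypothetical variable that combines the arguments discussed above.
variable "cluster_ami" {
  type        = string
  description = "AMI ID used for all cluster nodes"
  default     = "ami-0e0287b26addd9ff8" # fallback when no value is provided
  sensitive   = false                   # value may appear in plan output
  nullable    = false                   # null is rejected as a value

  validation {
    # can() turns the error that regex() raises on a mismatch into false.
    condition     = can(regex("^ami-[0-9a-f]+$", var.cluster_ami))
    error_message = "The AMI ID must start with \"ami-\" followed by hexadecimal characters."
  }
}
```

Compared to a substr() check, a regular expression validates the whole value and not only its prefix.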

Scope

Variables are scoped to the module in which they are declared and can be referenced anywhere inside it. However, the concrete value of a variable can be overwritten.

Terraform loads variable values in the following order, with later sources taking precedence over earlier ones:

- Default values

  - The default argument of variable blocks in *.tf files, used only when no other source sets the variable

- Environment variables

  - All environment variables prefixed with TF_VAR_, such as TF_VAR_ami=ami-0e0287b26addd9ff8

- Files

  - terraform.tfvars or terraform.tfvars.json (auto loaded)

  - *.auto.tfvars or *.auto.tfvars.json, processed in lexical order of their file names (auto loaded)

- Command flags

  - Passed to the terraform command as -var "ami=ami-0e0287b26addd9ff8"

  - Files in tfvars or JSON syntax, loaded by applying -var-file to the terraform command
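The following sketch illustrates how a value can be overwritten step by step. The file names follow Terraform's conventions, while the default AMI ID is a placeholder chosen for this example.

```hcl
# variables.tf: declares the variable and its fallback default.
variable "ami" {
  type    = string
  default = "ami-a1b2c3d4"
}

# terraform.tfvars (auto loaded): overrides the default when it contains
#   ami = "ami-0e0287b26addd9ff8"

# Command line: overrides the default and the tfvars file when invoked as
#   terraform apply -var "ami=ami-8135831"
```

Keeping environment-specific values in tfvars files and reserving -var for one-off overrides is one way to make the effective value easy to trace.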

Type Operators

For the simple types, different operators exist. See the operators documentation for a complete overview.

Arithmetic

Arithmetic operations of addition, subtraction, multiplication, and division are expressed with their symbols.

locals {
  instances = var.limit / 2
  buffer    = var.limit - local.instances
}

Logical

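Comparison operators such as <, >, <=, and >= can be applied to numbers, and their boolean results can be combined with the logical operators &&, ||, and !.

1 > 2 // => false
1 < 2 && 41 > 40 // => true

The equality operators == and != can be applied to all types.

"t2.small" == "t2.small" // => true
42 == 42 // => true
42 != 42 // => false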

Functions

Terraform provides several built-in functions that are helpful in complex projects with large resource inventories. The official documentation lists the following groups:

- Numeric

- String

- Collection

- Encoding

- Filesystem

- Date and Time

- Hash and Crypto

- IP Network

- Type Conversion

The following sections contain selected functions for the types that you will work with most commonly.

Numeric

max(1, 34, 42) // => 42
min(1, 34, 42) // => 1
floor(10.9) // => 10
ceil(10.9) // => 11

String

split(" ", "aws-ami instance create") // => ["aws-ami", "instance", "create"]
upper("aws-ami instance create") // => "AWS-AMI INSTANCE CREATE"
chomp("Hello Terraform\r\n") // => "Hello Terraform"
format("%s-%s:%d", "Hello", "Terraform", 42) // => "Hello-Terraform:42"
join(":", ["hello", "terra", "form"]) // => "hello:terra:form"

Collection

variable "workload-type" {
  type = map(string)
  default = {
    small = "t2.small"
    large = "t2.large"
  }
  description = "Default EC2 Instance categories"
}

// assuming var.list = ["aws-ami", "instance", "create"]
length(var.list) // => 3
index(var.list, "aws-ami") // => 0
contains(var.list, "aws-ami") // => true
keys(var.workload-type) // => ["large", "small"], keys are returned in lexicographical order

Encoding

base64encode("Hello Terraform") // => "SGVsbG8gVGVycmFmb3Jt"
jsondecode("{\"instance\": \"t2.medium\"}") // => { "instance" = "t2.medium" }
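Functions can be nested, so that the result of one becomes the argument of another. The following sketch combines functions from the groups above; the local names and the JSON content are made up for this example.

```hcl
locals {
  # Hypothetical JSON document, e.g. as it could be read from a file.
  raw_config = "{\"instance\": \"t2.medium\", \"zones\": [\"a\", \"b\"]}"

  # Decode the JSON string into a Terraform object.
  config = jsondecode(local.raw_config)

  # Combine string and collection functions => "T2.MEDIUM-2"
  label = format("%s-%d", upper(local.config.instance), length(local.config.zones))
}
```

Splitting the computation into named locals keeps each step readable and lets you inspect intermediate values with terraform console.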

Expressions

Expressions are statements in the Terraform configuration language that operate on and modify variables, resources, or modules. The following sections group related expressions into their primary goals, and they also mention to which objects they are applicable. These sections do not show all Terraform expressions - see the complete expression overview.

Relationships

implicit dependencies (modules, resources)

These do not require a specific syntax; they are realized whenever a resource/module refers to the values of another one.

For example, when you create a new AWS instance, you want to tie it to a specific network interface that you created, and reference the network interface's ID from an argument of the instance:

resource "aws_instance" "node" {
  ami           = "ami-a1b2c3d4"
  instance_type = "t2.medium"

  network_interface {
    network_interface_id = aws_network_interface.node_default.id
    device_index         = 0
  }
}

depends_on (modules, resources)

This keyword defines an explicit relationship between different resources. The dependent resource will only be created or modified when all Terraform operations on the parent resource are completed.

For example, when you create an AWS instance, you want to refer to an IAM policy that influences the instance's operation:

resource "aws_instance" "node" {
  ami           = "ami-a1b2c3d4"
  instance_type = "t2.medium"

  depends_on = [
    aws_iam_role_policy.example
  ]
}

Explicit dependencies should be used sparingly. Terraform operations such as planning and applying are slower with explicit dependencies, because the operations on all dependent resources need to be fully finished first.

Iterations

for_each (modules, resources)

When collections of items are processed, the keywords count and for_each provide some control over the looping behavior of the resource creation.

Consider the case that you have a list of server names, and each name should be used to create a dedicated instance. Basically, you invoke the following:

resource "aws_instance" "node" {
  for_each = toset(var.aws_instances)

  ami           = var.node_ami
  instance_type = var.node_instance_type

  tags = {
    name = each.key
  }
}

In this example, the for_each declaration consumes a set of aws_instance names to create multiple resources. Inside the resource definition, you can use each.key to refer to the name of a particular item. The for_each argument accepts only a set of strings or a map. If you iterate over a map, each.key is the map key and each.value is the corresponding value, whose attributes you can then access.
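A hedged sketch of iterating over a map of objects could look like this. The variables nodes and node_ami as well as their values are assumptions for illustration.

```hcl
# Hypothetical inventory: the map key is the node name, the value its settings.
variable "nodes" {
  type = map(object({
    instance_type = string
  }))
  default = {
    controller = { instance_type = "t2.medium" }
    worker     = { instance_type = "t2.large" }
  }
}

variable "node_ami" {
  type = string
}

resource "aws_instance" "node" {
  for_each = var.nodes

  ami           = var.node_ami
  instance_type = each.value.instance_type # attribute of the map value

  tags = {
    Name = each.key # "controller" or "worker"
  }
}
```

The created instances are then addressed by their key, for example aws_instance.node["worker"], so removing one entry from the map does not affect the other instances.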

count (modules, resources)

Instead of consuming a list of items, you can turn a resource definition into a list-like object by using the count meta-argument.

Basically, you define the number of items that will be created. Like the former example, the same aws_instance creation can be achieved with the following declaration:

resource "aws_instance" "node" {
  count = 3

  ami           = var.node_ami
  instance_type = var.node_instance_type

  tags = {
    name = format("node-%d", count.index)
  }
}

The count.index variable refers to the current item number, starting at 0.
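Resources created with count form a list, so individual instances are referenced by index. The following sketch shows this with two outputs; the variable node_count, the output names, and the AMI ID are placeholders.

```hcl
variable "node_count" {
  type    = number
  default = 3
}

resource "aws_instance" "node" {
  count = var.node_count

  ami           = "ami-a1b2c3d4"
  instance_type = "t2.medium"

  tags = {
    Name = format("node-%d", count.index) # node-0, node-1, node-2
  }
}

# A single instance is addressed with an index.
output "first_node_id" {
  value = aws_instance.node[0].id
}

# The splat expression collects one attribute from all instances.
output "all_node_ids" {
  value = aws_instance.node[*].id
}
```

Because instances are identified by position, lowering the count removes instances from the end of the list. When items have stable names, for_each is usually the safer choice.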

Expression Reusability

locals (resources, data, provider, provisioner)

Locals are an interesting feature that allows you to form complex expressions and refer to them via a name. This comes as close as possible to a function definition, but it cannot be called with arguments. The expression itself can refer to variables and even to resources, making it dynamic. Similar to variables, locals also have a scope: they are defined in top-level locals blocks and can be referenced with the local. prefix anywhere in the same module.

Here is an example that defines a set of tags with static values, and a second locals block that uses defined variables.

resource "aws_instance" "master" {
  // ...
  tags = local.common_tags
}

locals {
  common_tags = {
    cluster     = "k8s-central"
    data_center = "aws"
  }
}

locals {
  name_suffix = format("%s-%s", var.project, var.environment)
}
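Locals can also build on each other. As a sketch under the assumption that the variables project and environment are declared as shown, the static tags could be extended with a computed name by using the merge() function:

```hcl
variable "project" {
  type    = string
  default = "k8s"
}

variable "environment" {
  type    = string
  default = "staging"
}

locals {
  name_suffix = format("%s-%s", var.project, var.environment) # "k8s-staging"

  common_tags = {
    cluster     = "k8s-central"
    data_center = "aws"
  }

  # merge() combines maps; later arguments win on duplicate keys.
  node_tags = merge(local.common_tags, {
    Name = "node-${local.name_suffix}"
  })
}
```

A resource could then use tags = local.node_tags, so a change to the shared tags needs to be made in one place only.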

dynamic (resources, data, provider, provisioner)

A dynamic block generates repeated nested blocks inside a resource, data, provider, or provisioner block. Like locals, it helps to avoid repeating complex expressions, but it can only be used for iteration and inside other blocks.

Consider the following example in which a security group for AWS instances is created, and the dynamic block defines an iteration for nesting firewall rules inside it:

variable "ingress_ports" {
  type    = list(number)
  default = [22, 443]
}

resource "aws_security_group" "master" {
  // ...
  dynamic "ingress" {
    iterator = port
    for_each = var.ingress_ports
    content {
      from_port   = port.value
      to_port     = port.value
      protocol    = "tcp"
      cidr_blocks = ["0.0.0.0/0"]
    }
  }
}

Lifecycle

lifecycle (resources)

This expression enables fine-grained control over resource creation. It is itself a block definition inside a resource that defines the following arguments:

- create_before_destroy: Some resources cannot be modified after creation, e.g. when the provider API is too limited. In this case, all resource modifications lead to their destruction and recreation, in exactly this order. But when create_before_destroy is set to true, the new resource will be created first.

- prevent_destroy: A boolean argument. When set to true, any terraform plan or terraform apply command that would destroy this particular resource will not complete, but show an error.

- ignore_changes: Once a resource is created, it may be changed by processes outside of Terraform, e.g. if the cloud provider makes configuration changes. With this argument, a list of resource attributes can be specified that Terraform ignores when calculating changes. Here is an example:

ignore_changes = [ tags, region ]

- replace_triggered_by: This argument defines a replacement relationship between managed resources. It is a list of references to other resources or their attributes, and if one of those is changed, then the defining resource will be replaced.

replace_triggered_by = [ aws_ecs_service.svc.id ]

- precondition and postcondition: These blocks enable the creation of complex rules that are validated before or after the resource is created. Each consists of a condition, a Terraform expression that needs to evaluate to a boolean value, and an error_message that will be printed if the condition check fails. When a precondition fails, the resource will not be created. If the postcondition fails, then any other dependent resource will not be created.

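resource "aws_instance" "worker_node" {
  # ...
  lifecycle {
    precondition {
      # self is only available in postconditions, so this check reads
      # an assumed variable var.node_tags instead
      condition     = var.node_tags["CloudEnvironment"] == "AWS"
      error_message = "Error: Can only create worker node in AWS."
    }
  }
}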
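The lifecycle arguments can be combined in a single block. The following is a minimal sketch with placeholder values. It uses a postcondition, in which self refers to the resource after it has been created.

```hcl
resource "aws_instance" "worker_node" {
  ami           = "ami-a1b2c3d4"
  instance_type = "t2.medium"

  lifecycle {
    create_before_destroy = true   # create the replacement first
    prevent_destroy       = false  # set to true to block destruction
    ignore_changes        = [tags] # tag changes made elsewhere are ignored

    postcondition {
      # Checked after creation; a failure stops dependent resources.
      condition     = self.public_dns != ""
      error_message = "The worker node must have a public DNS name."
    }
  }
}
```

Note that prevent_destroy only protects the resource as long as its block stays in the configuration.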

Provisioners

provisioner (resources)

Provisioners are a versatile tool to define programmable behavior on resources. When a resource is created or destroyed, you can run arbitrary bash scripts. As stated in the Terraform documentation, the primary use case for this is to extract information from a resource and persist it in other parts of a system. However, you can use the scripts to implement any behavior.

A provisioner block is declared inside a resource and is specific to that resource.

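There are three built-in provisioner types: file for copying files to a remote machine, local-exec for local execution, and remote-exec for remote execution. In addition, null_resource is a special resource type that does not manage any infrastructure. Provisioners declared inside it run like in a normal resource, but you need to define when they will run with its triggers argument.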

A provisioner configuration block has these attributes:

- when: By default, provisioners run only once, during creation. However, you can also use when = destroy to define a destroy-time behavior.

- on_failure: Defines whether to continue resource creation or to fail and stop the creation of other resources.

- connection: A block itself, required for the type remote-exec, with several specific attributes. See below.

Here is an example for local execution of a simple script: Grab an AWS instance’s IP and store it in a file.

resource "aws_instance" "webserver" {
  # ...
  provisioner "local-exec" {
    # runs at creation time by default; the only valid when value is destroy
    command = "echo ${self.public_ip} >> /tmp/ip.txt"
  }
}
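The when and on_failure arguments, together with the null_resource type, can be sketched as follows. This assumes that the hashicorp/null provider is available; the resource name inventory and the AMI ID are placeholders.

```hcl
resource "aws_instance" "webserver" {
  ami           = "ami-a1b2c3d4"
  instance_type = "t2.medium"
}

resource "null_resource" "inventory" {
  # Replace this resource, and thereby re-run its creation-time
  # provisioner, whenever the instance ID changes.
  triggers = {
    instance_id = aws_instance.webserver.id
  }

  provisioner "local-exec" {
    command    = "echo ${aws_instance.webserver.public_ip} >> /tmp/ip.txt"
    on_failure = continue # a failing command does not fail the apply
  }

  provisioner "local-exec" {
    when    = destroy # runs before this resource is destroyed
    command = "rm -f /tmp/ip.txt"
  }
}
```

Separating the script from the instance means that a change to the script logic does not force a replacement of the instance itself.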

connection (resources)

This configuration block defines how the machine running Terraform connects to a remote machine that the resource represents. It consists of several keywords and can be customized to use a proxy or even a bastion server. The most frequently used are briefly explained here, but also take a look at the connection arguments documentation.

- type: The connection type, either ssh for an SSH connection (the default) or winrm for a WinRM connection

- user: The name of the user for whom the connection is established

- host: The hostname or IP address to which the connection is established

- port: The port to use

- password: Authentication via password

- private_key: Authentication with the contents of a private key, for example loaded from a key file with the file() function

To pass files or scripts to a machine without a connection at all, many providers offer dedicated resource arguments instead, such as user_data for some resources of AWS or Alibaba Cloud, metadata for Google Cloud and Oracle Cloud, or a virtual CD-ROM attached to a vsphere_virtual_machine.

A connection configuration can either be embedded inside a resource or inside the provisioner block itself. In the first case, it applies to all provisioners of that resource. In the second case, it applies to that one provisioner only.

Inside the provisioner, you can use the special keyword self to refer to the parent resource in which it is defined.

Here is an example for executing a bash script on a remote resource. Notice that you also need to define a connection block.

resource "aws_instance" "node" {
  //...
  connection {
    type        = "ssh"
    user        = "root"
    private_key = tls_private_key.generic-ssh-key.private_key_openssh
    host        = self.public_ip
  }

  provisioner "remote-exec" {
    scripts = [
      "./bin/01_install.sh",
      "./bin/02_kubeadm_init.sh"
    ]
  }

  provisioner "local-exec" {
    command = <<-EOF
      rm -rvf ./bin/03_kubeadm_join.sh
      echo "echo 1 > /proc/sys/net/ipv4/ip_forward" > ./bin/03_kubeadm_join.sh
      ssh root@${self.public_ip} -o StrictHostKeyChecking=no -i .ssh/id_rsa.key "kubeadm token create --print-join-command" >> ./bin/03_kubeadm_join.sh
    EOF
  }
}
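For comparison, here is a hedged sketch of a connection block that is nested inside a single provisioner. The user name, the key path, and the inline command are assumptions and depend on the image that you use.

```hcl
resource "aws_instance" "node" {
  ami           = "ami-a1b2c3d4"
  instance_type = "t2.medium"

  provisioner "remote-exec" {
    # inline takes a list of commands instead of script files.
    inline = [
      "sudo apt-get update"
    ]

    # This connection is used by this provisioner only.
    connection {
      type = "ssh"
      user = "ubuntu"
      host = self.public_ip
      # private_key expects the key contents, so the file is read here.
      private_key = file(pathexpand("~/.ssh/id_rsa"))
    }
  }
}
```

A nested connection is useful when one provisioner of a resource needs different credentials than the others.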

Conclusion

This article uncovered essential aspects of the Terraform configuration language. It revealed an astonishing complexity: variables with types and values, numeric, boolean and string functions, and several expressions exist. But when should you use these features? For me, that's a question of complexity scaling, driven by your Terraform project. When you want to manage extensive inventories of more than 100 items, elaborate data management for your inventory is crucial, and functions and expressions become a necessity to avoid doing things manually. Beyond that, I suggest you take this article as the first step to deeply learn the Terraform language and to discover even more features that are helpful for your project.