Terraform is an infrastructure provisioning tool. At its core, you define a declarative manifest of resources that represent concrete computing instances, system configuration such as networks and firewalls, and other infrastructure configuration. Terraform adheres to the concept of immutable infrastructure: resources should match the state expressed in the manifests, and any difference between the concrete objects and the manifests is resolved by changing the concrete object or recreating it.

As Terraform projects grow, so does the complexity of managing reusable manifests and proper configuration. Two concepts help with this: workspaces and modules. In a nutshell, workspaces encapsulate configuration and state for the same manifests, and modules encapsulate parameterized manifests that are grouped together to create connected infrastructure objects. This article explains workspaces and modules in detail, and will also help you understand when, and when not, to use these concepts.

The technical context for this article is Terraform v1.4.6, but it is applicable to newer versions as well.

Workspaces

A workspace is similar to a namespace: it has a dedicated name and an encapsulated state. In any Terraform project, the workspace that you start with is referred to as the default workspace. The Terraform CLI then offers the following operations to manipulate workspaces:

workspace new: Creates a new workspace

workspace show: Shows the currently active workspace

workspace list: Shows all available workspaces

workspace select: Switches to another workspace

workspace delete: Deletes a workspace

Workspaces should be used to provide multiple instances of a single configuration. This means that you reuse the very same manifests to create the very same resources, but provide a different configuration for these resources, for example a different image for a cloud server.

Example

To really understand how workspaces can be used to configure your infrastructure, we will walk through an example. You want to deploy cloud VMs to create a Kubernetes cluster, and you have two separate environments to configure: staging and production. In staging, you use less powerful VMs, and the names of the nodes should reflect which environment they belong to. The cloud of my choice is Hetzner, which I covered in more detail in an earlier article.

Let’s create the workspaces first and select staging.

$> terraform workspace new staging

Created and switched to workspace "staging"!

You're now on a new, empty workspace. Workspaces isolate their state,

so if you run "terraform plan" Terraform will not see any existing state

for this configuration.

$> terraform workspace new production

Created and switched to workspace "production"!

$> terraform workspace select staging

Switched to workspace "staging".

The configuration of all servers will be defined in a variable of type map. The resource then consumes this variable through the for_each meta-argument.

variable "cloud_server_config" {

default = {

"controller" = {

server_type = "cpx11"

image = "debian-11"

},

"worker1" = {

server_type = "cx21"

image = "debian-11"

},

"worker2" = {

server_type = "cx21"

image = "debian-11"

}

}

}

resource "hcloud_server" "controller" {

for_each = var.cloud_server_config

name = each.key

server_type = each.value.server_type

image = each.value.image

location = "nbg1"

ssh_keys = [hcloud_ssh_key.primary-ssh-key.name]

}
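
The variable above has no type constraint, so Terraform infers its type from the default value. As a minimal sketch, assuming every entry always carries exactly the two attributes shown, the same variable could declare its shape explicitly so that a misspelled or missing attribute is reported at plan time:

```hcl
# Sketch only: the same variable as above, with an explicit type constraint.
# Assumption: each server entry has exactly a server_type and an image.
variable "cloud_server_config" {
  type = map(object({
    server_type = string
    image       = string
  }))

  default = {
    "controller" = {
      server_type = "cpx11"
      image       = "debian-11"
    },
    "worker1" = {
      server_type = "cx21"
      image       = "debian-11"
    },
    "worker2" = {
      server_type = "cx21"
      image       = "debian-11"
    }
  }
}
```

With for_each, each.key is the map key (the server name) and each.value is the object behind it, which is why the resource can read each.value.server_type and each.value.image.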

This infrastructure is ready to be provisioned:

$> terraform plan

Terraform will perform the following actions:

# hcloud_server.controller["controller"] will be created

+ resource "hcloud_server" "controller" {

//...

}

# hcloud_server.controller["worker1"] will be created

+ resource "hcloud_server" "controller" {

//...

}

# hcloud_server.controller["worker2"] will be created

+ resource "hcloud_server" "controller" {

//...

}

# hcloud_ssh_key.primary-ssh-key will be created

+ resource "hcloud_ssh_key" "primary-ssh-key" {

//...

}

# tls_private_key.generic-ssh-key will be created

This configuration does not distinguish the workspace at all: Provisioning for staging and for production environments would be the very same. Let’s change this.

First, we will create a meta config variable that holds the configuration items with values specific to the workspace names.

variable "cloud_server_meta_config" {

default = {

"server_type" = {

"controller" = {

staging = "cx21"

production = "cx31"

}

"worker" = {

staging = "cpx21"

production = "cpx31"

}

},

"image" = {

staging = "debian-11"

production = "ubuntu-20.04"

}

}

}

With this, the previous cloud_server_config variable does not hold any more information except the server names. Therefore, we change it to a set of strings.

variable "cloud_server_config" {

type = set(string)

default = ["controller", "worker1", "worker2"]

}

Finally, the servers now access the meta config variable to read the values for the specific environment. We use the lookup function to query the meta config, passing in terraform.workspace, which holds the name of the currently selected workspace.

resource "hcloud_server" "controller" {

for_each = var.cloud_server_config

name = format("%s-%s", each.key, terraform.workspace)

server_type = each.key == "controller" ? lookup(var.cloud_server_meta_config.server_type.controller, terraform.workspace) : lookup(var.cloud_server_meta_config.server_type.worker, terraform.workspace)

image = lookup(var.cloud_server_meta_config.image, terraform.workspace)

location = "nbg1"

}
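
One caveat with the lookups above: the meta config only has entries for staging and production, so a plan in any other workspace (including default) fails because the key is missing. A minimal sketch of one way to handle this, assuming you want unlisted workspaces to fall back to the staging values, uses the optional third argument of lookup as a default. The local names are illustrative and not part of the original project:

```hcl
# Sketch only: resolve workspace-specific values once, with a fallback.
# Assumption: workspaces without their own entry reuse the staging values.
locals {
  image = lookup(var.cloud_server_meta_config.image, terraform.workspace, "debian-11")

  controller_server_type = lookup(
    var.cloud_server_meta_config.server_type.controller,
    terraform.workspace,
    "cx21"
  )

  worker_server_type = lookup(
    var.cloud_server_meta_config.server_type.worker,
    terraform.workspace,
    "cpx21"
  )
}
```

The resource could then reference local.image and pick between local.controller_server_type and local.worker_server_type, which also shortens the long conditional expression.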

Let’s see which infrastructure objects would be created in the staging env:

$> terraform workspace select staging

$> terraform plan

Terraform will perform the following actions:

# hcloud_server.controller["controller"] will be created

+ resource "hcloud_server" "controller" {

+ image = "debian-11"

+ name = "controller-staging"

+ server_type = "cx21"

//...

}

# hcloud_server.controller["worker1"] will be created

+ resource "hcloud_server" "controller" {

+ image = "debian-11"

+ name = "worker1-staging"

+ server_type = "cpx21"

//...

}

# hcloud_server.controller["worker2"] will be created

+ resource "hcloud_server" "controller" {

+ image = "debian-11"

+ name = "worker2-staging"

+ server_type = "cpx21"

//...

}

This looks good. Now let’s check which resources would be created in the production env.

$> terraform workspace select production

$> terraform plan

Terraform will perform the following actions:

# hcloud_server.controller["controller"] will be created

+ resource "hcloud_server" "controller" {

+ image = "ubuntu-20.04"

+ name = "controller-production"

+ server_type = "cx31"

//...

}

# hcloud_server.controller["worker1"] will be created

+ resource "hcloud_server" "controller" {

+ image = "ubuntu-20.04"

+ name = "worker1-production"

+ server_type = "cpx31"

//...

}

# hcloud_server.controller["worker2"] will be created

+ resource "hcloud_server" "controller" {

+ image = "ubuntu-20.04"

+ name = "worker2-production"

+ server_type = "cpx31"

//...

}

Plan: 3 to add, 0 to change, 0 to destroy.

As you see, the nodes are named with the suffix -production, the Ubuntu image is used, and different server types are defined.

This is how workspaces can be used. As for state, if you use local state, the state directory contains the workspaces as subdirectories, as shown here:

terraform.tfstate.d

├── production

│ ├── terraform.tfstate

│ └── terraform.tfstate.backup

└── staging

├── terraform.tfstate

└── terraform.tfstate.backup

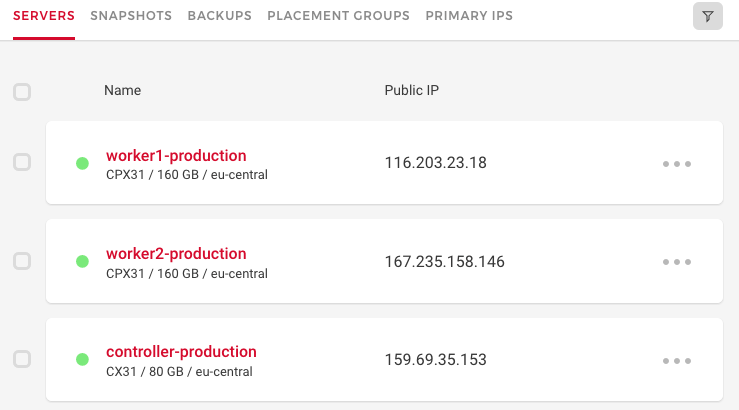

And the servers are available!

Modules

Terraform modules provide coherent sets of parameterized resource descriptions. They are used to group related resource definitions to define architectural abstractions in your project, for example defining a control plane node with dedicated servers, VPC and databases.

There are three kinds of modules. The root module consists of all resource definitions inside your main directory. Child modules are called from another module with the module keyword. The third kind, nested modules, should be avoided because declaration and maintenance are harder: a flat structure with only the root module and its child modules is better.

Modules can be defined locally or used from a private or public registry. Since they are self-contained, they need to explicitly declare their provider requirements, their variables, and their resources.
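
As a rough sketch of the difference between the two sources: a local module is referenced by a relative path, while a registry module is referenced by a registry address and can pin a version. The local path matches the one used in the example that follows; the registry address "example-org/cluster/hcloud" is hypothetical and only illustrates the address format.

```hcl
# Local module: the source is a relative path inside the project.
module "controller" {
  source = "./modules/controller"
  # ...input variables...
}

# Registry module: the source is a registry address plus a version constraint.
# Assumption: "example-org/cluster/hcloud" is a made-up address for illustration.
module "cluster" {
  source  = "example-org/cluster/hcloud"
  version = "~> 1.0"
  # ...input variables...
}
```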

Example

As before, to better understand modules, let’s use them in the very same example project. We will create two separate modules for the controller and the worker, and define them in such a way that all required variables are passed in from the root module.

First, let’s create the modules directory and the module files. Create the following directories and files:

├── modules

│ ├── controller

│ │ ├── controller.tf

│ │ ├── provider.tf

│ │ └── variables.tf

│ └── worker

│ ├── provider.tf

│ ├── variables.tf

│ └── worker.tf

In the provider.tf, the Hetzner Cloud provider needs to be configured:

terraform {

required_providers {

hcloud = {

source = "hetznercloud/hcloud"

version = ">= 1.36.0"

}

}

}

provider "hcloud" {

token = var.hcloud_token

}

The controller and the worker definitions are structurally similar, so let’s just see how the controller is defined. Its resource definition is the same as before, but all inputs are variables:

resource "hcloud_server" "controller" {

for_each = toset(var.instances)

name = format("%s-%s", each.key, var.environment)

server_type = var.server_type

image = var.image

location = var.location

}

For each variable that you want to pass to a child module, the child needs to explicitly define this variable. Otherwise, you will get an error message like Error: Unsupported argument. An argument named "server_type" is not expected here.

So, the variables.tf looks like this:

variable "server_type" {

type = string

}

variable "image" {

type = string

}

variable "instances" {

type = list(string)

}

variable "location" {

type = string

}

variable "environment" {

type = string

}

variable "primary_ssh_key_name" {

type = string

}

variable "hcloud_token" {

type = string

}
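
Because a child module only sees what its variables let through, the variable declarations are also a natural place to reject bad input early. The following is a minimal sketch, assuming staging and production are the only environments you want to allow; it would take the place of the plain environment declaration above:

```hcl
# Sketch only: the environment variable with a description and a validation rule.
# Assumption: only "staging" and "production" are valid environments.
variable "environment" {
  type        = string
  description = "Name of the environment, used as a suffix for server names."

  validation {
    condition     = contains(["staging", "production"], var.environment)
    error_message = "The environment must be either \"staging\" or \"production\"."
  }
}
```

With this in place, passing any other value from the root module makes terraform plan fail with the given error message instead of creating servers with an unexpected name suffix.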

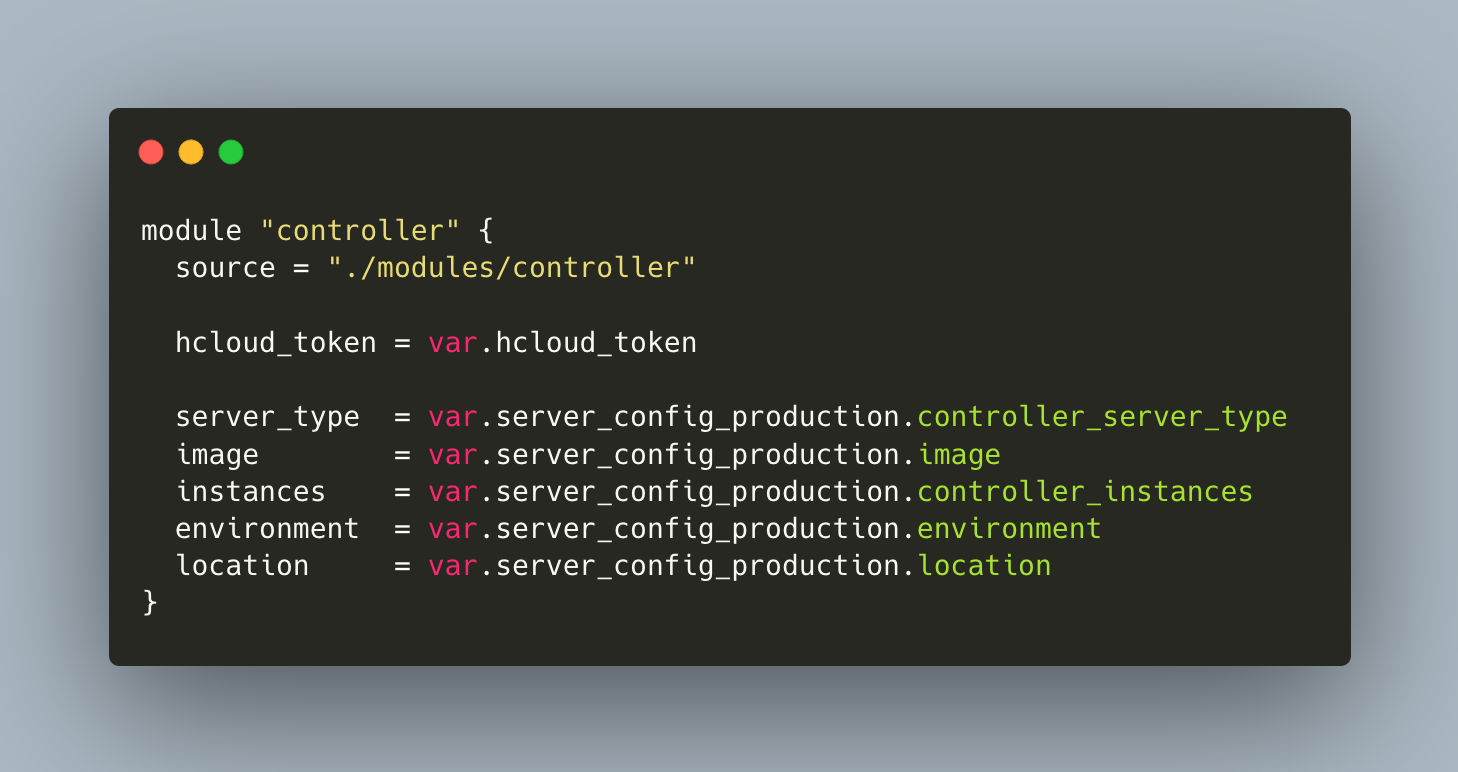

The module definition is complete. Now we need to use it from the root module. In the main.tf file, define this:

variable "server_config_production" {

default = {

environment = "production"

location = "fsn1"

controller_server_type = "cx31"

worker_server_type = "cpx31"

image = "ubuntu-20.04"

controller_instances = ["controller"]

worker_instances = ["worker1", "worker2", "worker3", "worker4"]

}

}

module "controller" {

source = "./modules/controller"

hcloud_token = var.hcloud_token

server_type = var.server_config_production.controller_server_type

image = var.server_config_production.image

instances = var.server_config_production.controller_instances

environment = var.server_config_production.environment

location = var.server_config_production.location

}
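
The worker module is called in the same way. The article does not show this block, so the following is a sketch, assuming that modules/worker declares the same input variables as the controller module and reads the worker-specific keys of server_config_production:

```hcl
# Sketch only: calling the worker module from the root module.
# Assumption: modules/worker declares the same variables as modules/controller.
module "worker" {
  source = "./modules/worker"

  hcloud_token = var.hcloud_token
  server_type  = var.server_config_production.worker_server_type
  image        = var.server_config_production.image
  instances    = var.server_config_production.worker_instances
  environment  = var.server_config_production.environment
  location     = var.server_config_production.location
}
```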

Once terraform validate reports no errors, run terraform init again so that Terraform installs the modules.

$> terraform init

Initializing modules...

- controller in modules/controller

- worker in modules/worker

Initializing the backend...

Initializing provider plugins...

- Finding latest version of hashicorp/hcloud...

- Reusing previous version of hashicorp/tls from the dependency lock file

- Reusing previous version of hetznercloud/hcloud from the dependency lock file

- Using previously-installed hashicorp/tls v4.0.4

- Using previously-installed hetznercloud/hcloud v1.36.0

That’s it. Now we can plan and apply the changes:

$> terraform plan

Terraform used the selected providers to generate the following execution plan. Resource

actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

+ resource "hcloud_server" "controller"

+ resource "hcloud_server" "worker"

#...

Plan: 5 to add, 0 to change, 0 to destroy.

$> terraform apply

module.worker.hcloud_server.worker["worker4"]: Creating...

module.worker.hcloud_server.worker["worker1"]: Creating...

module.worker.hcloud_server.worker["worker2"]: Creating...

module.worker.hcloud_server.worker["worker3"]: Creating...

module.worker.hcloud_server.worker["worker2"]: Creation complete after 8s [id=25524452]

module.worker.hcloud_server.worker["worker4"]: Creation complete after 8s [id=25524451]

module.worker.hcloud_server.worker["worker1"]: Creation complete after 8s [id=25524448]

module.worker.hcloud_server.worker["worker3"]: Creation complete after 8s [id=25524449]

module.controller.hcloud_server.controller["controller"]: Creation complete after 9s [id=25524450]

Conclusion

In this article, you learned about Terraform workspaces and modules, two methods that help you work with more complex projects. Following an example that creates cloud servers in the Hetzner cloud, you saw both methods applied. In essence, workspaces provide namespaces for resource creation with dedicated states. Use them when you want to manage basically the same resources, but with different configuration, such as in a staging and a production environment. Modules are self-contained, grouped resource definitions that represent parts of a complex infrastructure. Use them to separate your projects into logically related parts, such as a module for creating the control plane environment, one for its nodes, and others. To better understand how workspaces and modules are used, this article also showed an example of separating staging and production resources.