Terraform is an infrastructure provisioning tool that creates concrete infrastructure objects from a declarative resource description. In my last articles, you learned about Terraform projects, i.e., how to configure providers and resources, and the recommended file structure. You also learned about the Terraform workflow, which consists of a one-time initialization followed by iterative phases of writing, planning, and applying.

This article complements that introduction with an overview of additional Terraform commands. To show for which activity or workflow step each command is used, I grouped them as follows: workflow (and sub-phases), project maintenance (updates, validation, workspaces), and miscellaneous commands. To show the commands in use, a Terraform project that creates an S3 bucket serves as the context.

The technical context for this article is Terraform v1.4.6, but it is applicable to newer versions as well.

Running Example: S3 Bucket on the AWS Cloud

The context of all Terraform commands shown in this article is a project that creates an AWS S3 bucket and uploads an HTML file to it. The project has two workspaces, staging and production.

The overall file structure is this:

aws_s3_tutorial
├── files
│   └── index.html
├── main.tf
├── terraform.tf
└── terraform.tfstate.d
    ├── production
    └── staging

The default provider configuration is this:

// terraform.tf
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 4.33.0"
    }
  }
}

provider "random" {}

provider "aws" {
  region = "eu-central-1"
}

provider "aws" {
  region = "us-east-1"
  alias  = "us"
}
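The second aws provider block defines an alias, but none of the resources in this article use it. As a minimal sketch, a resource would select the aliased configuration with the provider meta-argument. The resource name us_container and the bucket name below are hypothetical and not part of the running example:

```hcl
# Hypothetical resource, not part of the running example.
# The provider meta-argument selects the aliased "us" configuration,
# so this bucket would be created in us-east-1 instead of eu-central-1.
resource "aws_s3_bucket" "us_container" {
  provider = aws.us
  bucket   = "example-us-html-container" # hypothetical bucket name
}
```

Resources without a provider argument keep using the default configuration, here eu-central-1.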

And the resource definition is:

// main.tf
resource "aws_s3_bucket" "html_container" {
  bucket = "${random_pet.bucket_prefix.id}-html-container"
}

resource "random_pet" "bucket_prefix" {}

resource "aws_s3_object" "document" {
  bucket = aws_s3_bucket.html_container.id
  key    = "html-container"
  source = "files/index.html"
}

Group 1: Workflow Commands

1.1 Project Initialization

At the beginning of each project, you run terraform init, which reads all configuration files to determine and download the required providers.

For the running example, which uses the AWS and random providers, the output is this:

terraform init

Initializing the backend...

Initializing provider plugins...

- Reusing previous version of hashicorp/aws from the dependency lock file

- Reusing previous version of hashicorp/random from the dependency lock file

- Using previously-installed hashicorp/aws v4.37.0

- Using previously-installed hashicorp/random v3.4.3

Terraform has been successfully initialized!

1.2 Planning

plan

This is the main operation in this phase. When called without any parameters, it creates a so-called speculative plan, which is a point-in-time representation of the changes that is printed but not saved.

terraform plan

Terraform used the selected providers to generate the following execution

plan. Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# aws_s3_bucket.html_container will be created

+ resource "aws_s3_bucket" "html_container" {

+ acceleration_status = (known after apply)

+ acl = (known after apply)

+ arn = (known after apply)

#...

# aws_s3_object.document will be created

+ resource "aws_s3_object" "document" {

+ acl = "private"

+ bucket = (known after apply)

+ bucket_key_enabled = (known after apply)

#...

# random_pet.bucket_prefix will be created

+ resource "random_pet" "bucket_prefix" {

+ id = (known after apply)

+ length = 2

+ separator = "-"

}

Plan: 3 to add, 0 to change, 0 to destroy.

You can also use plan -out=FILE to persist the concrete changes in a binary file, which the apply command can consume later.

refresh

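This command updates the state data according to the actual state of the remote resources. In recent Terraform versions it is deprecated in favor of terraform apply -refresh-only, which shows the detected changes and asks for confirmation before writing them to the state.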

terraform refresh

random_pet.bucket_prefix: Refreshing state... [id=happy-antelope]

aws_s3_bucket.html_container: Refreshing state... [id=happy-antelope-html-container]

aws_s3_object.document: Refreshing state... [id=html-container]

1.3 Creating and Destroying Resources

import

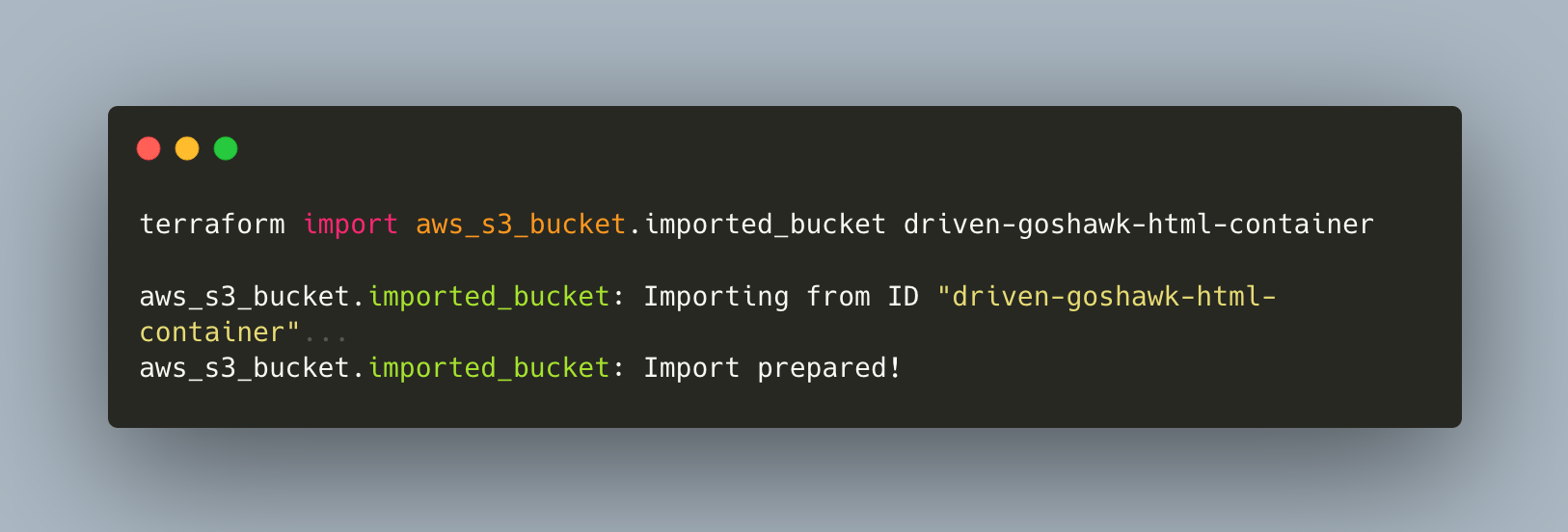

This command brings existing infrastructure objects under Terraform's management. It associates a resource defined in your configuration with an infrastructure object that already exists. The concrete import statement depends on the provider and the particular resource.

Most commonly, any infrastructure object of a provider has an internal ID, and with this, you can import the resource. In other cases, it might be the name of the entity, such as a particular domain name or a firewall rule name.

To import, you first need to define the resource in your Terraform project, and then associate a remote resource with it. Terraform kindly reminds you if you forgot this:

terraform import aws_s3_bucket.imported_bucket driven-goshawk-html-container

Error: resource address "aws_s3_bucket.imported_bucket" does not exist in the configuration.

Before importing this resource, please create its configuration in the root module. For example:

resource "aws_s3_bucket" "imported_bucket" {

# (resource arguments)

}

When the resource is defined, an import looks like this:

terraform import aws_s3_bucket.imported_bucket driven-goshawk-html-container

aws_s3_bucket.imported_bucket: Importing from ID "driven-goshawk-html-container"...

aws_s3_bucket.imported_bucket: Import prepared!

Prepared aws_s3_bucket for import

aws_s3_bucket.imported_bucket: Refreshing state... [id=driven-goshawk-html-container]

Import successful!

The resources that were imported are shown above. These resources are now in your Terraform state and will henceforth be managed by Terraform.
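Newer Terraform versions (1.5 and later, so not the v1.4.6 used in this article) also support declaring an import in the configuration itself. The following sketch assumes such a version and reuses the resource address and bucket name from the example above. The import is then carried out as part of a regular plan and apply run:

```hcl
# Assumption: Terraform 1.5 or newer, which is newer than this article's v1.4.6.
import {
  to = aws_s3_bucket.imported_bucket
  id = "driven-goshawk-html-container"
}

# The target resource is declared by hand here;
# its bucket argument mirrors the name of the imported object.
resource "aws_s3_bucket" "imported_bucket" {
  bucket = "driven-goshawk-html-container"
}
```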

apply

This command creates, updates, or deletes remote resources so that they match the configuration. You can call this command with or without a plan file.

Without a plan file, it will call plan again, show the speculative plan, and ask you to apply it. In this case, it’s important to use a backend that provides state-locking, or else another process might change the state beforehand, resulting in errors.

terraform apply

aws_s3_bucket.html_container will be created

aws_s3_object.document will be created

random_pet.bucket_prefix will be created

Plan: 3 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value:
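Whether state locking is available depends on the configured backend. As one possible setup for Terraform v1.4.x, the S3 backend can lock the state through a DynamoDB table. This is only a sketch: the bucket and table names are hypothetical, both must already exist, and the table needs a string partition key named LockID.

```hcl
terraform {
  backend "s3" {
    bucket         = "example-terraform-state" # hypothetical, must already exist
    key            = "aws_s3_tutorial/terraform.tfstate"
    region         = "eu-central-1"
    dynamodb_table = "example-terraform-locks" # hypothetical, partition key "LockID" (string)
    encrypt        = true
  }
}
```

The backend block belongs inside the terraform block, for example in terraform.tf. After adding or changing it, terraform init needs to be run again.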

Alternatively, you supply the plan file. This is especially recommended when working in teams, because the plan is based on one specific version of the state. If another team member modifies the state between the time you create the plan and the time you apply it, you will see this error message:

terraform apply html_container_plan.bin

╷

│ Error: Saved plan is stale

│

│ The given plan file can no longer be applied because the state was changed

│ by another operation after the plan was created.

If the plan file can be applied:

terraform apply html_container_plan.bin

random_pet.bucket_prefix: Creating...

random_pet.bucket_prefix: Creation complete after 0s [id=happy-antelope]

aws_s3_bucket.html_container: Creating...

aws_s3_bucket.html_container: Creation complete after 2s [id=happy-antelope-html-container]

aws_s3_object.document: Creating...

aws_s3_object.document: Creation complete after 0s [id=html-container]

Finally, you can also create resources selectively with apply -target=NAME.

terraform apply -target=aws_s3_bucket.html_container

Plan: 2 to add, 0 to change, 0 to destroy.

╷

│ Warning: Resource targeting is in effect

│

│ You are creating a plan with the -target option, which means that the result

│ of this plan may not represent all of the changes requested by the current

│ configuration.

│

│ The -target option is not for routine use, and is provided only for

│ exceptional situations such as recovering from errors or mistakes, or when

│ Terraform specifically suggests to use it as part of an error message.

╵

destroy

As its name suggests, this command deletes all managed resources. To gain more control, you can first create a destroy plan with plan -destroy -out=FILE and then pass that file to apply, and you can destroy selectively with the -target=NAME flag.

Here is an example of calling the command directly: it prints the changes and asks for confirmation.

terraform destroy

random_pet.bucket_name: Refreshing state... [id=casual-dolphin]

aws_s3_bucket.html_container: Refreshing state... [id=casual-dolphin-kops-state]

Terraform used the selected providers to generate the following execution

plan. Resource actions are indicated with the following symbols:

- destroy

Terraform will perform the following actions:

# aws_s3_bucket.html_container will be destroyed

- resource "aws_s3_bucket" "html_container" {

- arn = "arn:aws:s3:::casual-dolphin-kops-state" -> null

- bucket = "casual-dolphin-kops-state" -> null

- bucket_domain_name = "casual-dolphin-kops-state.s3.amazonaws.com" -> null

#...

# random_pet.bucket_name will be destroyed

- resource "random_pet" "bucket_name" {

- id = "definite-squid" -> null

- length = 2 -> null

- separator = "-" -> null

Group 2: Project Maintenance

2.1 Information

These commands provide a quick introspection into the project.

providers

Performs a syntax check and, if no errors are encountered, shows the providers required by the configuration.

terraform providers

Providers required by configuration:

.

├── provider[registry.terraform.io/hashicorp/aws] ~> 4.33.0

└── provider[registry.terraform.io/hashicorp/random]

With the lock subcommand, you can also create or update the dependency lock file. It records the newest provider versions that satisfy the version constraints in the Terraform files:

terraform providers lock

- Fetching hashicorp/aws 4.37.0 for linux_amd64...

- Retrieved hashicorp/aws 4.37.0 for linux_amd64 (signed by HashiCorp)

- Fetching hashicorp/random 3.4.3 for linux_amd64...

- Retrieved hashicorp/random 3.4.3 for linux_amd64 (signed by HashiCorp)

- Obtained hashicorp/aws checksums for linux_amd64; All checksums for this platform were already tracked in the lock file

- Obtained hashicorp/random checksums for linux_amd64; All checksums for this platform were already tracked in the lock file

Success! Terraform has validated the lock file and found no need for changes.

The content of the file .terraform.lock.hcl is this:

# This file is maintained automatically by "terraform init"

# Manual edits may be lost in future updates

provider "registry.terraform.io/hashicorp/aws" {

version = "4.37.0"

hashes = [

"h1:fLTymOb7xIdMkjQU1VDzPA5s+d2vNLZ2shpcFPF7KaY=",

// ...

]

}

provider "registry.terraform.io/hashicorp/random" {

version = "3.4.3"

hashes = [

"h1:tL3katm68lX+4lAncjQA9AXL4GR/VM+RPwqYf4D2X8Q=",

"zh:41c53ba47085d8261590990f8633c8906696fa0a3c4b384ff6a7ecbf84339752",

//...

]

}

version

Prints the version of the Terraform binary and of the providers installed for the project.

terraform version

Terraform v1.4.6

on linux_amd64

+ provider registry.terraform.io/hashicorp/aws v4.33.0

+ provider registry.terraform.io/hashicorp/random v3.4.3

Your version of Terraform is out of date! The latest version

is 1.3.4. You can update by downloading from https://www.terraform.io/downloads.html

2.2 Validation

Validation commands can be used in all workflow stages.

validate

Parses all *.tf files and checks whether they are syntactically valid and internally consistent.

When all files are ok, terraform validate prints:

Success! The configuration is valid.

If not, a detailed error message is shown:

╷

│ Error: Unsupported block type

│

│ on main.terraform line 7:

│ 7: ressource "aws_s3_object" "document" {

│

│ Blocks of type "ressource" are not expected here. Did you mean "resource"?

2.3 State Manipulation

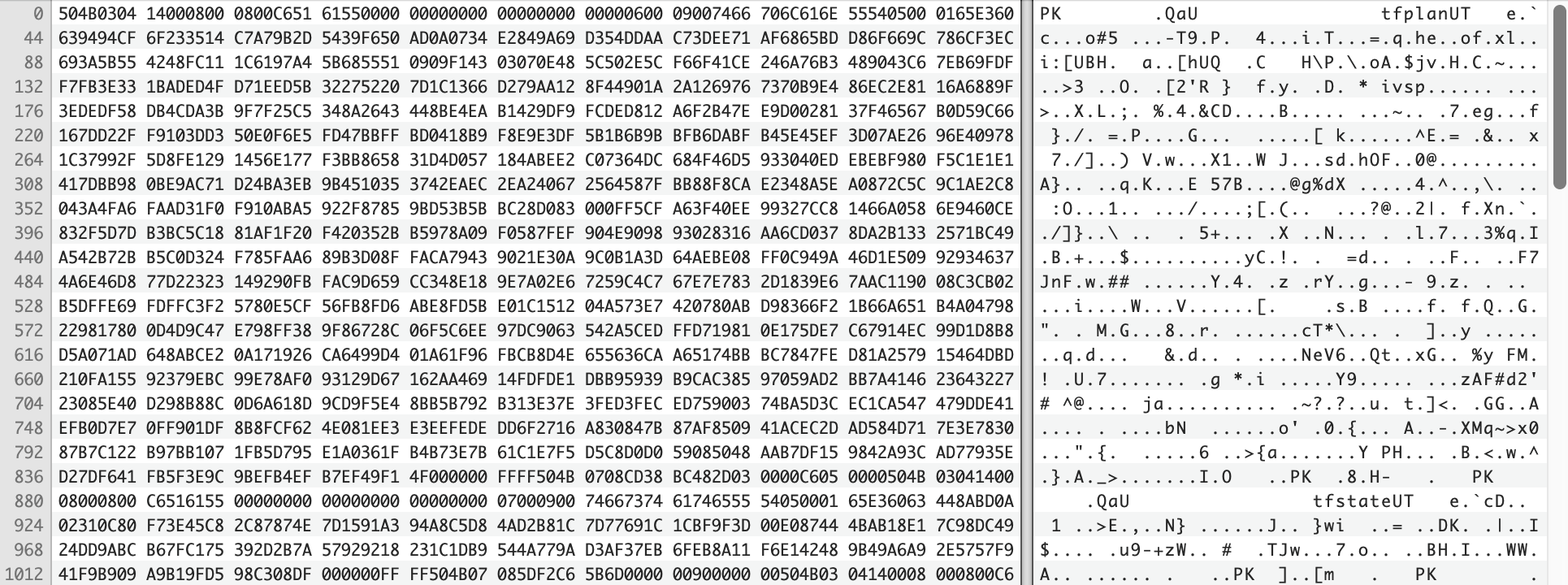

State is essential in Terraform, which is reflected by several commands.

state

Provides fine-grained control to read, update, and delete the state. It has several subcommands; here is a brief description of each:

Show

list: Flat list of resource types and their names

show ADDRESS: Prints the current state of the specified resource

The related top-level commands terraform show (optionally with -json) and terraform output print the complete current state and all stored outputs, respectively.

Update the state

pull: Downloads the state from the configured backend and prints it

rm: Removes resources from the state file (the resources themselves will not be destroyed)

mv SOURCE DESTINATION: Moves a resource to a different address in the state, for example after renaming it in the configuration

Migrate state storage from local file to remote state

push: Updates a remote state from a local state file

replace-provider: Replaces the provider that manages resources in the state

taint

A deprecated command that marks a resource as tainted. This tells Terraform that the resource's actual state may no longer match the desired state, so the resource is replaced during the next apply.

terraform taint aws_s3_bucket.html_container

Resource instance aws_s3_bucket.html_container has been marked as tainted.

Then, during the next plan or apply command, you will see that the tainted resource and the resources that depend on it will be replaced:

# aws_s3_object.document must be replaced

-/+ resource "aws_s3_object" "document" {

~ bucket = "master-dragon-html-container" -> (known after apply) # forces replacement

~ bucket_key_enabled = false -> (known after apply)

~ content_type = "binary/octet-stream" -> (known after apply)

~ etag = "7b16b45a89ae8a557d8c3cead7c2844e" -> (known after apply)

~ id = "html-container" -> (known after apply)

+ kms_key_id = (known after apply)

- metadata = {} -> null

+ server_side_encryption = (known after apply)

~ storage_class = "STANDARD" -> (known after apply)

- tags = {} -> null

~ tags_all = {} -> (known after apply)

+ version_id = (known after apply)

# (4 unchanged attributes hidden)

}

The official documentation states that taint should not be used directly anymore. Instead, a selective apply should be used:

terraform apply -replace=NAME

untaint

The opposite command removes the mark from the resource. Afterwards, plan and apply no longer show that the resource will be replaced:

terraform untaint aws_s3_bucket.html_container

Resource instance aws_s3_bucket.html_container has been successfully untainted.

2.4 Modules and Workspaces

get

With this command, you download the modules referenced in the configuration. When called with the -update flag, it also updates already installed modules to newer versions, respecting any configured version constraints.

terraform get

Downloading registry.terraform.io/terraform-aws-modules/iam/aws 5.5.5 for iam_account...

- iam_account in .terraform/modules/iam_account/modules/iam-account
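The output above stems from a module block in the configuration. A minimal sketch of such a block could look as follows. The version constraint and the account_alias value are assumptions for illustration and are not taken from the original project:

```hcl
module "iam_account" {
  # Registry address and submodule path as shown in the terraform get output
  source  = "terraform-aws-modules/iam/aws//modules/iam-account"
  version = "~> 5.5" # assumed constraint; only matching releases are installed

  account_alias = "example-account" # hypothetical value
}
```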

workspace

Workspaces manage separate states for the same resource configuration, and they let you vary values depending on the selected workspace. Think of a workspace as a context for your resources, like a staging and a production environment.

The command of the same name creates, selects, and deletes Terraform workspaces.

terraform workspace list

* default

terraform workspace new staging

Created and switched to workspace "staging"!

terraform workspace new production

Created and switched to workspace "production"!

terraform workspace select staging

Switched to workspace "staging".

When you create resources in these workspaces, you can see that the state is recorded in different files (when using the local backend).

.

└── terraform.tfstate.d

├── production

│ └── terraform.tfstate

└── staging

└── terraform.tfstate
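Inside the configuration, the name of the selected workspace is available as terraform.workspace. As a sketch, the bucket from main.tf could carry the workspace name as a tag. The tag key Environment is a hypothetical choice:

```hcl
resource "aws_s3_bucket" "html_container" {
  bucket = "${random_pet.bucket_prefix.id}-html-container"

  tags = {
    # Evaluates to "staging" or "production", depending on the selected workspace
    Environment = terraform.workspace
  }
}
```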

force-unlock

In some cases, the state governed within a workspace might stay locked, for example after an interrupted run, and you cannot modify the remote resources anymore. With this command, called as terraform force-unlock LOCK_ID, you can force an unlock.

terraform force-unlock -help

Manually unlock the state for the defined configuration.

This will not modify your infrastructure. This command removes the lock on the

state for the current workspace. The behavior of this lock is dependent

on the backend being used. Local state files cannot be unlocked by another

process.

Group 3: Miscellaneous Commands

fmt

Formats all *.tf files in the current directory.

Call it with -recursive to also traverse all subdirectories, and with -diff to show the applied changes.

terraform fmt -diff -recursive

outputs.tf

--- old/outputs.tf

+++ new/outputs.tf

@@ -1,3 +1,3 @@

output "aws_bucket_id" {

- value = aws_s3_bucket.html_container.bucket_domain_name

+ value = aws_s3_bucket.html_container.bucket_domain_name

}

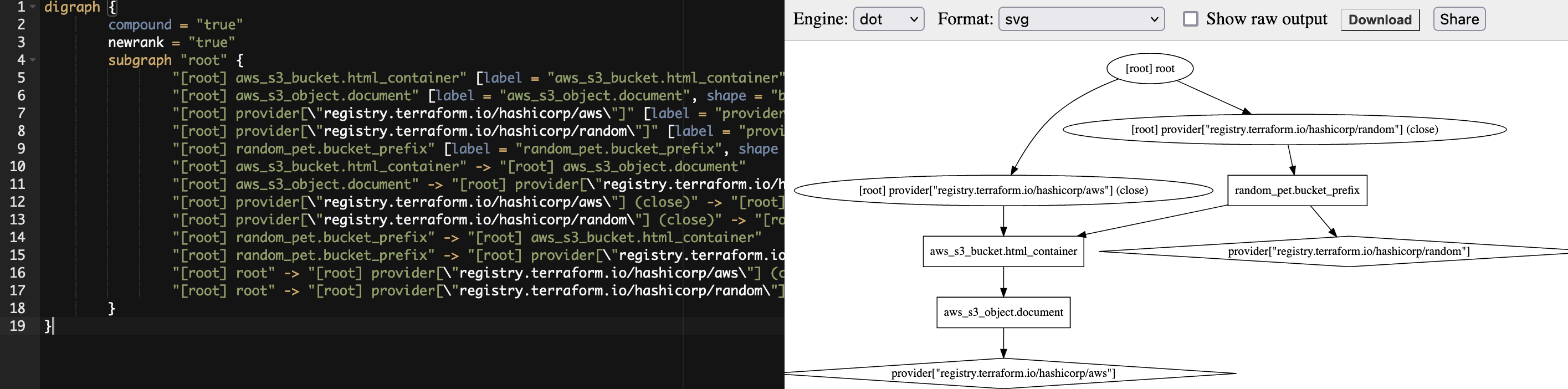

graph

This command creates a graph in the DOT graph description format. It can be used to visualize the operations that other commands would perform: plan, plan with refresh only, plan with destroy, and apply.

terraform graph -type=plan-destroy > graph.dot

The graph file looks as follows:

digraph {

compound = "true"

newrank = "true"

subgraph "root" {

"[root] aws_s3_bucket.html_container" [label = "aws_s3_bucket.html_container", shape = "bo

...

You can view this file with the Graphviz binary or an online tool like Graphviz Viewer.

console

Opens an interactive console that loads the project's configuration and lets you evaluate expressions and explore the functions of the Terraform language.

terraform console

> toset([1,2,2,3])

toset([

1,

2,

3,

])

login

In addition to the Terraform CLI binary, Terraform Cloud is a platform that provides advanced capabilities for working in teams and running Terraform commands. The login command obtains an API token and stores it locally, so that subsequent commands are executed against the cloud.

terraform login

Terraform will request an API token for app.terraform.io using your browser.

If login is successful, Terraform will store the token in plain text in

the following file for use by subsequent commands:

~/.terraform.d/credentials.tfrc.json

Do you want to proceed?

Only 'yes' will be accepted to confirm.
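Once logged in, a project can be connected to Terraform Cloud with a cloud block. The following is a sketch only: the organization and workspace names are hypothetical, and the block takes the place of a backend configuration in the project.

```hcl
terraform {
  cloud {
    organization = "example-org" # hypothetical

    workspaces {
      name = "aws-s3-tutorial-staging" # hypothetical
    }
  }
}
```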

logout

This command removes the Terraform Cloud API token.

terraform logout

No credentials for app.terraform.io are stored.

Conclusion

This article provided a detailed overview of the Terraform commands. The commands are grouped into a) workflow phases (init, plan, apply, destroy), b) project maintenance (print version, validate, manage state, perform updates), and c) miscellaneous (create a graphical representation of changes, format files). To facilitate learning, I showed all commands applied in the context of creating an AWS S3 bucket.