I have been working on my robot RADU for more than a year. I could not fulfill all of its goals, and sadly, the robot is not autonomous. The journey itself was intricate, challenging, and mostly fun, with some rough edges. This article summarizes the project, recaps the goals and how far I got with each, and presents the hard lessons learned. It’s not easy to talk about failure, but I hope that you can take some knowledge from this description and make different decisions when working on similar projects.

Project Outline Recapped

My robot project started in 2021, and the first article of the series was published in March 2021. I have been steadily working on it, with some breaks in which I pursued side projects that were related to or inspired by this overall project.

Overall, I defined six different phases that should build the robot step-by-step. Here is what I achieved.

Note: The phase names I use here are not the original ones, but they better reflect what each phase is about.

Software & Hardware Essentials

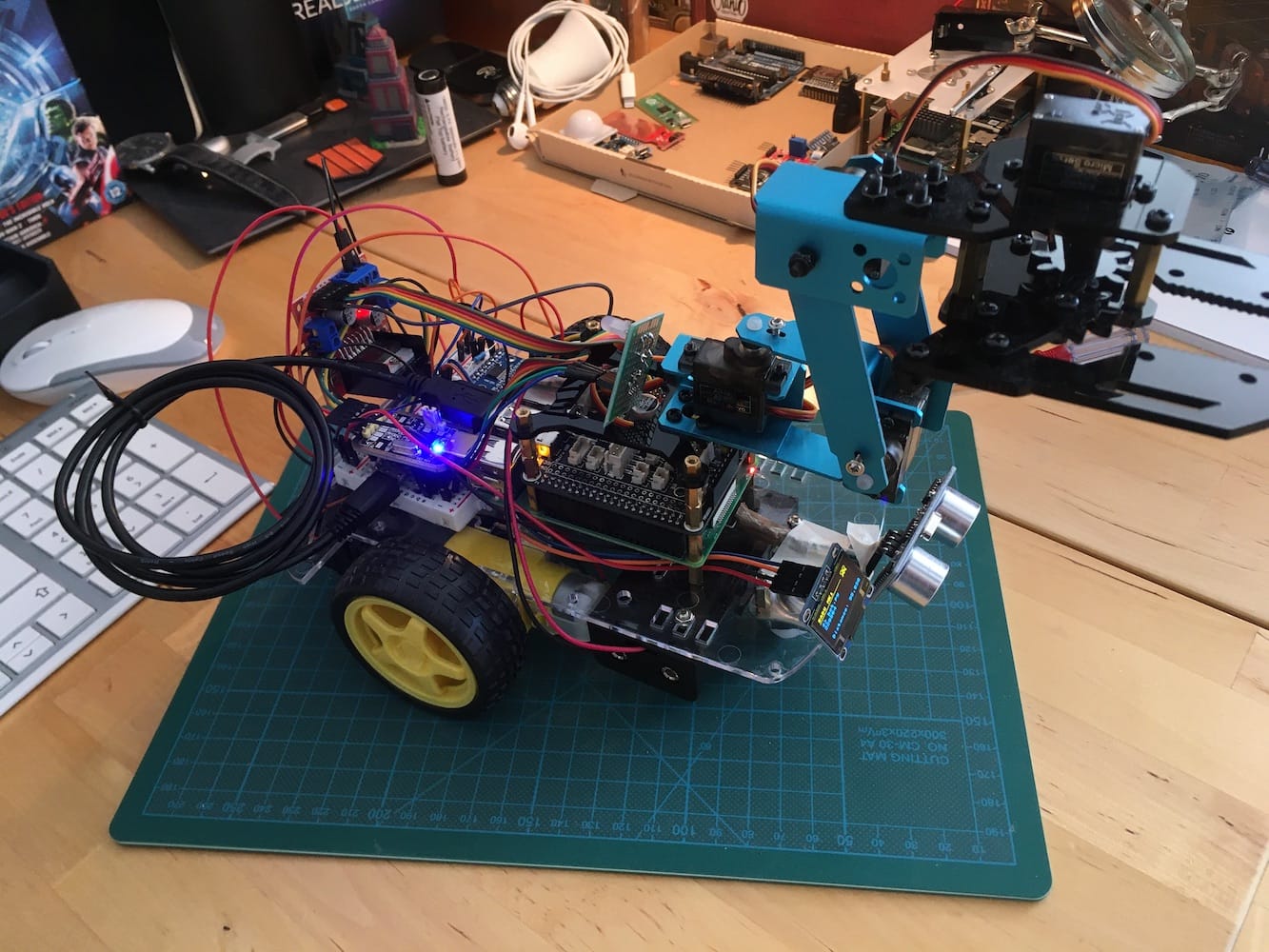

In this phase, researching hardware and software for robotics set the foundations of the project. This phase was very insightful and inspiring: Learning about microcontrollers and single board computers, investigating robotics hardware such as actuators and sensors, and exploring frameworks for robotic projects literally opened a new world to me. Based on this understanding, the bill of materials defined the hardware of my robot: A wheeled chassis, L293 motor shields, and distance sensors, controlled by a combination of Arduino and Raspberry Pi.

Robot Prototype

Following the exploration phase, I started to build the robot. Once I got all the components, I began to build RADU M1 from scratch, starting with the chassis with 4 wheels, a motor controller, and the Arduino. The Arduino provided a simple command-line interface - see this blog post about a generic serial terminal - that activated the motors. With this foundation, the other components were added: A distance sensor, an LED matrix, and an IR receiver. This phase concluded with a movable, IR-controlled robot, and I was very happy. Furthermore, working with microcontrollers in general was very interesting, and I wrote 17 blog articles to cover various topics.
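To illustrate the idea of such a command-line interface, here is a minimal sketch in Python. It is not the Arduino code from the project: the command names `forward` and `stop`, the reply strings, and the handler functions are all hypothetical and only show how a text line received over serial could be mapped to a motor action.

```python
def dispatch(line, handlers):
    """Parse one text line like 'forward 150' and call the matching handler."""
    parts = line.strip().split()
    if not parts:
        return "ERR empty command"
    name = parts[0].lower()
    handler = handlers.get(name)
    if handler is None:
        return "ERR unknown command: " + name
    try:
        # All arguments are expected to be integers (an assumption of this sketch)
        args = [int(p) for p in parts[1:]]
    except ValueError:
        return "ERR arguments must be integers"
    return handler(*args)


# Hypothetical handlers: a real robot would drive the motor controller here
def forward(speed):
    return "OK forward " + str(speed)


def stop():
    return "OK stop"


HANDLERS = {"forward": forward, "stop": stop}

print(dispatch("forward 150", HANDLERS))
print(dispatch("jump", HANDLERS))
```

Keeping the handlers in a dictionary means a new command is one more entry, and the parsing code stays untouched.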

Robot Simulation

With the first manually controllable prototype and all the essential hardware running, the next phase was meant to add the software needed to achieve higher-level goals. Stemming from the initial investigation, this phase was all about using ROS, the Robot Operating System.

ROS is an open-source robotics middleware that has been developed for over 15 years. The ROS architecture is logical and convincing as a solution for such complex systems. ROS processes are started as nodes on different devices, connected via LAN, WLAN or serial. Nodes publish well-defined messages to topics, and exchange information via a publish/subscribe model. With these building blocks, different aspects of a robot are realized and combined: Collecting and interpreting sensor data, reading the robot's position and velocity, and issuing commands for movement and navigation.
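The publish/subscribe model can be sketched in a few lines of plain Python. This is a toy, in-process stand-in and deliberately not the ROS API: the `MessageBus` class, the topic name `/distance`, and the message layout are assumptions made up for this illustration.

```python
class MessageBus:
    """Toy in-process message bus: callbacks subscribe to named topics."""

    def __init__(self):
        self._subscribers = {}

    def subscribe(self, topic, callback):
        # Register a callback that is invoked for every message on the topic
        self._subscribers.setdefault(topic, []).append(callback)

    def publish(self, topic, message):
        # Deliver the message to all subscribers and return how many received it
        callbacks = self._subscribers.get(topic, [])
        for callback in callbacks:
            callback(message)
        return len(callbacks)


bus = MessageBus()
received = []

# A "navigation" component listens to distance readings
bus.subscribe("/distance", received.append)

# A "sensor" component publishes a reading without knowing who listens
delivered = bus.publish("/distance", {"range_m": 0.42})
print(delivered, received)
```

The publisher and the subscriber only share the topic name and the message format. This decoupling is what lets ROS nodes run on different devices, with the network transport taking the place of the direct function call used here.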

A ROS robot typically starts as a simulation because you can test the complete software stack before installing it on the robot. My basic robot model was easy to make - see my ROS URDF tutorial - but creating a model with all physical properties was very challenging, as documented in migrating URDF from ROS1 to ROS2.

Environmental Localization, Mapping and Navigation

To understand the simulation aspect of a ROS robot, I started to research how to combine Gazebo simulation with ROS and to learn the different components of the ROS navigation stack. I understood the required ROS concepts and nodes, and was ready to implement self-navigation, but then ran into a crucial technical problem.

Environmental Visualization

In the simulation, the robot detects obstacles with a 3D point cloud sensor or a Lidar. Considering the available hardware and price, I decided to get the Intel RealSense D435 camera. It has a perfect form factor for small-scale robots, and an actively developed ROS software stack to read image and point cloud data from this device. I had no trouble mounting this camera on my robot. But when I accessed the camera via ROS, all streamed images gave me the impression of being "sloppy".

The sloppy image perception led to several investigations: Checking the Raspberry Pi Wi-Fi capabilities, and systematically comparing the speed of image and point cloud data transmissions in ROS. Eventually, I needed to switch back from ROS2 to ROS1 to get the camera working at all!

This was a crucial turning point: I could not find the motivation to redo the simulation in ROS1, and could not see the benefit of using the simulation at all when I could write the control software with pure Python and MicroPython!

To find the fun again in my robot project, RADU evolved from using an Arduino to a Raspberry Pico for controlling the motors and the distance sensor. And after investigating gamepad controls and implementing wheel and arm movements, the robot worked and was fun to move around.

Ultimately, I abandoned ROS altogether.

Pickup & Delivery Robot

Technically, I did not arrive at this stage. But although the bandwidth problem persisted, I investigated my robot's design and how to evolve it to serve as a platform for this goal. To kickstart this, I purchased and tested two robot kits. One is a robotic gripper, for which I wrote a custom Python script for sending movement commands. This gripper could be attached on top of RADU. The other kit is an all-in-one solution: A robot tank chassis with a gripper. For this kit, I investigated the supplied software stack, made tweaks, and converted it to be operable with ROS. I also added the RealSense camera to it and used it for the late-stage testing of mapping and navigation.

Painful Lessons Learned

Reflecting upon this project, what are the painful lessons learned?

First of all, I underestimated the complexity of ROS. Although you find many examples for ROS1, the tried and tested approach to get something done always involved testing the nuances of each example, studying launch files and parameters, and reading error messages. It’s a time-consuming effort to get something running. In the very late stages of the project, when the bandwidth problem became an unsolvable obstacle, I stumbled upon others reporting similar difficulties:

Okay, here is where hacky stuff starts. I spent 3 days trying different drivers and approaches and nothing worked - as soon as I would try accessing two streams simultaneously the Kinect would start timing out as you can see in Screenshot 3. I tried everything: better power supply, older commits of libfreenect and freenect_stack ...

In addition, it’s very disheartening when you read about other projects that worked with the same setup - RealSense D435 on Raspberry Pi 4 - and could not find a solution either.

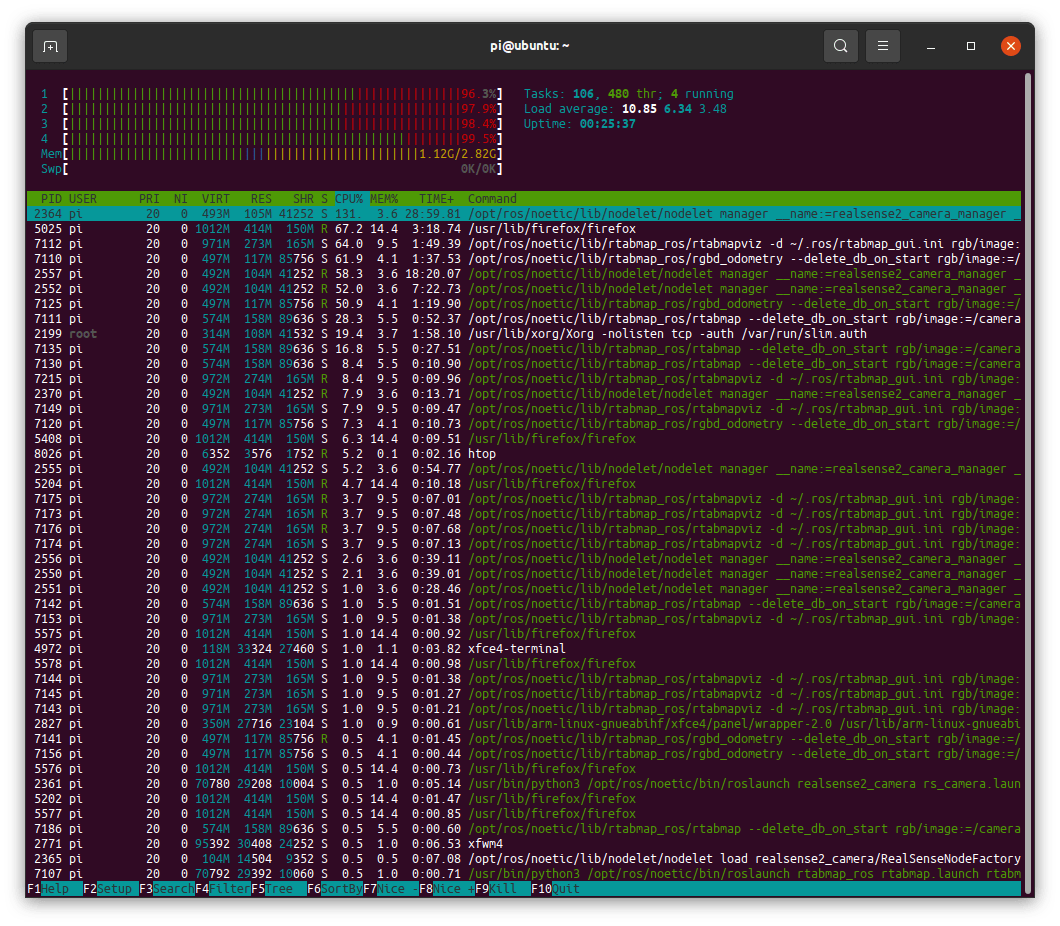

Second, using ROS on a small-scale computer causes problems. I chose the Raspberry Pi 4 because of its form factor for building a small, autonomous robot. But its hardware constraints do not go well with the rather resource-hungry ROS. Only very late in the project, while occupied with the bandwidth problem, did I see just how many resources are required. For example, the ROS node for collecting raw image data and point clouds alone used about 40% of the Raspberry Pi 4 CPUs. Launching a GUI on the Raspberry Pi 4 and starting the image data collection alongside the rtabmap node led to 100% CPU utilization.

Third is the bandwidth problem. A great amount of time was spent investigating why transferring image data from the Raspberry Pi to my Linux workstation was so slow. Unfortunately, I started at the very wrong side of the problem: Investigating and optimizing the software stack, including Ubuntu Server vs. Raspberry Pi OS, compiling different versions of the RealSense library and ROS nodes, and tuning parameters could not help with the very sloppy image transfer. An ethernet cable fixed all this. But a small form factor robot driving around and dragging a 10 m ethernet cable behind it? So I addressed the Wi-Fi speed problem next. I changed the Wi-Fi router, added Wi-Fi repeaters and access points, made speed measurements comparing 2.4 GHz Wi-Fi and 5 GHz Wi-Fi, and even used an external USB Wi-Fi adapter with an antenna. The maximum transfer speed between the Raspberry Pi 4 and my Linux workstation was 20 Mb/s. That is too little for transporting the image data, and even when using only a few topics, ROS still showed extensive drops in topic publication frequency and bandwidth.
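A rough back-of-the-envelope calculation shows why 20 Mb/s is not enough for raw image data. The stream settings below are assumptions for illustration, not measurements from this project: 640x480 pixels at 30 frames per second, 3 bytes per color pixel and 2 bytes per depth pixel, with no compression. I also read the measured 20 Mb/s as megabits per second.

```python
def raw_stream_mbit_per_s(width, height, bytes_per_pixel, fps):
    """Bandwidth of an uncompressed image stream in megabits per second."""
    bits_per_frame = width * height * bytes_per_pixel * 8
    return bits_per_frame * fps / 1_000_000


LINK_MBIT_PER_S = 20  # measured Wi-Fi throughput, read as megabits per second

# Assumed stream settings (hypothetical, see lead-in)
WIDTH, HEIGHT, FPS = 640, 480, 30

color = raw_stream_mbit_per_s(WIDTH, HEIGHT, 3, FPS)  # 8-bit RGB
depth = raw_stream_mbit_per_s(WIDTH, HEIGHT, 2, FPS)  # 16-bit depth

# Highest color frame rate that would fit into the measured link
max_color_fps = LINK_MBIT_PER_S / raw_stream_mbit_per_s(WIDTH, HEIGHT, 3, 1)

print(f"color: {color:.1f} Mbit/s, depth: {depth:.1f} Mbit/s")
print(f"color frames per second that fit the link: {max_color_fps:.1f}")
```

Under these assumptions, a single raw color stream needs roughly eleven times the measured throughput, and fewer than three raw color frames per second fit through the link. Compressed image transport or a lower resolution would shift the numbers, but the order of magnitude explains the sloppy images.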

Measurement of Success

It was an ambitious and audacious goal to build an autonomous pickup & delivery robot from scratch. I could not fulfill the ultimate goal, but I still see a great measure of success. At the end of this project, I have a solid understanding of and practical experience with the microcontrollers Arduino and Raspberry Pico, as well as the programming skills to write sensor interfacing software with C/C++ and Python. I know how to connect microcontrollers with sensors by manual wiring, and had pleasant experiences with soldering. Continuing from there, I learned and practiced using ROS for robotic projects, learned the tools RViz and Gazebo, wrote custom ROS nodes that listen to topics, and made a fully functional simulation. Finally, I built two prototypes of the robot, and worked with two robotic kits to make them ROS compatible.

Additional projects were kicked off too: Programming microcontrollers in general, and the Raspberry Pico specifically, for which I even wrote a shift register library. The strong fascination for Arduino and Raspberry Pi culminated in exploring home automation and adding custom temperature and humidity sensors, which will be shown in future articles.

Future of Robotics Project

So, is there a future for this goal? While writing these lines, new ideas came up: Exchange the Raspberry Pi 4 for a Compute Module and get Wi-Fi 6 running, turn the Raspberry Pi 4 into a Wi-Fi access point itself to solve the speed equation, or change the camera setup. But after over a year, I'm reluctant to spend more time.

Is there a simpler way to get an autonomous robot running? The most pleasant experiences in this project were the manual assembly and programming of RADU MK2: Adding the motor, a display, and the distance sensor, writing a Python program on the Pico for controlling the sensors, and using the Raspberry Pi just for receiving commands. There are many inspiring Raspberry Pi or Arduino based robot projects. Could I use one of these, add a camera sensor that recognizes its environment, and then add autonomous behavior? From scratch, without using ROS?

What remains are the learnings, the experiences, and the unbroken fascination with microcontrollers. So, what will become of the autonomous robot? I leave this goal as it is - unfinished but ended. From today, I will also reduce the blog publishing schedule to one article every second week.