Docker is a great tool for creating portable containers that run your application. Images are built, then pushed to a registry, and from there pulled to the machines that run the applications. But did you know that each machine has an architecture, and that the architecture of the machine that builds the image determines the image's architecture?

I didn't really understand this issue until I wanted to use my Raspberry Pi as a testing environment for my applications. I was building my images as always on my MacBook, and then pushed them to my private Docker registry. When the application was deployed on the Raspberry Pi, it never started, and the container kept crashing. The only hint given was this error message: exec format error. This error means that the image's architecture is different from that of the target machine, and therefore the image cannot run.
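The mismatch behind this error can be checked before deploying. The following is a minimal sketch, not a complete tool: the function names docker_arch and arch_matches are made up for this example, only a few common machine names are mapped, and only exact matches count as compatible.

```shell
#!/bin/sh
# Map a kernel machine name (as printed by `uname -m`) to the architecture
# name Docker uses. Only a few common values are covered in this sketch.
docker_arch() {
  case "$1" in
    x86_64)        echo amd64 ;;
    aarch64|arm64) echo arm64 ;;
    armv7l|armv6l) echo arm ;;
    *)             echo unknown ;;
  esac
}

# Succeed only if the image architecture equals the machine's architecture.
arch_matches() {
  image_arch="$1"
  machine="$2"
  [ "$image_arch" = "$(docker_arch "$machine")" ]
}
```

On a real system, the first argument could come from docker image inspect --format '{{.Architecture}}' followed by the image name, and the second from uname -m on the target machine. An amd64 image checked against an armv7l machine fails the test, which is the situation described above.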

The obvious solution is to build the images on the machines on which they run. The better solution is to build images that can run on multiple architectures. This article shows three approaches so that you can make an educated choice when you encounter a similar problem.

Solution 1: Use Multiarch Images

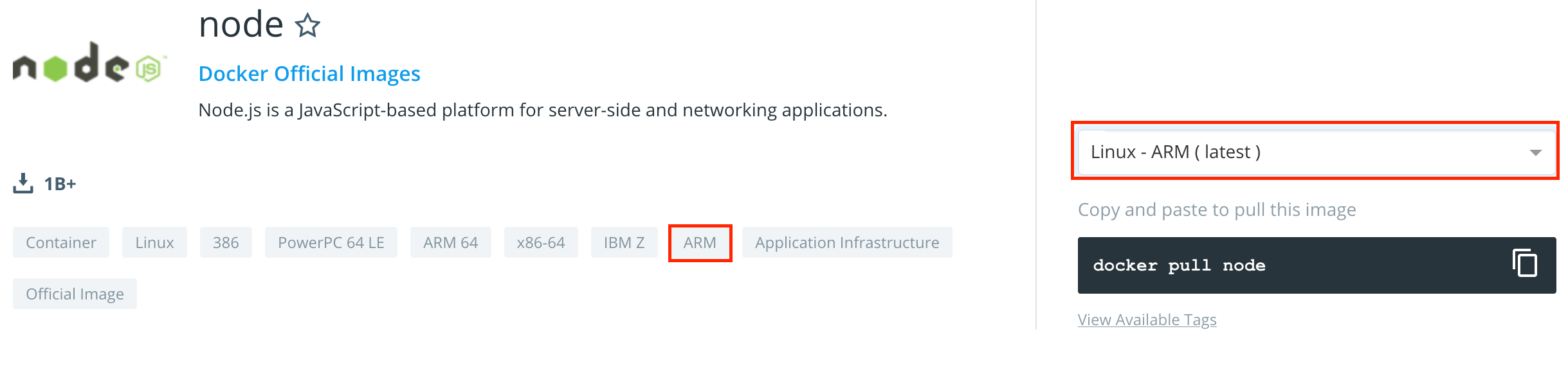

Most Docker base images support multiple architectures. Let’s consider the Docker Node.js base image for running Node.js applications. In its listing you see the tags arm and x86_64.

Now, if you use this image, the build runs for the architecture of the machine on which you build. Inside the base image's Dockerfile, the detected architecture is used during the build process to download the Node.js binary for this very same architecture. The following declarations are the reason why the image, built on amd64, will not run on arm, and vice versa.

    RUN ARCH= && dpkgArch="$(dpkg --print-architecture)" \
      && case "${dpkgArch##*-}" in \
        amd64) ARCH='x64';; \
        ppc64el) ARCH='ppc64le';; \
        s390x) ARCH='s390x';; \
        arm64) ARCH='arm64';; \
        armhf) ARCH='armv7l';; \
        i386) ARCH='x86';; \
        *) echo "unsupported architecture"; exit 1 ;; \
      esac \
      ...
      curl -fsSLO --compressed "https://nodejs.org/dist/v$NODE_VERSION/node-v$NODE_VERSION-linux-$ARCH.tar.xz" \
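To see what this excerpt resolves to on different machines, the same mapping can be tried outside of Docker. This is a sketch that mirrors the case statement above; the function names node_arch and node_dist_url are invented for the illustration, and the version number used with them is only an example value.

```shell
#!/bin/sh
# Same mapping as the Dockerfile excerpt: Debian architecture name -> the
# architecture name used in Node.js download file names.
node_arch() {
  case "${1##*-}" in
    amd64)   echo x64 ;;
    ppc64el) echo ppc64le ;;
    s390x)   echo s390x ;;
    arm64)   echo arm64 ;;
    armhf)   echo armv7l ;;
    i386)    echo x86 ;;
    *)       echo "unsupported architecture" >&2; return 1 ;;
  esac
}

# Print the download URL for a Node.js version and Debian architecture.
node_dist_url() {
  version="$1"
  arch="$(node_arch "$2")" || return 1
  echo "https://nodejs.org/dist/v${version}/node-v${version}-linux-${arch}.tar.xz"
}
```

Calling node_dist_url 12.16.1 armhf prints a URL ending in linux-armv7l.tar.xz, while the same call with amd64 ends in linux-x64.tar.xz. The binary baked into the image therefore depends on where the build ran.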

If you use such an image, but want to support different architectures, you will need to do the following steps:

- Provide one machine per architecture

- Synchronize the application source code and configuration between all machines

- Execute build commands on all machines

- Push the image with a specific image name, like app-arm, or image tag, like app:0.1-arm, to a Docker registry

- In your deployment config, use the specific image name or tag
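The tagging convention from these steps can be sketched as a small helper that each build machine runs. The function names image_ref and print_build_steps are assumptions for this example, and the commands are only printed, not executed.

```shell
#!/bin/sh
# Compose an architecture-specific image reference such as app:0.1-arm.
image_ref() {
  name="$1"
  version="$2"
  arch="$3"
  echo "${name}:${version}-${arch}"
}

# Print (not run) the commands a build machine would execute for its image.
print_build_steps() {
  ref="$(image_ref "$1" "$2" "$3")"
  echo "docker build -t ${ref} ."
  echo "docker push ${ref}"
}
```

Running print_build_steps app 0.1 arm on the arm machine and print_build_steps app 0.1 amd64 on the amd64 machine yields two distinct tags, and the deployment config then has to reference the right one per target.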

Solution 2: Use Architecture-Specific Base Image

Another solution is to use images that are specifically created for a target architecture. Consider this image for Alpine Linux on arm.

You can use this image to build your application for the target architecture. This requires the following steps:

- Add a separate Dockerfile per target architecture

- Push the image with a specific image name, like app-arm, or image tag, like app:0.1-arm, to a Docker registry

- In your deployment config, use the specific image name or tag
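With one Dockerfile per architecture, all builds can be driven from a single machine. The sketch below only prints the build commands; the file naming scheme Dockerfile.arm and Dockerfile.amd64 and the function name print_arch_builds are assumptions, not something the steps above prescribe.

```shell
#!/bin/sh
# Print (not run) one build command per target architecture. The naming
# scheme Dockerfile.<arch> is an assumption made for this sketch.
print_arch_builds() {
  name="$1"
  version="$2"
  shift 2
  for arch in "$@"; do
    echo "docker build -f Dockerfile.${arch} -t ${name}:${version}-${arch} ."
  done
}
```

For example, print_arch_builds app 0.1 arm amd64 prints two build commands, one per Dockerfile, which shows the duplication this approach brings with it.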

Because you do not need to synchronize the code between different machines, this solution is better than the first one. But you need to duplicate Dockerfile instructions, and you need to differentiate the correct images in your deployment configurations.

Solution 3: Use Multiarch Build

Multiarch builds are a Docker feature that allows you to build one image that runs on any number of target architectures. How does it work? A Docker image consists of multiple layers. In a multiarch image, some layers are architecture-specific, and others contain generic steps. Each architecture gets its own manifest, and a manifest list groups them under one image name, so that the Docker daemon can pick and assemble the variant that matches the machine on which the image runs.
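The selection step can be illustrated with a toy model. This is not how Docker is implemented: the manifest list is reduced to plain text lines, the digests are made-up placeholders, and the function names manifest_list and select_manifest exist only in this sketch.

```shell
#!/bin/sh
# Toy manifest list: one line per platform with the digest of its manifest.
# The digests are placeholders, not real image digests.
manifest_list() {
  cat <<'EOF'
linux/amd64 sha256:placeholder-amd64
linux/arm/v7 sha256:placeholder-armv7
EOF
}

# Print the manifest digest for the given platform, or fail if none matches.
select_manifest() {
  platform="$1"
  manifest_list | awk -v p="$platform" \
    '$1 == p { print $2; found = 1 } END { exit !found }'
}
```

A Raspberry Pi asking for linux/arm/v7 and a server asking for linux/amd64 get different manifests from the same image name, while a platform that is not in the list gets nothing. For a real image, docker buildx imagetools inspect followed by the image name prints the actual manifest list.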

Following this official Docker blog post, you enable multiarch builds with these steps:

- Install Docker Desktop Community version 2.0.4.0 or higher

- Enable experimental features in Docker, and restart Docker Desktop

- Create a new multiarch builder by executing

      docker buildx create --name multiarch

- Bootstrap the new builder by executing

      docker buildx use multiarch && docker buildx inspect --bootstrap

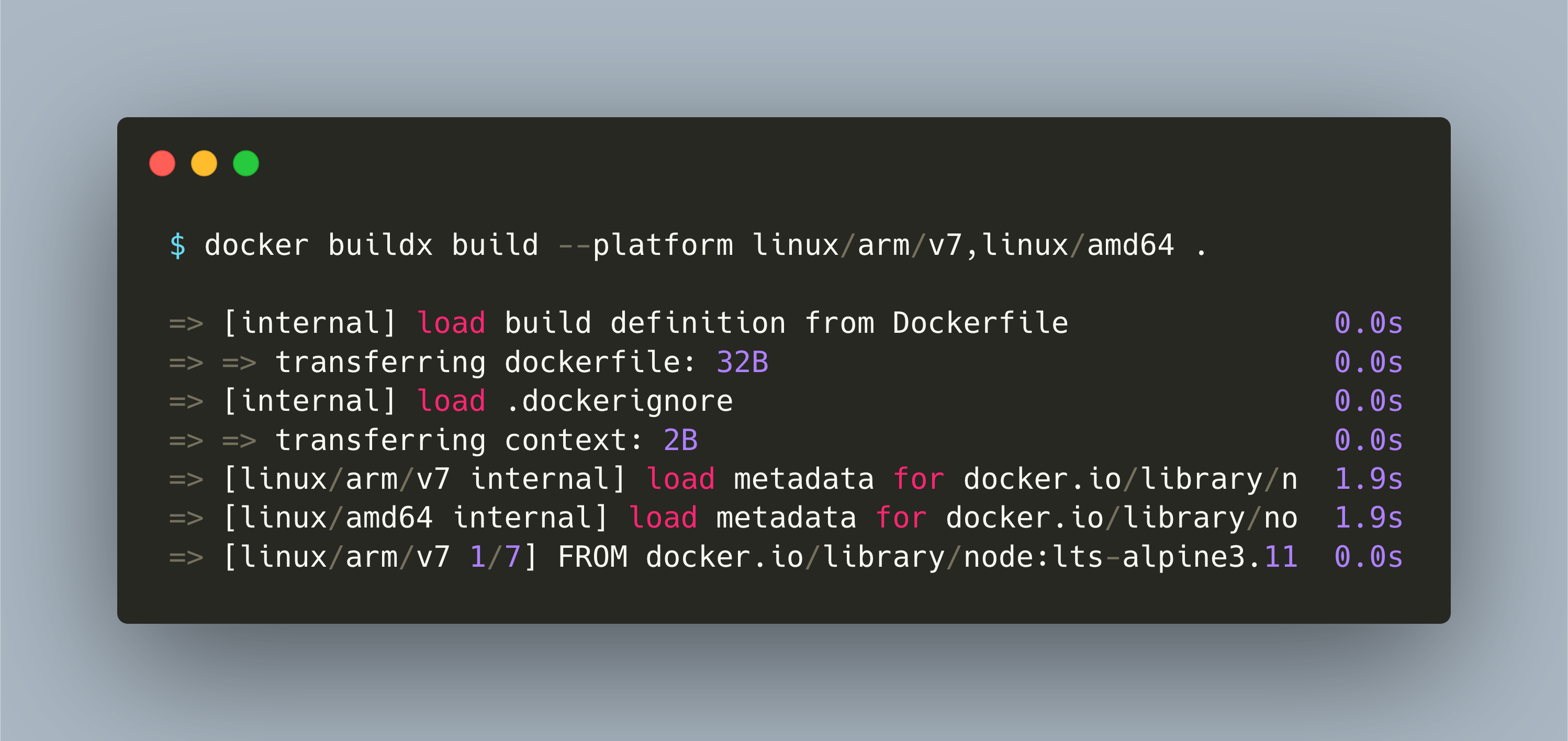

Now you can execute multiarch builds with the command docker buildx build. This command works just like docker build, but it has a new parameter called --platform, in which you specify the target architectures for the build.
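The value of --platform is a comma-separated list of targets. When the targets live in a script as separate words, a small helper can join them. This is a sketch; the function name join_platforms is invented for this example.

```shell
#!/bin/sh
# Join all arguments with commas, the format that --platform expects.
# The subshell keeps the changed IFS from leaking into the caller.
join_platforms() {
  (
    IFS=,
    printf '%s\n' "$*"
  )
}
```

The result can then be passed along, for example as --platform "$(join_platforms linux/arm/v7 linux/amd64)" in a docker buildx build call.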

Let's see a multiarch build in action. Note that you need access to a registry, either your own private one or a public one, to use the produced image.

    » docker buildx build \
        -f build/Dockerfile --platform linux/arm/v7,linux/amd64 \
        -t my-registry.com/app-multiarch \
        --push .
    => [internal] load build definition from Dockerfile 0.0s
    => => transferring dockerfile: 32B 0.0s
    => [internal] load .dockerignore 0.0s
    => => transferring context: 2B 0.0s
    => [linux/arm/v7 internal] load metadata for docker.io/library/n 1.9s
    => [linux/amd64 internal] load metadata for docker.io/library/no 1.9s
    => [linux/arm/v7 1/7] FROM docker.io/library/node:lts-alpine3.11 0.0s
    => => resolve docker.io/library/node:lts-alpine3.11@sha256:45694 0.0s
    => [internal] load build context 0.0s
    => => transferring context: 246B 0.0s
    => CACHED [linux/arm/v7 2/7] WORKDIR /etc/lighthouse-api 0.0s
    => [linux/amd64 1/7] FROM docker.io/library/node:lts-alpine3.11@ 0.0s
    => => resolve docker.io/library/node:lts-alpine3.11@sha256:45694 0.0s
    => CACHED [linux/amd64 2/7] WORKDIR /etc/lighthouse-api 0.0s
    => [linux/arm/v7 3/7] COPY package.json . 0.0s
    => [linux/amd64 3/7] COPY package.json . 0.1s
    => [linux/arm/v7 4/7] RUN npm i 154.2s
    => [linux/amd64 4/7] RUN npm i 24.4s
    => [linux/amd64 5/7] COPY app.js . 0.3s
    => [linux/amd64 6/7] COPY src/ ./src 0.0s
    => [linux/amd64 7/7] RUN ls -la 0.1s
    => [linux/arm/v7 5/7] COPY app.js . 0.4s
    => [linux/arm/v7 6/7] COPY src/ ./src 0.0s
    => [linux/arm/v7 7/7] RUN ls -la 0.2s
    => exporting to image 28.5s
    => => exporting layers 8.6s
    => => exporting manifest sha256:12f256c909736f60a3c786079494e3fd 0.0s
    => => exporting config sha256:e6a5e06df9589029aa12891b6dcca850c4 0.0s
    => => exporting manifest sha256:e42c0b401ae1fcd06c2cc479e05b3b1d 0.0s
    => => exporting config sha256:39764e9d27b4b38669a7a1545e384338e8 0.0s
    => => exporting manifest list sha256:09920f1fe291b0fa47692580617 0.0s
    => => pushing layers 18.7s
    => => pushing manifest for my-registry.com/app-multiarch 1.2s

A multiarch build consists of roughly three steps:

- Download the base image's manifest for each architecture

- Start one build per architecture, copy the build context, and run all instructions from the Dockerfile

- Assemble the resulting manifest files, and push all layers to the registry

Compared to the other approaches:

- No dedicated machines for building

- Configure and build the application on only one machine

- No separate Dockerfile per architecture

- No specific image name or image tag

- No changes to deployment configurations

Conclusion

Docker multiarch builds are the recommended solution. You do not need to change anything in your build configuration. You do not need dedicated build machines per target architecture. And you don’t need to differentiate image names per target architecture. Just deploy the same image, with the same name, to your staging and production environments. Just set up the docker buildx command and you are ready to go multiarch.