With Spacy, a sophisticated NLP library, differently trained models for a variety of NLP tasks can be used. From tokenization to part-of-speech tagging to entity recognition, Spacy produces well-designed Python data structures and powerful visualizations too. On top of that, different language models can be loaded and fine-tuned to accommodate NLP tasks in specific domains. Finally, Spacy provides a powerful pipeline object, facilitating mixing built-in and custom tokenizer, parser, tagger and other components to create language models that support all desired NLP tasks.

This article introduces Spacy. You will learn how to install the library, load models, and apply text processing and text semantics tasks, and finally how to customize a spacy model.

The technical context of this article is Python v3.11 and spacy v3.7.2. All examples should work with newer library versions too.

Spacy Library Installation

The Spacy library can be installed via pip:

python3 -m pip install spacy

All NLP tasks require a model to be loaded first. Spacy provides models build on different corpora and for different languages as well. See the full list of models. In general, the models can be distinguished into the size of its corpora, which leads to different results during NLP tasks, and the technology used to build the model, which is an unknow internal format or transformer-based models, such as Berta.

To load a specific model, following snippet can be used:

python -m spacy download en_core_web_lg

NLP Tasks

Spacy supports the following tasks:

- Text processing

- Tokenization

- Lemmatization

- Text Syntax

- Part-of-speech tagging

- Text Semantics

- Dependency Parsing

- Named Entity Recognition

- Document Semantics

- Classification

Furthermore, Spacy supports these additional features:

- Corpus Management

- Word Vectors

- Custom NLP Pipeline

- Model Training

Text Processing

Spacy applies text processing essentials automatically when one of the pretrained language model is used. Technically, text processing happens around a configurable pipeline object, an abstraction similar to the SciKit Learn pipeline object. This processing always begins with tokenization, and then adds additional data structures that enrich the information of parsed text. All of these tasks can be customized too, e.g. swapping the tagger component. The following descriptions focus only on the built-in features when using pre-trained models.

Tokenization

Tokenization is the first step and straightforward to apply: Apply a loaded model to a text, and tokens emerge.

import spacy

nlp = spacy.load('en_core_web_lg')

# Source: Wikipedia, Artificial Intelligence, https://en.wikipedia.org/wiki/Artificial_intelligence

paragraph = '''Artificial intelligence was founded as an academic discipline in 1956, and in the years since it has experienced several waves of optimism, followed by disappointment and the loss of funding (known as an "AI winter"), followed by new approaches, success, and renewed funding. AI research has tried and discarded many different approaches, including simulating the brain, modeling human problem solving, formal logic, large databases of knowledge, and imitating animal behavior. In the first decades of the 21st century, highly mathematical and statistical machine learning has dominated the field, and this technique has proved highly successful, helping to solve many challenging problems throughout industry and academia.'''

doc = nlp(paragraph)

tokens = [token for token in doc]

print(tokens)

# [Artificial, intelligence, was, founded, as, an, academic, discipline

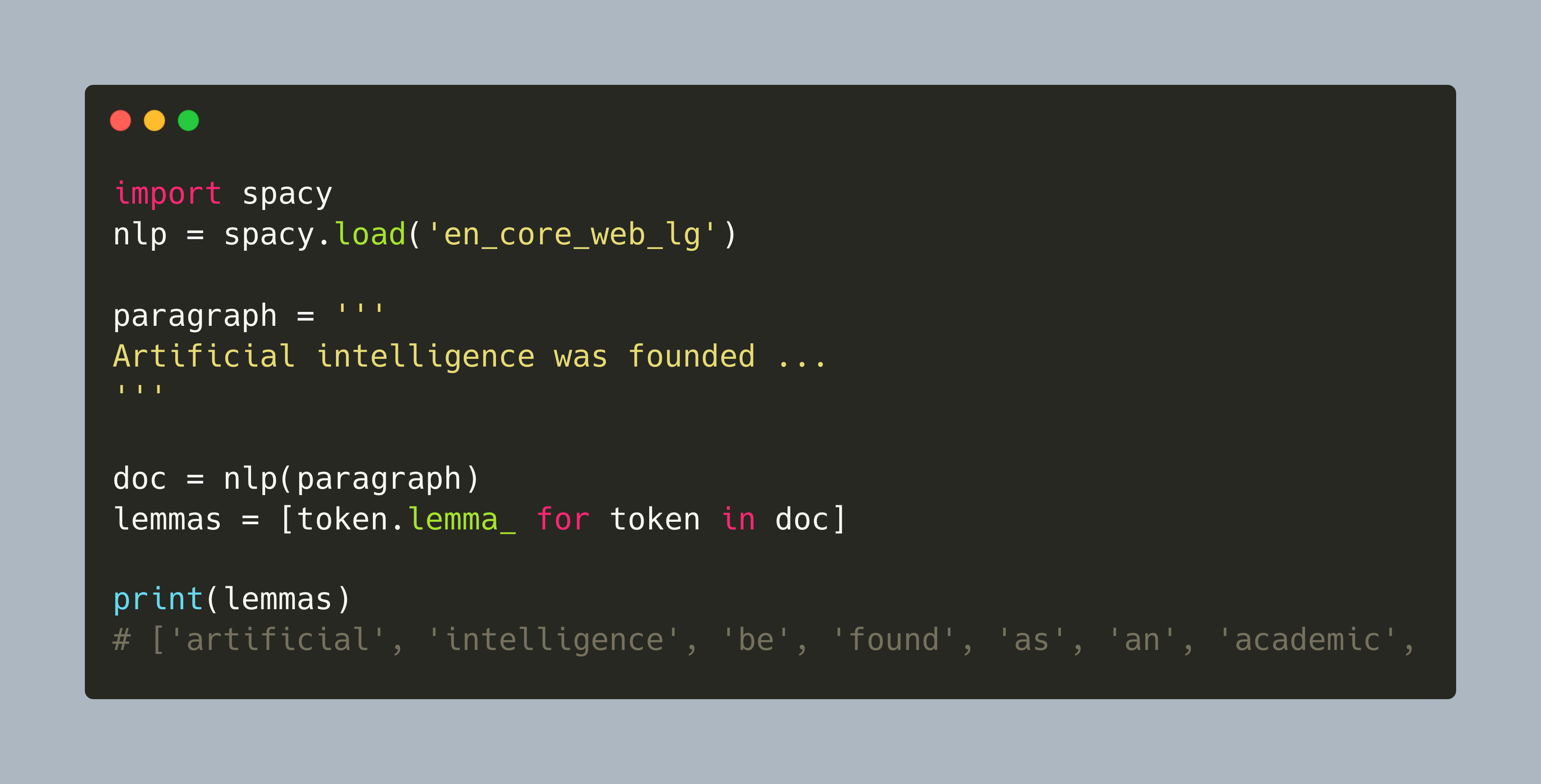

Lematization

Lemmas are generated automatically; they are properties of the tokens.

doc = nlp(paragraph)

lemmas = [token.lemma_ for token in doc]

print(lemmas)

# ['artificial', 'intelligence', 'be', 'found', 'as', 'an', 'academic',

The configurable component applies lemmatization either on rules or lookup. To see which mode the built-in model uses, execute the following code:

lemmatizer = nlp.get_pipe("lemmatizer")

print(lemmatizer.mode)

# 'rule'

There is no stemming in Spacy.

Text Syntax

Part-of-Speech Tagging

In Spacy, part-of-speech tags come in two flavors. The POS property is an absolute category to which a token belongs, characterized as a universal POS tag. The TAG property is a more nuanced category that builds upon the dependency parsing and named entity recognition.

Following table lists the POS classes.

| Token | Description |

|---|---|

| ADJ | adjective |

| ADP | adposition |

| ADV | adverb |

| AUX | auxiliary |

| CCONJ | coordinating conjunction |

| DET | determiner |

| INTJ | interjection |

| NOUN | noun |

| NUM | numeral |

| PART | particle |

| PRON | pronoun |

| PROPN | proper noun |

| PUNCT | punctuation |

| SCONJ | subordinating conjunction |

| SYM | symbol |

| VERB | verb |

| X | other |

For the TAG classes, I could not find a definitive explanation in the documentation. However, this Stackoverflow thread hints that the TAG refer to classes used in academic papers about dependency parsing.

To see the POS and TAG associated with the tokens, run the following code:

doc = nlp(paragraph)

for token in doc:

print(f'{token.text:<20}{token.pos_:>5}{token.tag_:>5}')

#Artificial ADJ JJ

#intelligence NOUN NN

#was AUX VBD

#founded VERB VBN

#as ADP IN

#an DET DT

#academic ADJ JJ

#discipline NOUN NN

Text Semantics

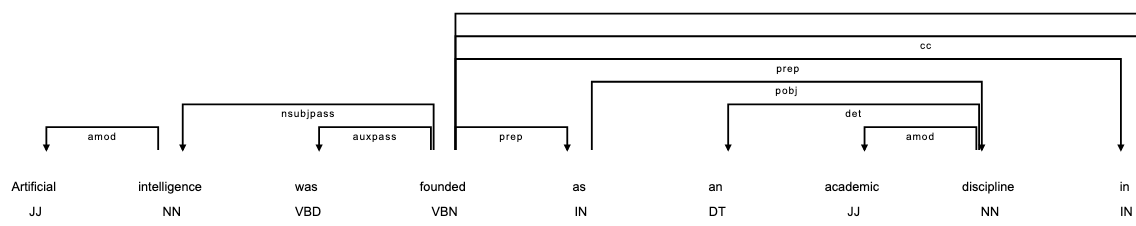

Dependency Parsing

Dependency parsing checks the contextual relationships of words and chunks of words. This step greatly enhances the machine readably semantic information from a text.

Spacy provides both a textual representation as well as a graphical representation of the dependencies.

doc = nlp(paragraph)

for token in doc:

print(f'{token.text:<20}{token.dep_:<15}{token.head.text:<20}')

# Artificial amod intelligence

# intelligence nsubjpass founded

# was auxpass founded

# founded ROOT founded

# as prep founded

# an det discipline

# academic amod discipline

# discipline pobj as

# in prep founded

To render those relationships graphically, run the following commands.

from spacy import displacy

nlp = spacy.load("en_core_web_lg")

displacy.serve(doc, style="dep", options={"fine_grained": True, "compact": True})

It will output a structure as shown here:

Note that the capabilities of this processing step are limited to the language models original training corpus. Spacy offers two ways to enhance the parsing. First, models can be training from scratch. Second, recent releases of Spacy provide transformer models, and these can be fine-tuned to work with a more domain specific corpora as well.

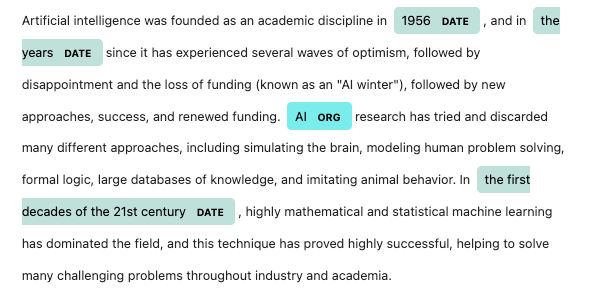

Named Entity Recognition

Entities inside a text refer to persons, organizations or objects and can be detected by Spacy for a processed document.

Recognized entities are part of a parsed document and can be access by the ents property.

doc = nlp(paragraph)

for token in doc.ents:

print(f'{token.text:<40}{token.label_:<15}')

# 1956 DATE

# the years DATE

# AI ORG

# the first decades of the 21st century DATE

Alternatively, they can be visualized.

Similar to the dependency parsing, the result of this step is very dependent on its training data. For example, if used on book texts, it might fail to identify the name of its characters. To help with this case, a custom KnowledgeBase object can be created, which will be used to identify possible candidates for named entities during the text processing.

Document Semantics

Classification

Spacy does not include classification or categorization algorithms by itself, but other open-source projects extend Spacy for executing machine learning tasks.

Just to show one example: The Berttopic extension is an out-of-the-box document classification projects that even provides a visual representation.

This project is installed by running pip install "bertopic[spacy]". Applying this project to a set of 200 articles gives the following result:

import numpy as np

import pandas as pd

from bertopic import BERTopic

X = pd.read_pickle('ml29_01.pkl')

docs = X['preprocessed'].values

topic_model = BERTopic()

topics, probs = topic_model.fit_transform(docs)

print(topic_model.get_topic_info())

# Topic Count Name

# -1 30 -1_artificial_intelligence_machine

# 1 22 49_space_lunar_mission

Additional Features

Corpus Management

Spacy defines a Corpus object, but it is used to read JSON or plaintext files for training custom Spacy language models.

The only property of all processed texts that I could find in the documentation is vocab, a lookup table for all words encountered in the processed text.

Text Vectors

For all built-in model of the category md or lg, word vectors are included. In Spacy, individual tokens, spans (user-defined slices of a documents), or complete documents can be represented as vectors.

Here is an example:

nlp = spacy.load("en_core_web_lg")

vectors = [(token.text, token.vector_norm) for token in doc if token.has_vector]

print(vectors)

# [('Artificial', 8.92717), ('intelligence', 6.9436903), ('was', 10.1967945), ('founded', 8.210244), ('as', 7.7554812), ('an', 8.042635), ('academic', 8.340115), ('discipline', 6.620854),

span = doc[0:10]

print(span)

# Artificial intelligence was founded as an academic discipline in 1956

print(span.vector_norm)

# 3.0066288

print(doc.vector_norm)

# 2.037331438809547

The documentation does not disclose which specific tokenization method is being used. The non-normalized tokens have 300 dimensions, which could hint at that the FastText tokenization method is being used:

token = doc[0]

print(token.vector.dtype, token.vector.shape)

# float32 (300,)

print((token.text, token.vector))

#'Artificial',

# array([-1.6952 , -1.5868 , 2.6415 , 1.4848 , 2.3921 , -1.8911 ,

# 1.0618 , 1.4815 , -2.4829 , -0.6737 , 4.7181 , 0.92018 ,

# -3.1759 , -1.7126 , 1.8738 , 3.9971 , 4.8884 , 1.2651 ,

# 0.067348, -2.0842 , -0.91348 , 2.5103 , -2.8926 , 0.92028 ,

# 0.24271 , 0.65422 , 0.98157 , -2.7082 , 0.055832, 2.2011 ,

# -1.8091 , 0.10762 , 0.58432 , 0.18175 , 0.8636 , -2.9986 ,

# 4.1576 , 0.69078 , -1.641 , -0.9626 , 2.6582 , 1.2442 ,

# -1.7863 , 2.621 , -5.8022 , 3.4996 , 2.2065 , -0.6505 ,

# 0.87368 , -4.4462 , -0.47228 , 1.7362 , -2.1957 , -1.4855 ,

# -3.2305 , 4.9904 , -0.99718 , 0.52584 , 1.0741 , -0.53208 ,

# 3.2444 , 1.8493 , 0.22784 , 0.67526 , 2.5435 , -0.54488 ,

# -1.3659 , -4.7399 , 1.8076 , -1.4879 , -1.1604 , 0.82441 ,

Finally, Spacy offers an option to provide user-defined word vectors for a pipeline.

Custom NLP Pipeline

The reference model for pipelines is included in the pretrained language models. They encompass the following:

- Tokenizer

- Tagger

- Dependency Parser

- Entity Recognizer

- Lemmatizer

The pipeline steps need to be defined in a project-specific configuration file. The full pipeline for all of these steps is defined as follows:

[nlp]

pipeline = ["tok2vec", "tagger", "parser", "ner", "lemmatizer"]

This pipeline can be extended. For example, to add a text categorization step, simply append the pipeline declaration with textcat, and provide an implementation.

There are several pipeline components that can be used, as well as the option to add custom components that will interact with and enrich the document representation achieved by Spacy.

Also, external custom models or model components can be incorporated into Spacy, for example exchanging the word-vector representation or using any transformer-based models which is based on PyTorch or the external transformers library.

Model Training

Based on the pipeline object and an extensive configuration file, new models can be trained. The Quickstart guide includes a UI widget in which the desired pipeline steps and local file paths can be created interactively. The training data needs to have the form of Python data objects that include the desired properties that should be trained. Here is an example from the official documentation for training named entity recognition.

# Source: Spacy, Training Pipelines & Models, https://spacy.io/usage/training#training-data

nlp = spacy.blank("en")

training_data = [

("Tokyo Tower is 333m tall.", [(0, 11, "BUILDING")]),

]

For the training data itself, several converters are supported, such as JSON, Universal Dependencies, or Named Entity Recognition formats like IOB or BILUO.

To facilitate training, a Corpus object can be used to iterate over JSON or PlainText documents.

Summary

Spacy is a state-of-the art NLP library. By using one of the pre-trained models, all basic text processing tasks (tokenization, lemmatization, part-of-speech tagging) and text semantics tasks (dependency parsing, named entity recognition) are applied. All models are created through a pipeline object, and this object can be used to customize any of these steps, for example providing custom tokenizer or swap the word-vector component. Additionally, you can use other transformer-based models, and extend the pipeline with tasks for text classification.

Previous: Python NLP Library: NLTK