Flair is a modern NLP library. It supports all core NLP tasks, from text processing to document semantics. Flair uses modern transformer neural network models for several tasks and integrates other Python libraries, which makes it possible to choose specific models. Its clear API, its data structures for annotating text, and its multi-language support make it a good candidate for NLP projects.

This article helps you get started with Flair. After the installation, you will learn how to apply text processing and text syntax tasks, and then see the rich support for text and document semantics.

The technical context of this article is Python v3.11 and Flair v0.12.2. All examples should work with newer versions too.

Installation

The Flair library can be installed via pip:

python3 -m pip install flair

The installation can take up to 30 minutes because several other libraries need to be installed as well. Also, when working with sequencers, taggers or datasets, additional data will need to be downloaded.
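Because the examples in this article are tied to a specific Flair version, it can help to confirm what pip actually installed. The following is a minimal sketch that uses only the Python standard library; the helper name `installed_version` is made up for this illustration.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a package, or None if it is missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


flair_version = installed_version("flair")
print(flair_version or "flair is not installed")
```

If the printed version differs from v0.12.2, some outputs shown in this article may look slightly different.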

NLP Tasks

Flair supports all core NLP tasks, and provides additional features to create word vectors and to train custom sequencers.

- Text Processing
  - Tokenization
  - Lemmatization
  - Syntactic Chunking
- Text Syntax
  - Part-of-Speech Tagging
- Text Semantics
  - Semantic Frames Parsing
  - Named Entity Recognition
- Document Semantics
  - Sentiment Analysis
  - Language Toxicity Analysis

Furthermore, Flair supports these additional features:

- Datasets

- Corpus Management

- Text Vectors

- Model Training

Text Processing

Tokenization

Tokenization is applied in Flair automatically. The basic data structure Sentence wraps text of any length and splits it into tokens.

from flair.data import Sentence

# Source: Wikipedia, Artificial Intelligence, https://en.wikipedia.org/wiki/Artificial_intelligence

paragraph = '''Artificial intelligence was founded as an academic discipline in 1956, and in the years since it has experienced several waves of optimism, followed by disappointment and the loss of funding (known as an "AI winter"), followed by new approaches, success, and renewed funding. AI research has tried and discarded many different approaches, including simulating the brain, modeling human problem solving, formal logic, large databases of knowledge, and imitating animal behavior. In the first decades of the 21st century, highly mathematical and statistical machine learning has dominated the field, and this technique has proved highly successful, helping to solve many challenging problems throughout industry and academia.'''

doc = Sentence(paragraph)

tokens = [token for token in doc]

print(tokens)

# [Token[0]: "Artificial", Token[1]: "intelligence", Token[2]: "was", Token[3]: "founded", Token[4]: "as", Token[5]: "an", Token[6]: "academic", Token[7]: "discipline",

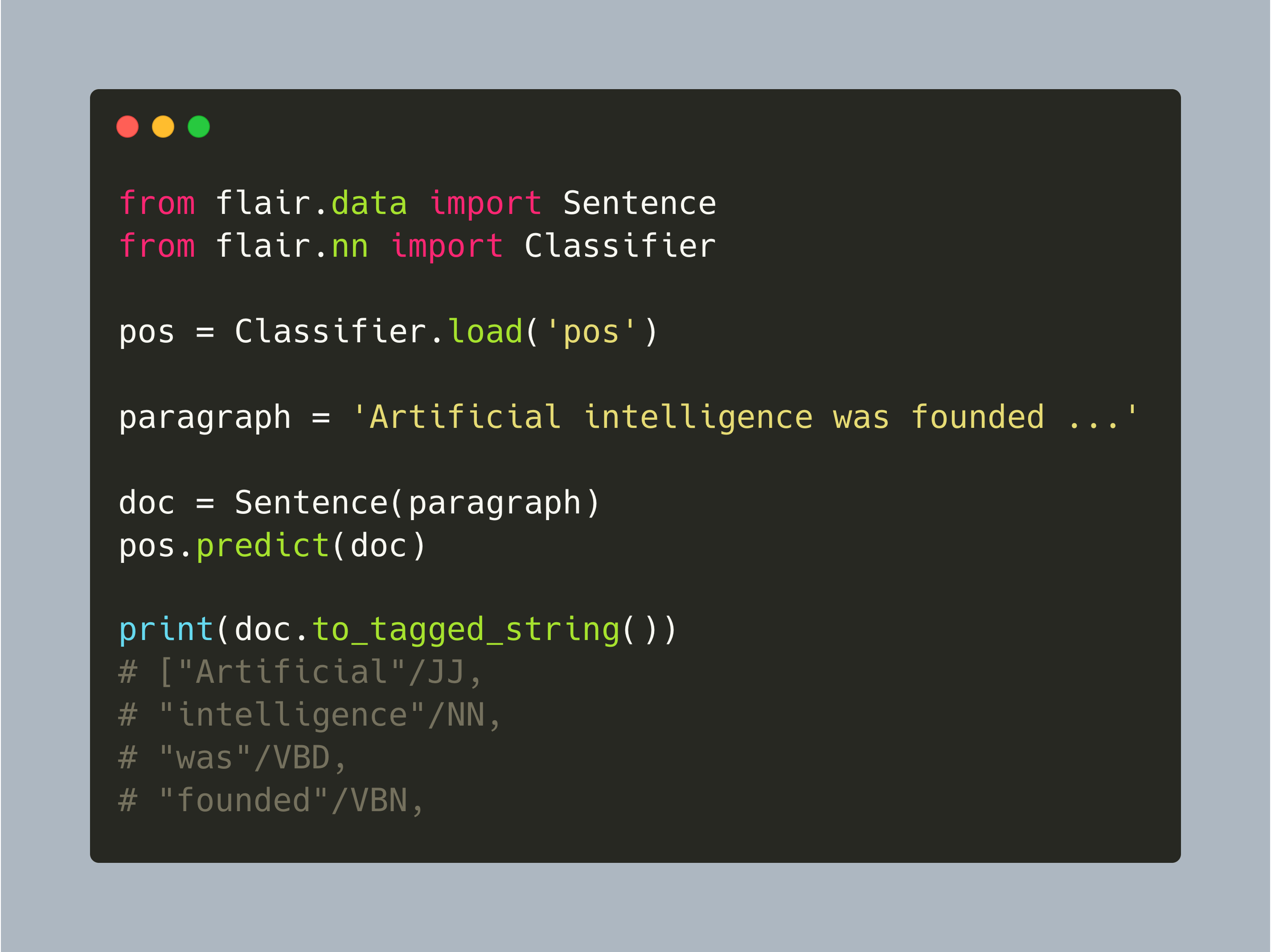
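To make the idea of tokenization concrete without any model download, here is a deliberately simplified sketch based on a regular expression. It is not Flair's tokenizer and will split some texts differently; the function name `simple_tokenize` is an assumption made for this illustration.

```python
import re
from typing import List


def simple_tokenize(text: str) -> List[str]:
    """Split text into word tokens and single punctuation tokens."""
    # \w+ matches runs of letters, digits and underscores;
    # [^\w\s] matches any single character that is neither word nor whitespace.
    return re.findall(r"\w+|[^\w\s]", text)


sample = "Artificial intelligence was founded as an academic discipline in 1956."
sample_tokens = simple_tokenize(sample)
print(sample_tokens)
```

As in the Flair output above, punctuation ends up as a token of its own, so the final period is separated from the year.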

Part-of-Speech Tagging

Detecting additional syntactic (and semantic) information in texts involves the use of Classifiers, a Flair-specific data structure that incorporates pre-defined transformer models for specific tasks.

For POS tagging, Flair provides 14 different models, supporting English, German, Portuguese and more languages.

The basic, English language classifier pos defines these types:

| Tag | Meaning |

|---|---|

| ADD | Email |

| AFX | Affix |

| CC | Coordinating conjunction |

| CD | Cardinal number |

| DT | Determiner |

| EX | Existential there |

| FW | Foreign word |

| HYPH | Hyphen |

| IN | Preposition or subordinating conjunction |

| JJ | Adjective |

| JJR | Adjective, comparative |

| JJS | Adjective, superlative |

| LS | List item marker |

| MD | Modal |

| NFP | Superfluous punctuation |

| NN | Noun, singular or mass |

| NNP | Proper noun, singular |

| NNPS | Proper noun, plural |

| NNS | Noun, plural |

| PDT | Predeterminer |

| POS | Possessive ending |

| PRP | Personal pronoun |

| PRP$ | Possessive pronoun |

| RB | Adverb |

| RBR | Adverb, comparative |

| RBS | Adverb, superlative |

| RP | Particle |

| SYM | Symbol |

| TO | to |

| UH | Interjection |

| VB | Verb, base form |

| VBD | Verb, past tense |

| VBG | Verb, gerund or present participle |

| VBN | Verb, past participle |

| VBP | Verb, non-3rd person singular present |

| VBZ | Verb, 3rd person singular present |

| WDT | Wh-determiner |

| WP | Wh-pronoun |

| WP$ | Possessive wh-pronoun |

| WRB | Wh-adverb |

| XX | Unknown |

The following snippet shows how to use the POS sequencer:

from flair.data import Sentence

from flair.nn import Classifier

pos = Classifier.load('pos')

# SequenceTagger predicts: Dictionary with 53 tags: <unk>, O, UH, ,, VBD, PRP, VB, PRP$, NN, RB, ., DT, JJ, VBP, VBG, IN, CD, NNS, NNP, WRB, VBZ, WDT, CC, TO, MD, VBN, WP, :, RP, EX, JJR, FW, XX, HYPH, POS, RBR, JJS, PDT, NNPS, RBS, AFX, WP$, -LRB-, -RRB-, ``, '', LS, $, SYM, ADD

doc = Sentence(paragraph)

pos.predict(doc)

print(doc.to_tagged_string())

# ["Artificial"/JJ,

# "intelligence"/NN,

# "was"/VBD,

# "founded"/VBN,

# "as"/IN,

# "an"/DT,

# "academic"/JJ,
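The raw tags are compact but not very readable. As a small sketch, the (token, tag) pairs from the output above can be copied by hand into plain tuples and joined with descriptions from the tag table. The dictionary below only contains the handful of tags needed here, and the helper name `describe_tags` is hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Subset of the tag table shown earlier in this section.
POS_DESCRIPTIONS: Dict[str, str] = {
    "JJ": "Adjective",
    "NN": "Noun, singular or mass",
    "VBD": "Verb, past tense",
    "VBN": "Verb, past participle",
    "IN": "Preposition or subordinating conjunction",
    "DT": "Determiner",
}

# Copied by hand from the printed output above.
tagged: List[Tuple[str, str]] = [
    ("Artificial", "JJ"),
    ("intelligence", "NN"),
    ("was", "VBD"),
    ("founded", "VBN"),
    ("as", "IN"),
    ("an", "DT"),
    ("academic", "JJ"),
]


def describe_tags(pairs: List[Tuple[str, str]]) -> List[Tuple[str, str, str]]:
    """Attach a human-readable description to every (token, tag) pair."""
    return [(tok, tag, POS_DESCRIPTIONS.get(tag, "Unknown")) for tok, tag in pairs]


described = describe_tags(tagged)
tag_counts = Counter(tag for _, tag in tagged)

for tok, tag, meaning in described:
    print(f"{tok:<14}{tag:<5}{meaning}")
print(tag_counts.most_common(1))
```

Counting tags this way gives a quick impression of the grammatical makeup of a text.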

Syntactic Chunking

Chunking is the process of extracting coherent sets of tokens that have a distinct meaning, for example noun phrases, prepositional phrases, adjective phrases and more.

The chunk classifier is used for this task. Here is an example:

from flair.data import Sentence

from flair.nn import Classifier

chunk = Classifier.load('chunk')

# SequenceTagger predicts: Dictionary with 47 tags: O, S-NP, B-NP, E-NP, I-NP, S-VP, B-VP, E-VP, I-VP, S-PP, B-PP, E-PP, I-PP, S-ADVP, B-ADVP, E-ADVP, I-ADVP, S-SBAR, B-SBAR, E-SBAR, I-SBAR, S-ADJP, B-ADJP, E-ADJP, I-ADJP, S-PRT, B-PRT, E-PRT, I-PRT, S-CONJP, B-CONJP, E-CONJP, I-CONJP, S-INTJ, B-INTJ, E-INTJ, I-INTJ, S-LST, B-LST, E-LST, I-LST, S-UCP, B-UCP, E-UCP, I-UCP, <START>, <STOP>

doc = Sentence(paragraph)

chunk.predict(doc)

print(doc.to_tagged_string())

# ["Artificial intelligence"/NP,

# "was founded"/VP,

# "as"/PP,

# "an academic discipline"/NP,

# "in"/PP,

# "1956"/NP,

# "and"/PP,

# "in"/PP,

# "the years"/NP,
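The tag dictionary printed when loading the chunk model uses the prefixes S-, B-, I- and E- (single, begin, inside, end), while the final output shows merged phrases. The following sketch shows how such per-token tags can be merged into phrases. It is an illustration of the tagging scheme, not Flair's internal code, and the per-token tags in the example are written by hand to match the output above.

```python
from typing import List, Optional, Tuple


def decode_bioes(tokens: List[str], tags: List[str]) -> List[Tuple[str, str]]:
    """Merge BIOES-tagged tokens into (phrase, label) spans."""
    spans: List[Tuple[str, str]] = []
    current: List[str] = []
    current_label: Optional[str] = None

    for token, tag in zip(tokens, tags):
        if tag == "O":
            # Outside of any chunk: discard an unfinished span.
            current, current_label = [], None
            continue
        prefix, label = tag.split("-", 1)
        if prefix == "S":
            # Single-token chunk.
            spans.append((token, label))
            current, current_label = [], None
        elif prefix == "B":
            # Start of a multi-token chunk.
            current, current_label = [token], label
        elif prefix in ("I", "E") and current_label == label:
            current.append(token)
            if prefix == "E":
                # End of the chunk: emit the merged phrase.
                spans.append((" ".join(current), label))
                current, current_label = [], None
        else:
            # Malformed sequence: drop the partial span.
            current, current_label = [], None
    return spans


tokens = ["Artificial", "intelligence", "was", "founded",
          "as", "an", "academic", "discipline"]
tags = ["B-NP", "E-NP", "B-VP", "E-VP", "S-PP", "B-NP", "I-NP", "E-NP"]
chunks = decode_bioes(tokens, tags)
print(chunks)
```

The resulting phrases correspond to the first four chunks in the Flair output above.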

Text Semantics

Semantic Frames Parsing

Semantic framing is an NLP technique that marks sequences of tokens with their semantic meaning. This is helpful for determining the sentiment and the topic of a sentence.

Just as before, semantic framing is applied by loading a specific classifier. Although this feature is marked as experimental, it worked fine with Flair v0.12.2 at the time of writing.

frame = Classifier.load('frame')

# SequenceTagger predicts: Dictionary with 4852 tags: <unk>, be.01, be.03, have.01, say.01, do.01, have.03, do.02, be.02, know.01, think.01, come.01, see.01, want.01, go.02, ...

doc = Sentence(paragraph)

frame.predict(doc)

print(doc.to_tagged_string())

# ["was"/be.03, "founded"/found.01, "has"/have.01, "experienced"/experience.01, "waves"/wave.04, "followed"/follow.01, "disappointment"/disappoint.01,
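The labels in the output above follow a lemma.sense pattern (for example found.01), which resembles PropBank-style frame identifiers. Assuming that pattern holds, a label can be split into its lemma and sense number for further processing. The pairs below are copied by hand from the output, and `parse_frame` is a hypothetical helper.

```python
from typing import List, Tuple

# Copied by hand from the printed output above.
frame_output: List[Tuple[str, str]] = [
    ("was", "be.03"),
    ("founded", "found.01"),
    ("has", "have.01"),
    ("experienced", "experience.01"),
    ("waves", "wave.04"),
    ("followed", "follow.01"),
    ("disappointment", "disappoint.01"),
]


def parse_frame(label: str) -> Tuple[str, int]:
    """Split a label such as 'found.01' into ('found', 1)."""
    lemma, _, sense = label.rpartition(".")
    return lemma, int(sense)


parsed = [(token, *parse_frame(label)) for token, label in frame_output]
for token, lemma, sense in parsed:
    print(f"{token:<16}lemma={lemma:<12}sense={sense}")
```

Grouping by lemma instead of by surface token makes it easier to see that "was" and a later "is" would refer to the same underlying verb.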

Named Entity Recognition

Named entities are, for example, persons, places, or dates mentioned in a sentence. Flair provides several NER models.

Let’s compare the default ner model with ner-ontonotes-fast, which predicts a larger set of entity types.

#Source: Wikipedia, Artificial Intelligence, https://en.wikipedia.org/wiki/Artificial_intelligence

paragraph = '''

In 2011, in a Jeopardy! quiz show exhibition match, IBM's question answering system, Watson, defeated the two greatest Jeopardy! champions, Brad Rutter and Ken Jennings, by a significant margin.

'''

ner = Classifier.load('ner')

# SequenceTagger predicts: Dictionary with 20 tags: <unk>, O, S-ORG, S-MISC, B-PER, E-PER, S-LOC, B-ORG, E-ORG, I-PER, S-PER, B-MISC, I-MISC, E-MISC, I-ORG, B-LOC, E-LOC, I-LOC, <START>, <STOP>

doc = Sentence(paragraph)

ner.predict(doc)

print(doc.get_spans('ner'))

# [Span[5:7]: "Jeopardy!" → MISC (0.5985)

# Span[12:13]: "IBM" → ORG (0.998)

# Span[18:19]: "Watson" → PER (1.0)

# Span[28:30]: "Brad Rutter" → PER (1.0)

# Span[31:33]: "Ken Jennings" → PER (0.9999)]

With the ner model, all persons and the organization are recognized.

ner = Classifier.load('ner-ontonotes-fast')

# SequenceTagger predicts: Dictionary with 75 tags: O, S-PERSON, B-PERSON, E-PERSON, I-PERSON, S-GPE, B-GPE, E-GPE, I-GPE, S-ORG, B-ORG, E-ORG, I-ORG, S-DATE, B-DATE, E-DATE, I-DATE, S-CARDINAL, B-CARDINAL, E-CARDINAL, I-CARDINAL, S-NORP, B-NORP, E-NORP, I-NORP, S-MONEY, B-MONEY, E-MONEY, I-MONEY, S-PERCENT, B-PERCENT, E-PERCENT, I-PERCENT, S-ORDINAL, B-ORDINAL, E-ORDINAL, I-ORDINAL, S-LOC, B-LOC, E-LOC, I-LOC, S-TIME, B-TIME, E-TIME, I-TIME, S-WORK_OF_ART, B-WORK_OF_ART, E-WORK_OF_ART, I-WORK_OF_ART, S-FAC

doc = Sentence(paragraph)

ner.predict(doc)

print(list(doc.get_labels()))

# [Span[1:2]: "2011"'/'DATE' (0.9984)

# Span[12:13]: "IBM"'/'ORG' (1.0)

# Span[18:19]: "Watson"'/'PERSON' (0.9913)

# Span[22:23]: "two"'/'CARDINAL' (0.9995)

# Span[24:25]: "Jeopardy"'/'WORK_OF_ART' (0.938)

# Span[28:30]: "Brad Rutter"'/'PERSON' (0.9939)

# Span[31:33]: "Ken Jennings"'/'PERSON' (0.9914)]

With ner-ontonotes-fast, numbers, dates, and even Jeopardy are recognized.
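To see the difference between the two models at a glance, the printed results can be compared as sets. This sketch copies the entity texts and labels by hand from the two outputs above into plain tuples, so it runs without loading any model.

```python
# Entities copied by hand from the output of the default 'ner' model.
ner_default = [
    ("Jeopardy!", "MISC"),
    ("IBM", "ORG"),
    ("Watson", "PER"),
    ("Brad Rutter", "PER"),
    ("Ken Jennings", "PER"),
]

# Entities copied by hand from the output of 'ner-ontonotes-fast'.
ner_ontonotes = [
    ("2011", "DATE"),
    ("IBM", "ORG"),
    ("Watson", "PERSON"),
    ("two", "CARDINAL"),
    ("Jeopardy", "WORK_OF_ART"),
    ("Brad Rutter", "PERSON"),
    ("Ken Jennings", "PERSON"),
]

default_texts = {text for text, _ in ner_default}
ontonotes_texts = {text for text, _ in ner_ontonotes}

shared = sorted(default_texts & ontonotes_texts)
only_default = sorted(default_texts - ontonotes_texts)
only_ontonotes = sorted(ontonotes_texts - default_texts)

print("Found by both:       ", shared)
print("Only default ner:    ", only_default)
print("Only ontonotes model:", only_ontonotes)
```

Note that the two models also disagree on span boundaries: one marks "Jeopardy!" including the exclamation mark, the other only "Jeopardy", so a plain text comparison treats them as different entities.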

Document Semantics

Sentiment Analysis

Flair's sentiment analysis is typically applied to a sentence, but by wrapping a whole text in the Sentence data structure, it can be applied to a whole text as well. It will output a binary classification of positive or negative for a sentence.

# paragraph refers to the Artificial Intelligence excerpt from the Tokenization section

# Source: Wikipedia, Artificial Intelligence, https://en.wikipedia.org/wiki/Artificial_intelligence

sentiment = Classifier.load('sentiment')

doc = Sentence(paragraph)

sentiment.predict(doc)

print(doc)

# Sentence[124]: "Artificial intelligence was founded ..." → POSITIVE (0.9992)

Language Toxicity Analysis

Flair provides a model for detecting language toxicity, but for German only. It was trained on a specific dataset that can be downloaded from Heidelberg University.

The following snippet detects the usage of offensive language.

paragraph = '''

Was für Bullshit.

'''

toxic_language = Classifier.load('de-offensive-language')

doc = Sentence(paragraph)

toxic_language.predict(doc)

print(list(doc.get_labels()))

# Sentence[16]: "Was für Bullshit" → OFFENSE (0.9772)

Additional Features

Datasets

Flair includes several datasets and corpora; the complete list is available in the Flair documentation.

Some of these datasets were used to train models for the Flair-specific tasks, for example for NER or relation extraction. Other datasets are the GLUE language benchmark and text collections.

Here is an example of how to load a text classification dataset for detecting emotions in Reddit posts.

import flair.datasets

data = flair.datasets.GO_EMOTIONS()

print(data)

len(data.train)

# 43410

data.train[42000]

# This is quite common on people on such forums. I have a feeling they are a tad sarcastic." → APPROVAL (1.0); NEUTRAL (1.0)

Corpus Management

In Flair, the Corpus object represents documents prepared for training a new tagger or classifier. This object consists of three distinct collections called train, dev and test, and each contains Sentence objects.
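The three-way split can be illustrated with a small stand-in written in plain Python. This is only a sketch of the idea: `SimpleCorpus` and `split_corpus` are hypothetical names, plain strings stand in for Sentence objects, and the 80/10/10 ratio is an assumption chosen for the example.

```python
import random
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class SimpleCorpus:
    """Stand-in for a corpus with train, dev and test collections."""
    train: List[str]
    dev: List[str]
    test: List[str]


def split_corpus(sentences: Sequence[str],
                 dev_fraction: float = 0.1,
                 test_fraction: float = 0.1,
                 seed: int = 42) -> SimpleCorpus:
    """Shuffle the sentences reproducibly and cut them into three parts."""
    shuffled = list(sentences)
    random.Random(seed).shuffle(shuffled)
    n_dev = round(len(shuffled) * dev_fraction)
    n_test = round(len(shuffled) * test_fraction)
    return SimpleCorpus(
        train=shuffled[n_dev + n_test:],
        dev=shuffled[:n_dev],
        test=shuffled[n_dev:n_dev + n_test],
    )


sentences = [f"Sentence number {i}." for i in range(100)]
corpus = split_corpus(sentences)
print(len(corpus.train), len(corpus.dev), len(corpus.test))
```

The train part is used to fit the model, the dev part to monitor progress during training, and the test part only for the final evaluation.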

Text Vectors

Flair supports different vectorization schemes: pretrained word vectors, like GloVe, and word vectors from different transformer models, loaded via the transformers library.

Let’s see how to embed the tokens of a paragraph with these two methods.

from flair.embeddings import WordEmbeddings

embeddings = WordEmbeddings('glove')

doc = Sentence(paragraph)

embeddings.embed(doc)

for token in doc:
    print(token)
    print(token.embedding)

# Token[0]: "Artificial"

# tensor([ 0.3455, 0.3144, -0.0313, 0.6368, 0.2727, -0.6197, -0.5177, -0.2368,

# -0.0166, 0.0344, -0.1542, 0.0435, 0.7298, 0.1112, 1.3430, ...,

# Token[1]: "intelligence"

# tensor([-0.3110, -0.4329, 0.7773, -0.3112, 0.0529, -0.8502, -0.3537, -0.7053,

# 0.0845, 0.8877, 0.8353, -0.4164, 0.3670, 0.6083, 0.0085, ...,

And for the Transformer embeddings:

from flair.embeddings import TransformerWordEmbeddings

embedding = TransformerWordEmbeddings('bert-base-uncased')

doc = Sentence(paragraph)

embedding.embed(doc)

for token in doc:
    print(token)
    print(token.embedding)

# Token[0]: "Artificial"

# tensor([ 1.0723e-01, 9.7490e-02, -6.8251e-01, -6.4322e-02, 6.3791e-01,

# 3.8582e-01, -2.0940e-01, 1.4441e-01, 2.4147e-01, ...)

# Token[1]: "intelligence"

# tensor([-9.9221e-02, -1.9465e-01, -4.9403e-01, -4.1582e-01, 1.4902e+00,

# 3.6126e-01, 3.6648e-01, 3.7578e-01, -4.8785e-01, ...)

Furthermore, instead of individual tokens, complete documents can be vectorized as well with document embeddings.
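One simple way to get from word vectors to a document vector is to average the token vectors (mean pooling), after which documents can be compared with cosine similarity. The sketch below shows this with plain Python lists. The 4-dimensional vectors are made up for illustration and are not real GloVe or BERT values, and the function names are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def mean_pool(vectors: List[Vector]) -> Vector:
    """Average a list of equally sized token vectors into one vector."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy token vectors for three short "documents" (invented values).
doc_a = [[1.0, 0.0, 2.0, 0.0], [3.0, 0.0, 0.0, 0.0]]
doc_b = [[2.0, 0.0, 1.0, 0.0]]
doc_c = [[0.0, 5.0, 0.0, 1.0]]

vec_a, vec_b, vec_c = mean_pool(doc_a), mean_pool(doc_b), mean_pool(doc_c)

print(round(cosine_similarity(vec_a, vec_b), 4))  # same direction
print(round(cosine_similarity(vec_a, vec_c), 4))  # orthogonal
```

With real embeddings, the same two functions work on `token.embedding.tolist()` values, although tensor operations are considerably faster for long texts.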

Model Training

Flair includes functions to train new models that can be used as sequence taggers or text classifiers. It provides loading of datasets, model definition, training configuration and execution. For most of these steps, the transformers library is used.

Here is an example from the official documentation that trains a part-of-speech tagger on the English Universal Dependencies corpus (UD_ENGLISH).

# Source: FlairNLP, How model Training works in Flair, https://flairnlp.github.io/docs/tutorial-training/how-model-training-works#example-training-a-part-of-speech-tagger

from flair.datasets import UD_ENGLISH

from flair.embeddings import WordEmbeddings

from flair.models import SequenceTagger

from flair.trainers import ModelTrainer

# 1. load the corpus

corpus = UD_ENGLISH().downsample(0.1)

print(corpus)

#Corpus: 1254 train + 200 dev + 208 test sentences

# 2. what label do we want to predict?

label_type = 'upos'

# 3. make the label dictionary from the corpus

label_dict = corpus.make_label_dictionary(label_type=label_type)

print(label_dict)

# Dictionary created for label 'upos' with 18 values: NOUN (seen 3642 times), VERB (seen 2375 times), PUNCT (seen 2359 times), ADP (seen 1865 times), PRON (seen 1852 times), DET (seen 1721 times), ADJ (seen 1321 times), AUX (seen 1269 times), PROPN (seen 1203 times), ADV (seen 1083 times), CCONJ (seen 700 times), PART (seen 611 times), SCONJ (seen 405 times), NUM (seen 398 times), INTJ (seen 75 times), X (seen 63 times), SYM (seen 60 times)

# 4. initialize embeddings

embeddings = WordEmbeddings('glove')

# 5. initialize sequence tagger

model = SequenceTagger(hidden_size=256,
                       embeddings=embeddings,
                       tag_dictionary=label_dict,
                       tag_type=label_type)

print(model)

# Model: "SequenceTagger(

# (embeddings): WordEmbeddings(

# 'glove'

# (embedding): Embedding(400001, 100)

# )

# (word_dropout): WordDropout(p=0.05)

# (locked_dropout): LockedDropout(p=0.5)

# (embedding2nn): Linear(in_features=100, out_features=100, bias=True)

# (rnn): LSTM(100, 256, batch_first=True, bidirectional=True)

# (linear): Linear(in_features=512, out_features=20, bias=True)

# (loss_function): ViterbiLoss()

# (crf): CRF()

# )"

# 6. initialize trainer

trainer = ModelTrainer(model, corpus)

# 7. start training

trainer.train('resources/taggers/example-upos',
              learning_rate=0.1,
              mini_batch_size=32,
              max_epochs=10)

# Parameters:

# - learning_rate: "0.100000"

# - mini_batch_size: "32"

# - patience: "3"

# - anneal_factor: "0.5"

# - max_epochs: "10"

# - shuffle: "True"

# - train_with_dev: "False"

# epoch 1 - iter 4/40 - loss 3.12352573 - time (sec): 1.06 - samples/sec: 2397.20 - lr: 0.100000

# ...

# epoch 1 - iter 4/40 - loss 3.12352573 - time (sec): 1.06 - samples/sec: 2397.20 - lr: 0.100000

# Results:

# - F-score (micro) 0.7877

# - F-score (macro) 0.6621

# - Accuracy 0.7877

# By class:

# precision recall f1-score support

# NOUN 0.7231 0.8495 0.7812 412

# PUNCT 0.9082 0.9858 0.9454 281

# VERB 0.7048 0.7403 0.7221 258

# PRON 0.9070 0.8986 0.9028 217

# ADP 0.8377 0.8791 0.8579 182

# DET 1.0000 0.8757 0.9338 169

# ADJ 0.6087 0.6490 0.6282 151

# PROPN 0.7538 0.5568 0.6405 176

# AUX 0.8077 0.8678 0.8367 121

# ADV 0.5446 0.4661 0.5023 118

# CCONJ 0.9880 0.9425 0.9647 87

# PART 0.6825 0.8600 0.7611 50

# NUM 0.7368 0.5000 0.5957 56

# SCONJ 0.6667 0.3429 0.4528 35

# INTJ 1.0000 0.4167 0.5882 12

# SYM 0.5000 0.0833 0.1429 12

# X 0.0000 0.0000 0.0000 9

# accuracy 0.7877 2346

# macro avg 0.7276 0.6420 0.6621 2346

# weighted avg 0.7854 0.7877 0.7808 2346
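The report lists a macro average and a weighted average, and the gap between them is large. As a sketch of how the two relate, the following code recomputes both from the per-class f1-score and support columns, copied by hand from the table above. The macro average treats every class equally, while the weighted average gives frequent classes such as NOUN more influence.

```python
# (label, f1-score, support) copied by hand from the report above.
per_class = [
    ("NOUN", 0.7812, 412), ("PUNCT", 0.9454, 281), ("VERB", 0.7221, 258),
    ("PRON", 0.9028, 217), ("ADP", 0.8579, 182), ("DET", 0.9338, 169),
    ("ADJ", 0.6282, 151), ("PROPN", 0.6405, 176), ("AUX", 0.8367, 121),
    ("ADV", 0.5023, 118), ("CCONJ", 0.9647, 87), ("PART", 0.7611, 50),
    ("NUM", 0.5957, 56), ("SCONJ", 0.4528, 35), ("INTJ", 0.5882, 12),
    ("SYM", 0.1429, 12), ("X", 0.0000, 9),
]

total_support = sum(support for _, _, support in per_class)

# Macro average: unweighted mean over classes.
macro_f1 = sum(f1 for _, f1, _ in per_class) / len(per_class)

# Weighted average: each class counts in proportion to its support.
weighted_f1 = sum(f1 * support for _, f1, support in per_class) / total_support

print(total_support)
print(round(macro_f1, 4))
print(round(weighted_f1, 4))
```

Rare classes with poor scores, such as SYM and X, pull the macro average down while barely affecting the weighted one, which explains why the macro F-score is so much lower than the accuracy.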

Summary

Flair is a modern NLP library that supports all core NLP tasks. This article showed how to apply text processing, text syntax, text semantics and document semantics tasks. Distinguishing features of Flair are its multi-language support for selected tasks, for example named entity recognition and part-of-speech tagging, and its use of transformer neural networks. It also offers a complete feature set for model training, from training data preparation through model and training configuration to training execution and metrics computation.