Hashicorp Vault is a secrets management tool. It enables encrypted storage of sensitive data like API credentials, database passwords, certificates, and encryption keys. This data is managed by flexible plugins called secrets engines. Once activated in a Vault instance, they provide standard API and CLI access for creating, reading, updating, and deleting secrets.

This article provides a complete overview of Vault secrets engines. It starts with a general explanation of their plugin implementation, explains the four groups of engines, and then lists all engines per group, with a concise tutorial for one example engine in each group.

The technical context of this article is Hashicorp Vault v1.20, released 2025-06-25. All provided information and command examples should be valid with newer versions too, barring changes to the syntax of the CLI commands.

The background material for this article stems from the official Hashicorp Vault documentation about Secrets engines and subsequent pages, as well as information from the binary itself.

Secrets Engine Plugins

Hashicorp Vault secrets engines are plugins that interact with the Vault storage backend and router component. They provide both CLI access and REST-like API endpoints for creating, reading, writing, and deleting data.

Activating a secrets engine triggers two processes. First, at the storage layer, each engine is assigned a UUID, which becomes an internal file path prefix. An engine plugin only has access to its own file path via a chroot-like implementation, which prevents access to other engines or Vault-internal storage paths. Second, the engine is mounted at a specific path and registered in the router with the sub-paths that reflect its available operations.

Secrets engines differ in the nature of the sensitive data they process, the ways in which this data can be modified, and the capabilities for token-based access to the managed secrets.

The lifecycle of a secrets engine can be managed completely via the Vault CLI. Commands follow the same patterns for enabling, configuring, creating secrets, and encrypting or decrypting data. Individual engines may differ in the specific paths or flags added to the commands.

Secrets Engine Groups

All engines supported by Vault v1.20 can be grouped as follows:

- Applications and Services: Provide access and generate dynamic roles to access external applications.

- Cloud Provider: Creation of static or dynamic roles and issuing tokens with access governed by these roles on cloud platforms

- Encryption Keys: Manage RSA, AES, or even Hardware Security Module keys for general purpose encryption and signing

- Native: Special and general-purpose engines included with Vault

In Vault Enterprise, additional secret engines are supported: Key Management, KMIP and Transform. They are out of scope for this article.

Applications and Services

Engines

- Databases: Manage dynamic secrets for accessing a wide variety of databases, including MySQL, PostgreSQL, Redis, and MongoDB

- Kubernetes: Creates dynamic Kubernetes service account tokens and additional service accounts, role binding and role resources, implementing RBAC policies (Role Based Access Control)

- MongoDB Atlas: Dynamic API keys to access a MongoDB project or organization

- PKI: Create dynamic x.509 certificates

- RabbitMQ: Generate credentials for accessing a RabbitMQ event stream

Applications from Hashicorp, the company that produces Vault, are supported as well:

- Consul: Dynamic Consul API tokens with associated ACL policies (Access Control List)

- HCP Terraform: Access to HCP Terraform with static API tokens

- Nomad: Create dynamic access tokens based on ACL policies (Access Control List)

Operation

Enabling secrets management for an external application follows the same steps for every engine. The following lifecycle phases provide a general overview.

- Activation: The secrets engine is activated by issuing vault secrets enable with the engine name as its parameter. By default, the engine is mounted at a path equal to the engine name, which for databases is the very generic database. Therefore, use the -path flag to provide a name that reflects the concrete type of database.

- Authorization: Vault cannot interact with the application without being authorized. Concrete methods vary with the engine. Cloud providers can be accessed, for example, with dedicated CLI binaries or account data that contains the access credentials, while databases need a connection string detailing domain names or IP addresses, ports, and access credentials.

- Authentication: Most application secrets engines issue dynamic secrets: leases on ephemeral accounts of the target system, which are automatically deleted once the token TTL expires. For this mechanism, suitable access rights, defined via RBAC or ACLs, are required. The engines may reuse roles defined at the target system, or even create them.

- Configuration: Each engine can be configured during initialization as well as with a dedicated endpoint by using vault write -f :engine/config/:name. Key-value pair arguments, specific to each engine, can be passed. Some engines also support structured data formats like JSON files for configuration.

- Operation: The default operation is to create access tokens by executing a request with the pattern vault read :engine/creds/:role. This creates an ephemeral lease on an account and returns the access token with the defined TTL and max TTL. When the TTL expires, or when the max TTL is reached despite timely renewals, the application account is destroyed. Some engines expose additional operations.

- Deactivation: When the secrets engine is not required anymore, it can be removed by running vault secrets disable. But beware: all secrets will be deleted immediately.

Example: Database Access for PostgreSQL

The Vault Postgres secrets engine supports the creation of access tokens for static roles and dynamic roles, as well as the rotation of credentials.

As with most other secret engines, the first step is to enable the engine:

vault secrets enable -path=postgres database

Vault is authorized to access the concrete Postgres instance by configuring the connection string, a combination of access credentials and a URL containing the server, port, and database name.

To access a locally running database, execute the following statement:

> vault write postgres/config/vault \
plugin_name="postgresql-database-plugin" \
connection_url="postgresql://${POSTGRES_USER}:${POSTGRES_PASSWORD}@localhost:5432/vault" \
allowed_roles="vault"

# Log messages

WARNING! The following warnings were returned from Vault:

* Password found in connection_url, use a templated url to enable root

rotation and prevent read access to password information.

The warning is clear, but all my attempts to remedy this failed with the following error message:

* error creating database object: error verifying connection: ping failed: failed to connect to `host=localhost user=postgres database=vault`: failed SASL auth (FATAL: password authentication failed for user "postgres" (SQLSTATE 28P01))
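For reference, the Vault documentation for the database secrets engine describes the templated form that the warning asks for: the connection URL carries the placeholders {{username}} and {{password}}, and the actual values are passed as separate username and password fields. The following is a minimal sketch under the assumption that POSTGRES_USER and POSTGRES_PASSWORD hold valid credentials for the local instance. It was not verified against the setup that produced the error above, and the function name is only illustrative.

```shell
# Sketch: configure the connection with a templated URL.
# Assumptions: POSTGRES_USER and POSTGRES_PASSWORD are exported and valid;
# the function name configure_postgres_templated is illustrative only.
configure_postgres_templated() {
  # Single quotes keep the {{...}} placeholders literal, so that Vault,
  # not the shell, fills them in from the username/password fields.
  vault write postgres/config/vault \
    plugin_name="postgresql-database-plugin" \
    connection_url='postgresql://{{username}}:{{password}}@localhost:5432/vault' \
    allowed_roles="vault" \
    username="${POSTGRES_USER}" \
    password="${POSTGRES_PASSWORD}"
}
```

Calling configure_postgres_templated issues the request. If the same authentication error appears, the credentials themselves are the more likely cause than the URL format.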

The next step is to define a Vault role for the configured database. Here, the role uses a fixed database role name, and its SQL creation statements are passed as an embedded string via the vault binary.

export ROLE_NAME=vault
export ROLE_PASSWORD=SECRET

vault write postgres/roles/vault \
db_name="vault" \
creation_statements="CREATE ROLE ${ROLE_NAME} WITH LOGIN PASSWORD '${ROLE_PASSWORD}'; \
GRANT SELECT ON ALL TABLES IN SCHEMA public TO ${ROLE_NAME};" \
default_ttl="1h" \
max_ttl="24h"

Let’s verify that the role was created successfully.

> vault read -format=json postgres/roles/vault

# Log messages

{

"request_id": "248df158-2158-be38-4cba-5832c6e92526",

"lease_id": "",

"lease_duration": 0,

"renewable": false,

"data": {

"creation_statements": [

"CREATE ROLE vault WITH LOGIN PASSWORD 'SECRET'; GRANT SELECT ON ALL TABLES IN SCHEMA public TO vault;"

],

"credential_type": "password",

"db_name": "vault",

"default_ttl": 3600,

"max_ttl": 86400,

"renew_statements": [],

"revocation_statements": [],

"rollback_statements": []

},

"warnings": null,

"mount_type": "database"

}

With the proper authentication and authorization in place, dynamic secrets can be created. Execute the following:

> vault read postgres/creds/vault

# Log messages

Key Value

--- -----

lease_id postgres/creds/vault/czukFzEOUE7sLcacYpXS0x

lease_duration 1h

lease_renewable true

password zJq80Hifgz-4zX5KWkKI

username root-vault-lDHxShgXSYx5CZYHJ850-1754138408

The output of this command contains the clear-text password and username that provide access to the database.

Note that the role name itself becomes a concrete entity in the Postgres DB, valid until the lease expires. A second request therefore fails, because a role with the same name cannot be created again:

> vault read postgres/creds/vault

# Log messages

Error reading postgres/creds/vault: Error making API request.

URL: GET http://127.0.0.1:8230/v1/postgres/creds/vault

Code: 500. Errors:

* 1 error occurred:

* failed to execute query: ERROR: role "vault" already exists (SQLSTATE 42710)
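The error is consistent with the creation statement hard-coding the role name vault: every credential request runs the same CREATE ROLE statement. The Vault documentation for the PostgreSQL plugin instead uses the placeholders {{name}}, {{password}}, and {{expiration}}, which Vault fills in per request. A minimal sketch of such a role definition, assuming the mount path and connection name used above (the function name is illustrative only):

```shell
# Sketch: role definition with templated creation statements.
# Assumptions: the engine is mounted at postgres/ and the connection is
# named vault; the function name write_templated_role is illustrative only.
write_templated_role() {
  # {{name}}, {{password}} and {{expiration}} are substituted by Vault for
  # every credential request, so each lease gets its own database role.
  vault write postgres/roles/vault \
    db_name="vault" \
    creation_statements="CREATE ROLE \"{{name}}\" WITH LOGIN PASSWORD '{{password}}' VALID UNTIL '{{expiration}}'; GRANT SELECT ON ALL TABLES IN SCHEMA public TO \"{{name}}\";" \
    default_ttl="1h" \
    max_ttl="24h"
}
```

With a role defined this way, repeated reads of postgres/creds/vault should each yield a distinct username instead of colliding on the same role name.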

Finally, disable the secrets engine so that all secrets will be deleted.

vault secrets disable postgres

Cloud Provider

Engines

- AliCloud: Access token generation based on RAM policies (Resource Access Management)

- AWS: Access tokens based on IAM policies (Identity and Access Management)

- Azure: Dynamic leases on service principals with group and role assignments

- Google Cloud: Dynamic Google Cloud account keys

Operation

Secrets management engines for cloud access are similar to application access: Dynamic, on-demand secrets are generated for specific access scopes defined by roles. Yet in a cloud environment these settings provide access to virtually any type of resource that can be managed.

The typical lifecycle of using such an engine is as follows.

- Activation: The chosen secrets engine is activated by running vault secrets enable :name, and as before, its mount path defaults to the secrets engine's name.

- Authentication: Every cloud provider has different options for authenticating a user. For AWS, it’s a combination of the region, access key, and secret key. In Azure, it’s a set of environment variables reflecting the subscription, the tenant, the service principal ID, and the password. And to work with GCP, it is a service account credentials file created via the GCP GUI. Cloud provider secrets engines don't have a dedicated configuration endpoint to change individual settings: a new configuration simply overwrites the old data completely.

- Authorization: Roles are cloud-specific access configurations that need to be defined in Vault. All plugins allow the creation of these roles with vault write commands, or referencing roles already present at the cloud provider itself. As an example, for AWS, IAM policy statements are triples of the keywords Effect, Action, and Resource, while for Azure the pair role_name and scope is required.

- Operation: Token creation based on the defined roles is the major use case for these secrets engines. Additional features vary with the cloud provider. AWS and GCP also allow interaction with both pre-configured static roles and Vault-defined dynamic roles. In Azure and GCP, account keys can be rotated.

- Deactivation: When the engine is not required anymore, it can be disabled with vault secrets disable :name. This immediately revokes all active leases, barring access to the cloud provider resources.

Example: AWS

The AWS secrets engine allows the creation of access tokens based on static or dynamic roles implemented with AWS-specific Identity and Access Management concepts. Furthermore, the root credentials used for authentication can be rotated, and CRUD operations can be applied to roles.

The first step, as always, is to enable the secrets engine.

vault secrets enable -path=aws-cloud aws

The engine needs to be configured with access credentials for an account whose permissions are a superset of all permissions that the individual tokens should grant. You need an access key, its secret key, and the region to which you want to get access. Note that the configuration path uses the mount path aws-cloud chosen above.

export AWS_ACCESS_KEY=SECRET
export AWS_SECRET_KEY=SECRET
export AWS_REGION=SECRET

vault write aws-cloud/config/root \
access_key=$AWS_ACCESS_KEY \
secret_key=$AWS_SECRET_KEY \
region=$AWS_REGION

Once general access is configured, roles need to be defined. Each role details which AWS-specific actions on which resources, or which access rights like user management, are enabled.

As an example, let’s create a role that allows management of EC2 instances. The starting point for the role definition is to log in to the AWS GUI, then follow the menu path IAM -> Roles -> Create role. At the last step of this dialog, a JSON declaration is shown.

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"sts:AssumeRole"

],

"Principal": {

"Service": [

"ec2.amazonaws.com"

]

}

}

]

}

This document forms the payload of a vault write command to define the role. As shown in the Hashicorp Vault documentation about AWS role setup, this command needs to include additional arguments: policy_arns, credential_type, and policy_document, which is the JSON document payload without the key Principal.

Here is an example:

> vault write aws-cloud/roles/ec2-manager \
policy_arns=arn:aws:iam::aws:policy/AmazonEC2ReadOnlyAccess,arn:aws:iam::aws:policy/IAMReadOnlyAccess \
credential_type=iam_user \
policy_document=-<<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "sts:AssumeRole"
      ],
      "Resource": "*"
    }
  ]
}
EOF

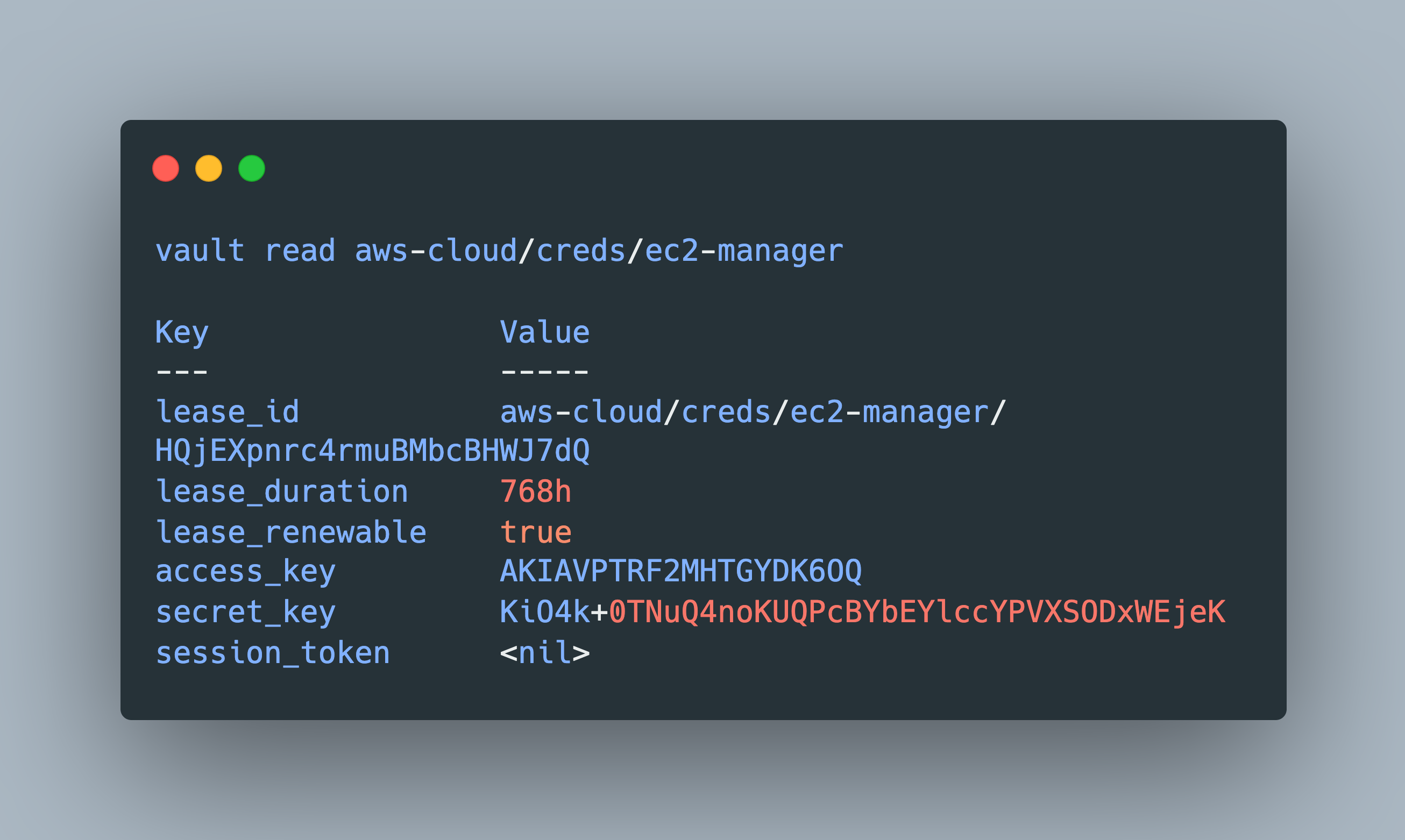

Once the role is defined, new tokens can be generated with a vault read command on the defined role name.

> vault read aws-cloud/creds/ec2-manager

# Log messages

Key Value

--- -----

lease_id aws-cloud/creds/ec2-manager/HQjEXpnrc4rmuBMbcBHWJ7dQ

lease_duration 768h

lease_renewable true

access_key AKIAVPTRF2MHTGYDK6OQ

secret_key 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

session_token <nil>

The returned tokens contain the access_key and secret_key with which an AWS CLI is authenticated to gain access to the intended resource.
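Because every read of the creds path issues a new lease, it helps to capture the response once and take both values from it. The following is a minimal sketch that picks fields out of the two-column table output. The helper name field_from_table is hypothetical, and the sample text is copied from the output above. AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY are the environment variables that the AWS CLI reads.

```shell
# field_from_table KEY: print the value column for KEY from the
# two-column table that vault read prints (table is read from stdin).
# The helper name is hypothetical.
field_from_table() {
  awk -v key="$1" '$1 == key { print $2 }'
}

# Sample taken from the output above. In practice, capture it once with
#   creds="$(vault read aws-cloud/creds/ec2-manager)"
creds='Key                Value
---                -----
lease_duration     768h
access_key         AKIAVPTRF2MHTGYDK6OQ
secret_key         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'

AWS_ACCESS_KEY_ID="$(printf '%s\n' "$creds" | field_from_table access_key)"
AWS_SECRET_ACCESS_KEY="$(printf '%s\n' "$creds" | field_from_table secret_key)"
export AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY
```

Parsing the table is fragile if a value ever contains whitespace. Requesting the response with -format=json and using a JSON parser is the more robust variant of the same idea.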

Finally, and as the last step in the engine's lifecycle, the engine is disabled again.

> vault secrets disable aws-cloud

Encryption Keys

Engines

- Google Cloud KMS: Manage encryption keys based on AES256, RSA 2048, RSA 3072, RSA 4096, EC P256, EC P384 as well as Hardware Security Module keys with FIPS 140-2 Level 3 standard

- Transit: Encryption as a service. It processes a base64-encoded plaintext document and returns a ciphertext. When this ciphertext is sent to the corresponding decryption endpoint, it returns the base64-encoded plaintext. The data itself is not stored in the backend

General Operation

Typical usage and the API paths involved are as follows:

- Activation: The engine is enabled by running vault secrets enable $ENGINE_NAME. Its mount point corresponds to the name, which can be customized with the -path flag.

- Creation: A secret is created by writing to the specific mount path, typically POST /engine/keys/:name. Additional key-value arguments can be used to determine the type of secret and its specific configuration.

- Configuration: The configuration of a secret can be modified at POST /transit/keys/:name/config, accepting key-value parameters similar to those used at creation time.

- Encryption: With the created secret name, an encryption operation is started with POST /transit/encrypt/:name. Provided input data can be plaintext or base64-encoded data as required by some engines. This returns an encrypted text.

- Decryption: Using the same secret name, and providing the ciphertext as a payload, accessing POST /transit/decrypt/:name returns the plaintext or base64-encoded plaintext.

- Other operations: Each engine provides different features that can be managed with its endpoints. For example, key rotation with POST /transit/keys/:name/rotate, certificate chain setting with POST /transit/keys/:name/set-certificate, certificate signing request creation with /transit/keys/:name/csr, or exporting the saved key with GET /transit/export/:key_type/:name(/:version).

- Deletion: Specific secrets are deleted by DELETE /transit/keys/:name.

- Deactivation: All secrets engines are disabled by running vault secrets disable $ENGINE_NAME. This immediately revokes all created secrets and deletes all corresponding data in the Vault storage.
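The paths above can also be called directly over HTTP. In Vault's HTTP API they sit under the /v1 prefix, and the token is sent in the X-Vault-Token header. The following is a minimal sketch of the encryption call, assuming that VAULT_ADDR and VAULT_TOKEN are exported and that the engine is mounted at transit. The function name transit_encrypt is illustrative only.

```shell
# Sketch: call the transit encrypt endpoint with curl.
# Assumptions: VAULT_ADDR and VAULT_TOKEN are exported, the engine is
# mounted at transit/; the function name transit_encrypt is illustrative.
transit_encrypt() {
  key_name="$1"
  # Encode the raw input; tr removes line wraps that some base64
  # implementations insert for long input.
  encoded="$(printf '%s' "$2" | base64 | tr -d '\n')"
  curl --silent --fail \
    --header "X-Vault-Token: ${VAULT_TOKEN}" \
    --request POST \
    --data "{\"plaintext\": \"${encoded}\"}" \
    "${VAULT_ADDR}/v1/transit/encrypt/${key_name}"
}
```

A call such as transit_encrypt rsa 'Hello Vault!' prints the JSON response, in which the ciphertext is found under the data key.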

Example: Transit Engine

The transit secrets engine serves as a one-shot encryption service endpoint. Any base64-encoded data is encrypted with the active, internal keyring of the engine, which returns a ciphertext. Sending this ciphertext to the decryption endpoint of the same key then decrypts the data again.

The secrets engine needs to be activated first. Its default path transit is the same as the engine name.

vault secrets enable transit

Second, a new encryption key needs to be created. The concrete type for it can be customized. Let’s use an rsa-4096 key.

> vault write transit/keys/rsa type=rsa-4096

# Log messages

Key Value

--- -----

allow_plaintext_backup false

auto_rotate_period 0s

deletion_allowed false

derived false

exportable false

imported_key false

keys hybrid_public_key: name:rsa-4096 public_key:-----BEGIN PUBLIC KEY-----

# MIICIjANBgkqhkiG9w0BAQEFAAOCAg8AMIICCgKCAgEApaQ8Xz0KxcyKwZ0z92mx

# ...

# -----END PUBLIC KEY-----

latest_version 1

min_available_version 0

min_decryption_version 1

min_encryption_version 0

name rsa

supports_decryption true

supports_derivation false

supports_encryption true

supports_signing true

type rsa-4096

The transit secrets engine accepts any base64 encoded cleartext for encryption. This encoding can be achieved with CLI commands.

export PLAINTEXT="Hello Vault!"

export PLAINTEXT_BASE64="$(echo ${PLAINTEXT}|base64)"

The encoded Base64 text can be seen as follows:

> echo $PLAINTEXT_BASE64

# Log messages

SGVsbG8gVmF1bHQK
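Note that echo appends a newline, which is encoded together with the text: the trailing K in the output above stems from it. If the decrypted value must match the input byte for byte, printf '%s' avoids the extra character. A small sketch of the difference, using the same example string:

```shell
# Single quotes keep the exclamation mark literal in interactive shells.
PLAINTEXT='Hello Vault!'

# echo appends a newline, which becomes part of the encoded data.
WITH_NEWLINE="$(echo "${PLAINTEXT}" | base64)"

# printf '%s' emits the string exactly as given.
WITHOUT_NEWLINE="$(printf '%s' "${PLAINTEXT}" | base64)"

echo "${WITH_NEWLINE}"      # SGVsbG8gVmF1bHQhCg==
echo "${WITHOUT_NEWLINE}"   # SGVsbG8gVmF1bHQh
```

Either form is accepted by the transit engine. The choice only decides whether the newline is part of the protected data.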

Forward this text to the created key name rsa as follows:

> vault write transit/encrypt/rsa plaintext="${PLAINTEXT_BASE64}"

# Log messages

Key Value

--- -----

ciphertext vault:v1:oy/0h/DpvPGVBw7F74HhTqbqroaBfePmZE8xdFK200gifW0YPis4z+hPyip9eRSO2pf+cmSH7DGvY4TlRFK5+MzevFeFL0ukDif8NqunAFyZ4RX02t3DGiq10sTKqTGLBfa75AHKJygcW7LZjaGggVUhfepkjjFuls/K2AiEQhee/dweUnLjJEQMIHgJJbwL01TbE4s1UoMv+2kiFODGc2abVIZ8aZyNuWzfMl1hK7+lnuPVbUKkoZwDuzuvDWp3bRqPikxJeldGkacgPw+AGwynX9wsWYbBfmBqZXPskZIYRsRPLfa2uwaRV/96sOso0WUIcs4qC03M3el9R6JbhgRjHuowU1PJmKOxtVqFwPYGuFRNq/B16uq5BnPVebxvsgiIi+5u97ig8BRlF25WSV2aCQ3nBYjfFFD4NSPnvY8aZHSsqsBKCFxBZIPns8Ccrx1mQiDSh1C2dsgpIjJMsTTckjkp4mukysxo8J5T9Th4bKLXssaQ6c6IACKohHcR9o1cnQysIB3N9oG1JAkzANSPrQ4K490piPHUwLcfzJKW0DhOhAnBW4BKY6qTjL7wJJJQosBZANdpiDyx6xu0kgCyN2GK9Idyx9RVTeJoyIDE2jbaprYNElwu34J7WjV/NruJfz3S4HRwNoZG2UEIvS5X781DwhmxLAFNjPP1PPs=

key_version 1
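The returned value has the shape vault:VERSION:PAYLOAD, so the key version can be read from the ciphertext itself with plain shell parameter expansion. In this minimal sketch the ciphertext is shortened for readability and serves only as an illustration:

```shell
# Illustrative value: the payload is shortened, a real ciphertext is longer.
CIPHERTEXT='vault:v1:oy/0h/DpvPGVBw7F74Hh'

REST="${CIPHERTEXT#vault:}"   # strip the fixed "vault:" prefix
KEY_VERSION="${REST%%:*}"     # text before the next colon, e.g. v1
PAYLOAD="${REST#*:}"          # base64 payload after the version

echo "key version: ${KEY_VERSION#v}"   # key version: 1
```

The base64 payload contains no colons, which is why splitting at the first two colons is sufficient.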

The ciphertext starts with the metainformation about the keyring version - v1 means that it is the very first version. Let’s rotate the key two times.

> vault write -f /transit/keys/rsa/rotate

# Log messages

Key Value

--- -----

allow_plaintext_backup false

auto_rotate_period 0s

deletion_allowed false

derived false

exportable false

imported_key false

keys hybrid_public_key: name:rsa-4096 public_key:-----BEGIN PUBLIC KEY-----

# MIICIjANBgkqhkiG9w0BAQEFAAOCAg8AMIICCgKCAgEApaQ8Xz0KxcyKwZ0z92mx

# ...

# -----END PUBLIC KEY-----

# certificate_chain:

# public_key:-----BEGIN PUBLIC KEY-----

# MIICIjANBgkqhkiG9w0BAQEFAAOCAg8AMIICCgKCAgEAsu2CZ6FEYaVy6aVeLjXl

# ...

# -----END PUBLIC KEY-----

# certificate_chain:

# public_key:-----BEGIN PUBLIC KEY-----

# MIICIjANBgkqhkiG9w0BAQEFAAOCAg8AMIICCgKCAgEA7u3+S3NvN104qlWk6L4J

# RDcI+u+xkHZAPGyApGRWHh0CAwEAAQ==

# -----END PUBLIC KEY-----

# ]]

latest_version 3

min_available_version 0

min_decryption_version 1

min_encryption_version 0

As you see, old keys are stored as parts of a certificate chain.

The v1 ciphertext, assumed here to be stored in the shell variable CIPHERTEXT, can still be decrypted with the current v3 of the keyring.

> vault write transit/decrypt/rsa ciphertext="${CIPHERTEXT}"

# Log messages

Key Value

--- -----

plaintext SGVsbG8gVmF1bHQK

> base64 --decode <<< "SGVsbG8gVmF1bHQK"

# Log messages

Hello Vault

But when min_decryption_version is set to version 3, decryption of a v1 ciphertext is no longer possible. This is a safeguarding mechanism: by retiring older key versions, unintentionally leaked ciphertexts can no longer be decrypted via Vault.

> vault write -f /transit/keys/rsa/config min_decryption_version=3

> vault write transit/decrypt/rsa ciphertext="${CIPHERTEXT}"

# Log messages

Error writing data to transit/decrypt/rsa: Error making API request.

URL: PUT http://127.0.0.1:8230/v1/transit/decrypt/rsa

Code: 400. Errors:

* ciphertext or signature version is disallowed by policy (too old)

Native

Engines

- Cubbyhole: A general-purpose secret store that is scoped to a single token. Its contents are destroyed when that token expires

- Key Value v1: Stores simple key-value pairs in which the value can be unstructured text or a complex JSON string. Successive write operations to the same key overwrite the old data without keeping a history.

- Key Value v2: Successor to the v1 engine. It supports a versioned store in which a configurable number of versions is kept, and in which specific versions can be explicitly deleted

- Identity: A default engine which cannot be disabled. Vault users are represented by one entity with different aliases, where the latter refer to authentication providers like userpass, LDAP, or GitHub.

Operation

Native engines provide similar commands for activation, initial configuration, and deactivation. A generalization of the lifecycle-specific operations is not possible because each engine provides a very specific feature set.

Example: kv-v2 Engine

For an example of how to use this engine, see my older post Hashicorp Vault: An Introduction to the Secrets Management Application.

Conclusion

Secrets engines are at the heart of Hashicorp Vault. They enable the full lifecycle management of all kinds of sensitive data, from credentials to encryption keys. This article gave a complete overview of secrets engines. First, it explained the plugin characteristics common to all engines. Second, all engines were grouped into a) applications and services, b) cloud provider, c) encryption keys, and d) native engines. Third, for each group, all available engines were listed, followed by a concise tutorial on how to work with one of them.