When you build a robot, there are basically two approaches. You can start from scratch: get all required hardware (the chassis, sensors, actuators, and microcontrollers or single-board computers) and write the software yourself. Or you can buy a complete kit, which includes all parts and a software stack. For the first half of 2021, I followed the first approach and built two moving robots, RADU MK1 and RADU MK2. The MK2 version also included ROS as the middleware layer, supporting the goal of semi-autonomous navigation by sensing its environment.

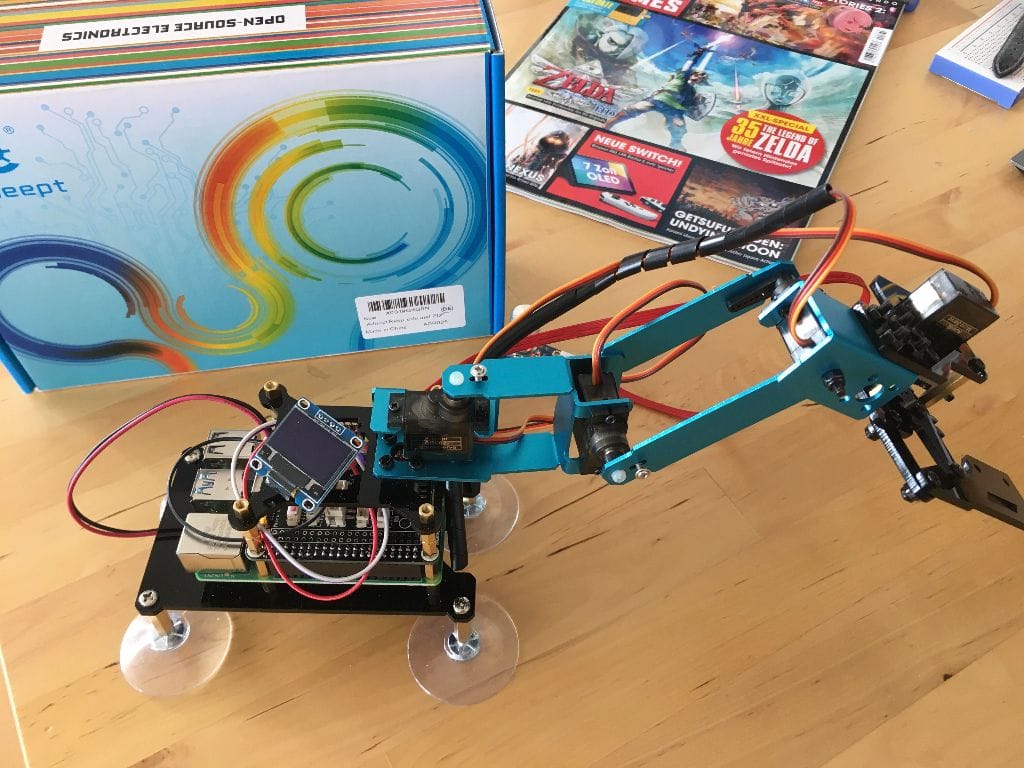

My goal with RADU is to give him the ability to grab items and move them to another place. To jumpstart my understanding of and skills with robotic arms, I decided to switch to the second approach and bought a complete robotic arm kit. This kit is a 4-degree-of-freedom arm that is mounted on a Raspberry Pi with a dedicated PCA9685 motor shield. In this article, I explain the lessons learned in assembling this kit and making it functional.

Assembling

Installing the OS

I like to use the most up-to-date software when I’m working. So naturally, I downloaded the latest Raspberry Pi OS release available at the time of writing: 2021-05-07-raspios-buster-armhf. But with this version, I could not get any of the examples to run. Since the official manual for the arm robot kit was written in 05/2020, I downloaded an earlier version of Raspbian from this mirror: 2020-02-05-raspbian-buster. With this version, the examples worked flawlessly.

Raspbian OS Customization

Selecting the correct OS version is the first step. Before booting, make these changes on the SD card:

- Enable SSH: Add an empty file called `ssh` to the `/boot` folder
- Enable WLAN: Add a `wpa_supplicant.conf` file to the `/boot` folder with this content:

    ctrl_interface=DIR=/var/run/wpa_supplicant GROUP=netdev
    country=<YOUR-COUNTRY-CODE>
    update_config=1
    network={
        ssid="<YOUR-SSID>"
        psk="<YOUR-PASSWORD>"
    }
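Both boot files can also be created with a short script once the SD card's boot partition is mounted on your computer. The following is a minimal sketch, not part of the kit's documentation: the function name `write_headless_files` is made up for illustration, and the file names assume the headless setup convention of Raspbian Buster (an empty `ssh` file and a `wpa_supplicant.conf` file).

```python
from pathlib import Path

# Same content as the configuration shown above, with placeholders.
WPA_TEMPLATE = """ctrl_interface=DIR=/var/run/wpa_supplicant GROUP=netdev
country={country}
update_config=1
network={{
    ssid="{ssid}"
    psk="{psk}"
}}
"""


def write_headless_files(boot_dir, ssid, psk, country):
    """Create the ssh marker file and wpa_supplicant.conf in boot_dir.

    Hypothetical helper: boot_dir is wherever the SD card's boot
    partition is mounted on your machine.
    """
    boot = Path(boot_dir)
    # An empty file named "ssh" enables the SSH server on first boot.
    (boot / "ssh").touch()
    # The WLAN settings are picked up by the OS on first boot.
    conf = boot / "wpa_supplicant.conf"
    conf.write_text(WPA_TEMPLATE.format(country=country, ssid=ssid, psk=psk))
    return conf
```

A call could look like `write_headless_files("/Volumes/boot", "<YOUR-SSID>", "<YOUR-PASSWORD>", "<YOUR-COUNTRY-CODE>")`, where the mount path is an assumption that depends on your operating system.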

Boot the Raspberry Pi, and then connect with `ssh pi@raspberrypi.local`. Continue with these optimizations:

- Expand the SD card storage: Run `sudo raspi-config`, then go to `7 Advanced Options` and `A1 Expand Filesystem`, confirm with `Yes`
- Enable VNC: Run `sudo raspi-config`, then go to `5 Interfacing Options` and `P3 VNC`, confirm with `Yes`
- Allow access to I2C, SPI and GPIO by running `sudo usermod -a -G i2c,spi,gpio pi`
- Fix locales: Run `sudo dpkg-reconfigure locales` and mark all relevant locales. Then, run `sudo nano /etc/environment` and enter these lines:

    LC_ALL=en_US.UTF-8
    LANG=en_US.UTF-8

Reboot and be ready.

Add the ARM Source Code

The official repository contains the setup, example code and programs for remote controlling the arm. We will explore these step-by-step, but the most important thing comes first.

The setup.py script installs all required libraries and applies a number of system configuration changes, such as activating I2C. It also installs an automatic startup script, which can prevent your system from booting fully if a library is not installed. Therefore, I recommend deleting the following lines at the end of the script.

    # SOURCE: https://github.com/adeept/adeept_rasparms/blob/master/setup.py
    os.system('sudo chmod 777 //home/pi/startup.sh')

    replace_num('/etc/rc.local','fi','fi\n//home/pi/startup.sh start')

    try: #fix conflict with onboard Raspberry Pi audio
        os.system('sudo touch /etc/modprobe.d/snd-blacklist.conf')
        with open("/etc/modprobe.d/snd-blacklist.conf",'w') as file_to_write:
            file_to_write.write("blacklist snd_bcm2835")
    except:
        pass

    print('The program in Raspberry Pi has been installed, disconnected and restarted. \nYou can now power off the Raspberry Pi to install the camera and driver board (Robot HAT). \nAfter turning on again, the Raspberry Pi will automatically run the program to set the servos port signal to turn the servos to the middle position, which is convenient for mechanical assembly.')
    print('restarting...')
    os.system("sudo reboot")

Run the edited setup.py, then reboot.
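Instead of deleting the lines by hand, the script can also be truncated programmatically. This is only a sketch: it assumes that the first line to remove is the `chmod` call on `startup.sh` quoted above and that everything after it should go. The names `strip_startup_section` and `patch_setup` are hypothetical helpers, so inspect the result before running it.

```python
from pathlib import Path

# Assumption: this text appears in the first line of the section to remove.
MARKER = "chmod 777 //home/pi/startup.sh"


def strip_startup_section(source, marker=MARKER):
    """Return source truncated just before the first line containing marker.

    If the marker is not found, the source is returned unchanged.
    """
    kept = []
    for line in source.splitlines(keepends=True):
        if marker in line:
            break
        kept.append(line)
    return "".join(kept)


def patch_setup(path):
    """Rewrite the script at path in place, keeping a .bak copy next to it."""
    script = Path(path)
    original = script.read_text()
    script.with_name(script.name + ".bak").write_text(original)
    script.write_text(strip_startup_section(original))
```

Calling `patch_setup("setup.py")` inside the cloned repository would leave the original as `setup.py.bak`, so the change is easy to undo.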

Test Run OLED and Actuators

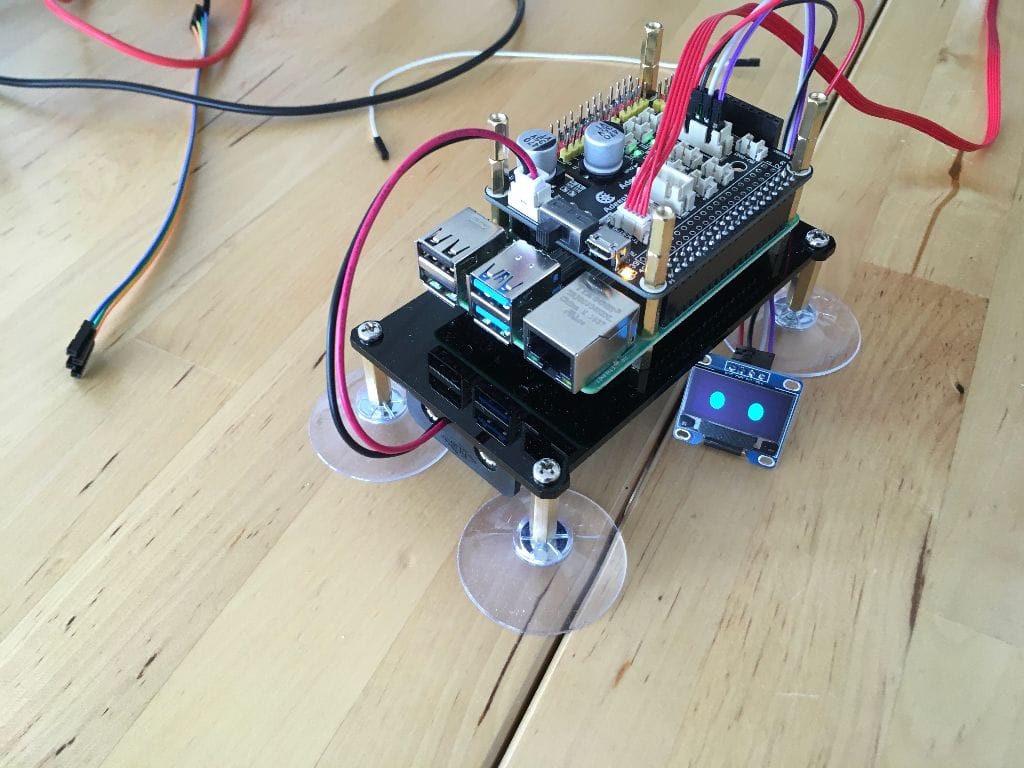

With the arm assembled, it’s time to test that the OLED display and the servo motors work as expected. For these, the official repository includes small test programs that can be used.

Let’s start with the OLED display. It’s connected via dedicated I2C pins on the motor shield in this order: SDA, SCL, VCC (3.3V) and GND. Then I ran this program:

    #SOURCE: https://github.com/adeept/adeept_rasparms/blob/master/CourseCode/01OLEDtext/OLEDtext.py

    #Import the library for controlling the OLED screen and delay time
    from luma.core.interface.serial import i2c
    from luma.core.render import canvas
    from luma.oled.device import ssd1306
    import time

    #Instantiate the object used to control the OLED
    serial = i2c(port=1, address=0x3C)
    device = ssd1306(serial, rotate=0)

    #Write the text "ROBOT" at the position (0, 20) on the OLED screen
    with canvas(device) as draw:
        draw.text((0, 20), "ROBOT", fill="white")

    while True:
        time.sleep(10)

When I ran this program for the first time, this error was displayed:

    Traceback (most recent call last):
      File "OLEDtext.py", line 9, in <module>
        device = ssd1306(serial, rotate=0)
      File "/usr/local/lib/python3.7/dist-packages/luma/oled/device/__init__.py", line 190, in __init__
        self._const.NORMALDISPLAY)
      File "/usr/local/lib/python3.7/dist-packages/luma/core/device.py", line 48, in command
        self._serial_interface.command(*cmd)
      File "/usr/local/lib/python3.7/dist-packages/luma/core/interface/serial.py", line 96, in command
        'I2C device not found on address: 0x{0:02X}'.format(self._addr))
    luma.core.error.DeviceNotFoundError: I2C device not found on address: 0x3C

The error is clear, right? But of course, I went the “software first” way and tried other Python libraries to get it working, to no avail. Then I checked which I2C devices are connected to the Raspberry Pi with `sudo i2cdetect -y 1`:

         0  1  2  3  4  5  6  7  8  9  a  b  c  d  e  f
    00:          -- -- -- -- -- -- -- -- -- -- -- -- --
    10: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --
    20: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --
    30: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --
    40: 40 -- -- -- -- -- -- -- -- -- -- -- -- -- -- --
    50: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --
    60: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --
    70: 70 -- -- -- -- -- -- --

Ok. What happens if I connect the working OLED display of my RADU robot and scan again?

         0  1  2  3  4  5  6  7  8  9  a  b  c  d  e  f
    00:          -- -- -- -- -- -- -- -- -- -- -- -- --
    10: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --
    20: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --
    30: -- -- -- -- -- -- -- -- -- -- -- -- 3c -- -- --
    40: 40 -- -- -- -- -- -- -- -- -- -- -- -- -- -- --
    50: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --
    60: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --
    70: 70 -- -- -- -- -- -- --
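The same comparison can be scripted. The sketch below parses `i2cdetect` output and returns the addresses that responded. `parse_i2cdetect` is a hypothetical helper, and it assumes the output format shown above, where every detected device is printed as its own two-digit hex address.

```python
import re

HEX_BYTE = re.compile(r"[0-9a-f]{2}")


def parse_i2cdetect(output):
    """Return the set of I2C addresses reported in i2cdetect output."""
    found = set()
    for line in output.splitlines():
        # Data rows look like "30: -- -- 3c --"; the header row has no colon.
        _, sep, cells = line.partition(":")
        if not sep:
            continue
        for cell in cells.split():
            # "--" means no response, "UU" means claimed by a kernel driver.
            if HEX_BYTE.fullmatch(cell):
                found.add(int(cell, 16))
    return found


# Excerpt of the second scan from above.
scan = """
30: -- -- -- -- -- -- -- -- -- -- -- -- 3c -- -- --
40: 40 -- -- -- -- -- -- -- -- -- -- -- -- -- -- --
70: 70 -- -- -- -- -- -- --
"""
oled_present = 0x3C in parse_i2cdetect(scan)
print("OLED found:", oled_present)
```

On the Raspberry Pi, the text could come from `subprocess.run(["i2cdetect", "-y", "1"], capture_output=True, text=True).stdout`. The two addresses that show up in both scans are most likely the motor shield: 0x40 is the default address of the PCA9685, and 0x70 is its default "all call" address.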

Ah! The hardware is broken! After switching to a new OLED display, the software worked and I got the screen running.

Testing the Servos

Moving the servos takes only a very simple script.

    #SOURCE: https://github.com/adeept/adeept_rasparms/blob/master/CourseCode/03Servo180/180Servo_01.py
    import Adafruit_PCA9685
    import time

    pwm = Adafruit_PCA9685.PCA9685()
    pwm.set_pwm_freq(50)

    while 1:
        pwm.set_pwm(3, 0, 300)
        time.sleep(1)
        pwm.set_pwm(3, 0, 400)
        time.sleep(1)

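This code is straightforward. With the help of the PCA9685 library, we create a PWM object. This object can access all 16 channels of the PCA9685, numbered 0 to 15. It’s configured with a PWM frequency of 50 Hz, and then sets the pulse width for any channel: set_pwm takes the channel number, followed by the tick counts (0 to 4095) at which the signal switches on and off within each cycle.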
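To see what the values 300 and 400 mean, it helps to convert them into pulse widths. The PCA9685 divides each PWM cycle into 4096 ticks, and at 50 Hz one cycle lasts 20 ms. Here is a minimal sketch of that arithmetic; the helper names are made up for illustration, and which pulse widths your servos actually accept is something to check against their datasheet.

```python
TICKS_PER_CYCLE = 4096  # 12-bit resolution of the PCA9685


def ticks_to_ms(ticks, freq_hz=50.0):
    """Convert an 'off' tick count into a pulse width in milliseconds."""
    period_ms = 1000.0 / freq_hz
    return ticks * period_ms / TICKS_PER_CYCLE


def ms_to_ticks(pulse_ms, freq_hz=50.0):
    """Convert a pulse width in milliseconds into the nearest tick count."""
    period_ms = 1000.0 / freq_hz
    return round(pulse_ms * TICKS_PER_CYCLE / period_ms)


for ticks in (300, 400):
    print(ticks, "ticks =", round(ticks_to_ms(ticks), 2), "ms")
```

With these numbers, the test script switches channel 3 between pulses of roughly 1.46 ms and 1.95 ms once per second, which is why the servo only swings through part of its range.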

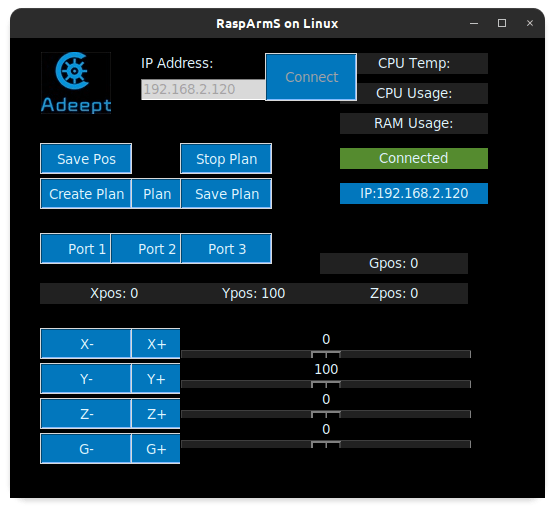

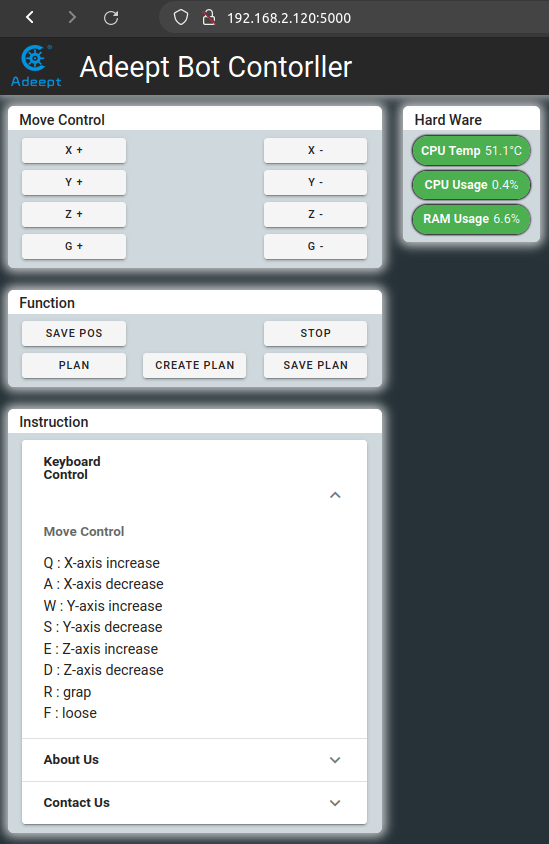

Starting the main program

When all the tests are successful, we can finish the arm assembly and start the control programs. The official repository offers two options: Start a TCP socket server on the arm, then start a Tk-based GUI application that connects to the server. Or start a Flask web application on the arm, and connect via any browser. Both options offer the same functions: moving the arm directly, recording a movement plan, and playing back the recorded plan. You can see both interfaces in the following images.

The hard part of getting the arm operational is setting the default position of each servo. Both interfaces are hard-coded with initial values, but depending on how far you turned the servos during assembly, these values may lead to a very awkward position: My arm always bent right back on itself.

But this challenge will be addressed in the next article.

Conclusion

This article showed you how to set up the RASP Arm, a robotic arm kit for the Raspberry Pi. Once the arm is assembled, you need to set up the Raspberry Pi with the appropriate OS. Do not use the latest Raspberry Pi OS - I could not get the Python libraries for the arm running. Instead, use the older Raspbian, ideally the version 2020-02-05-raspbian-buster. Start the Raspberry Pi, configure WLAN, SSH, and some optimizations, but do not update the packages. Next, clone the official repository, edit the provided setup.py, and then run it. After rebooting, all Python libraries are available and support the OLED and the servos. Then start the TCP server or the web server, connect to it from a local computer or the Raspberry Pi itself, and use the arm to pick up and move objects.