RGB Depth Camera in Robotics: Starting with the RealSense D435 SDK

A self-navigating robot needs a model of its surroundings, and several kinds of visual sensors can provide one. Following an earlier article that gave an overview of visual sensors, I decided to use the Intel RealSense D435 camera. This RGB depth camera provides stereo pictures and point cloud data, has a small form factor, and is actively supported by its manufacturer, including support for the Robot Operating System (ROS).

Let’s start from scratch and see the capabilities of its SDK. This article shows how to install the RealSense SDK and walks through an example Python program that uses the camera to capture and transform pictures.

The technical context of this article is Ubuntu Server 20.04 LTS and librealsense v2.45.0. The steps should also work on other Debian-based Linux systems and with later versions of the SDK.

Installing the RealSense SDK

To install the Intel RealSense SDK, there are two options: building it from source, or using the prebuilt Debian packages. We will use the latter approach because it simplifies the installation significantly. If you want to use the latest features of the SDK, check my earlier article for a detailed step-by-step explanation of the manual compilation and installation.

We will follow the official documentation for librealsense.

First, we need to add an additional repository to our OS package sources, together with its GPG key.

sudo apt-key adv --keyserver keys.gnupg.net --recv-key F6E65AC044F831AC80A06380C8B3A55A6F3EFCDE || sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv-key F6E65AC044F831AC80A06380C8B3A55A6F3EFCDE

sudo add-apt-repository "deb https://librealsense.intel.com/Debian/apt-repo focal main" -u

Then, update the local package index:

sudo apt-get update

Finally, we can install the precompiled libraries:

sudo apt-get install librealsense2-dkms librealsense2-utils librealsense2-dev

If all went well, connect the camera, preferably to a USB 3.0 port, and check the kernel log with the following command.

dmesg

[ 245.117583] usb 2-1: new SuperSpeed Gen 1 USB device number 2 using xhci_hcd

[ 245.138470] usb 2-1: New USB device found, idVendor=8086, idProduct=0b07, bcdDevice=50.ce

[ 245.138485] usb 2-1: New USB device strings: Mfr=1, Product=2, SerialNumber=3

[ 245.138499] usb 2-1: Product: Intel(R) RealSense(TM) Depth Camera 435

[ 245.138511] usb 2-1: Manufacturer: Intel(R) RealSense(TM) Depth Camera 435

[ 245.138523] usb 2-1: SerialNumber: 020223022670

[ 245.178153] uvcvideo: Unknown video format 00000050-0000-0010-8000-00aa00389b71

[ 245.178354] uvcvideo: Found UVC 1.50 device Intel(R) RealSense(TM) Depth Camera 435 (8086:0b07)

[ 245.182825] input: Intel(R) RealSense(TM) Depth Ca as /devices/platform/scb/fd500000.pcie/pci0000:00/0000:00:00.0/0000:01:00.0/usb2/2-1/2-1:1.0/input/input0

[ 245.183250] uvcvideo: Unknown video format 36315752-1a66-a242-9065-d01814a8ef8a

[ 245.183265] uvcvideo: Found UVC 1.50 device Intel(R) RealSense(TM) Depth Camera 435 (8086:0b07)

[ 245.187161] usbcore: registered new interface driver uvcvideo

[ 245.187169] USB Video Class driver (1.1.1)

[ 250.017248] usb 2-1: USB disconnect, device number 2

[ 250.297627] usb 2-1: new SuperSpeed Gen 1 USB device number 3 using xhci_hcd

[ 250.322570] usb 2-1: New USB device found, idVendor=8086, idProduct=0b07, bcdDevice=50.ce

[ 250.322588] usb 2-1: New USB device strings: Mfr=1, Product=2, SerialNumber=3

[ 250.322603] usb 2-1: Product: Intel(R) RealSense(TM) Depth Camera 435

[ 250.322617] usb 2-1: Manufacturer: Intel(R) RealSense(TM) Depth Camera 435

[ 250.322630] usb 2-1: SerialNumber: 020223022670

[ 250.328686] uvcvideo: Unknown video format 00000050-0000-0010-8000-00aa00389b71

[ 250.328907] uvcvideo: Found UVC 1.50 device Intel(R) RealSense(TM) Depth Camera 435 (8086:0b07)

[ 250.335195] input: Intel(R) RealSense(TM) Depth Ca as /devices/platform/scb/fd500000.pcie/pci0000:00/0000:00:00.0/0000:01:00.0/usb2/2-1/2-1:1.0/input/input1

[ 250.336253] uvcvideo: Unknown video format 36315752-1a66-a242-9065-d01814a8ef8a

[ 250.336268] uvcvideo: Found UVC 1.50 device Intel(R) RealSense(TM) Depth Camera 435 (8086:0b07)
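If you want to check the kernel log from a script instead of reading it by eye, the following is a minimal sketch. It assumes the log format shown above and the USB IDs from that output (vendor `8086`, product `0b07`); the function names are my own and not part of the SDK.

```python
import re

# USB IDs taken from the dmesg output above: Intel vendor ID and D435 product ID.
INTEL_VENDOR_ID = "8086"
D435_PRODUCT_ID = "0b07"

USB_DEVICE_PATTERN = re.compile(
    r"usb (?P<port>[\d.-]+): New USB device found, "
    r"idVendor=(?P<vendor>[0-9a-f]{4}), idProduct=(?P<product>[0-9a-f]{4})"
)


def find_d435_ports(dmesg_text):
    """Return the USB ports on which a D435 was announced, in log order."""
    ports = []
    for match in USB_DEVICE_PATTERN.finditer(dmesg_text):
        ids = (match["vendor"], match["product"])
        if ids == (INTEL_VENDOR_ID, D435_PRODUCT_ID) and match["port"] not in ports:
            ports.append(match["port"])
    return ports


def uses_superspeed(dmesg_text, port):
    """True if the kernel reported a SuperSpeed (USB 3.x) link for this port."""
    return f"usb {port}: new SuperSpeed" in dmesg_text


sample = """\
[ 245.117583] usb 2-1: new SuperSpeed Gen 1 USB device number 2 using xhci_hcd
[ 245.138470] usb 2-1: New USB device found, idVendor=8086, idProduct=0b07, bcdDevice=50.ce
"""

for port in find_d435_ports(sample):
    print(port, "SuperSpeed" if uses_superspeed(sample, port) else "slower link")
```

On a real system, the text could come from `subprocess.run(["dmesg"], capture_output=True, text=True).stdout` instead of the sample string.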

Now we can start one of the built-in tools to see that the library is working. The simplest one lists all connected devices.

rs-enumerate-devices

Device info:

Name : Intel RealSense D435

Serial Number : 018322070534

Firmware Version : 05.12.14.50

Recommended Firmware Version : 05.12.15.50

Physical Port : 2-1-5

Debug Op Code : 15

Advanced Mode : YES

Product Id : 0B07

Camera Locked : YES

Usb Type Descriptor : 3.2

Product Line : D400

Asic Serial Number : 020223022670

Firmware Update Id : 020223022670
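The output above shows a firmware version that is older than the recommended one. As a small sketch, the `Key : Value` lines can be parsed into a dictionary to detect this automatically. This assumes that only the device info block shown above is passed in; the helper names are hypothetical.

```python
def parse_device_info(text):
    """Parse 'Key : Value' lines into a dict, skipping lines without a value."""
    info = {}
    for line in text.splitlines():
        key, separator, value = line.partition(":")
        if separator and key.strip() and value.strip():
            info[key.strip()] = value.strip()
    return info


def firmware_tuple(version):
    """Turn '05.12.14.50' into (5, 12, 14, 50) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def firmware_is_outdated(info):
    """Compare the installed firmware against the recommended version."""
    installed = firmware_tuple(info["Firmware Version"])
    recommended = firmware_tuple(info["Recommended Firmware Version"])
    return installed < recommended


sample = """\
Device info:
Name : Intel RealSense D435
Serial Number : 018322070534
Firmware Version : 05.12.14.50
Recommended Firmware Version : 05.12.15.50
Usb Type Descriptor : 3.2
"""

info = parse_device_info(sample)
print(info["Name"], "needs a firmware update:", firmware_is_outdated(info))
```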

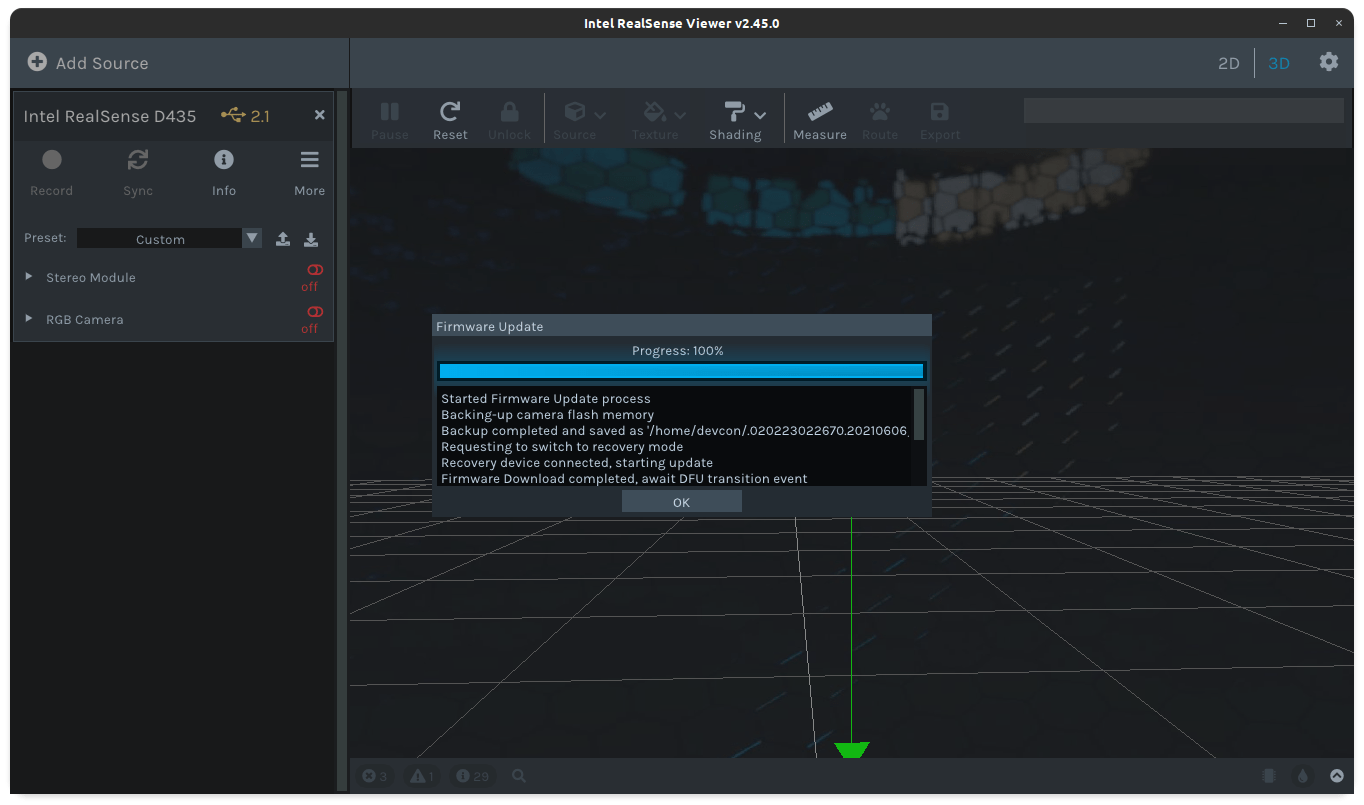

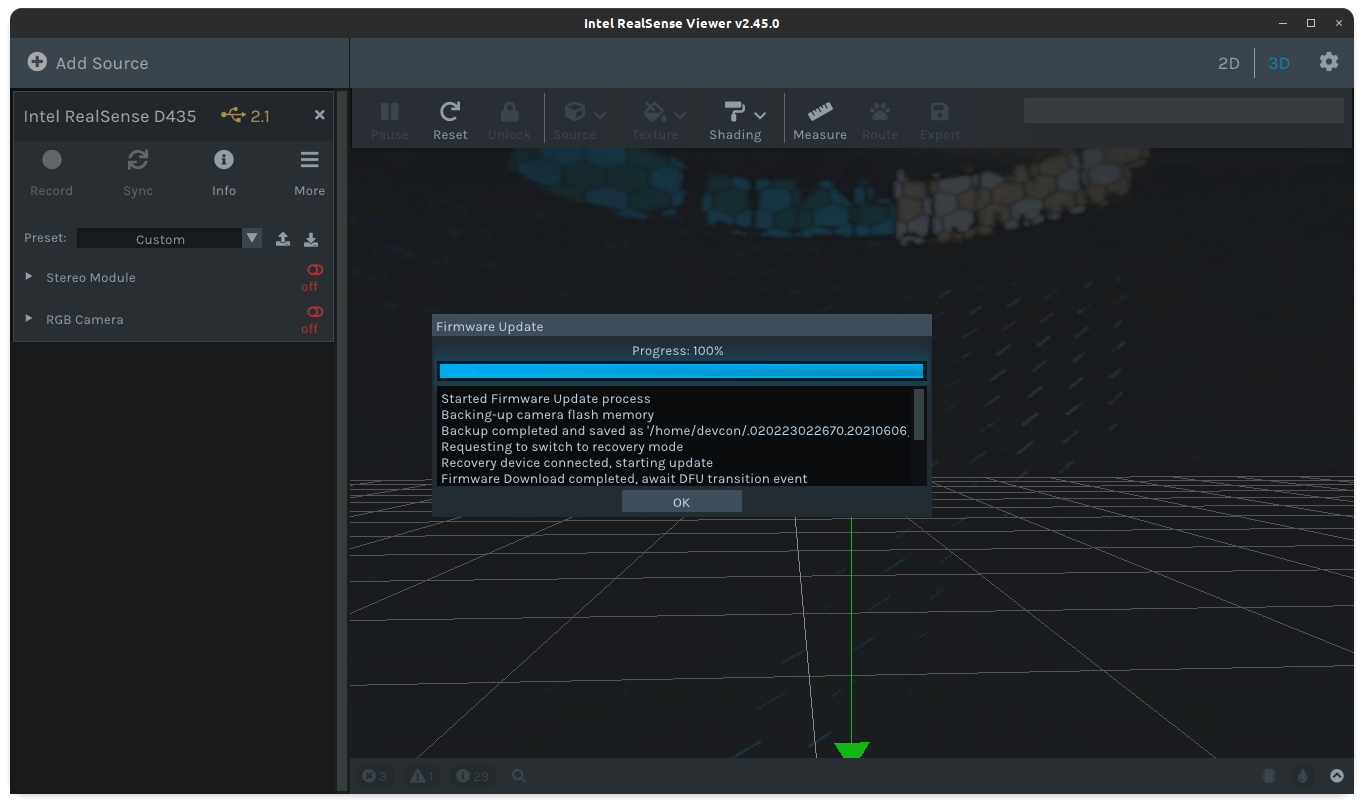

If the device on which you installed the SDK also has a graphical environment, you can also run realsense-viewer.

Additional System Configuration

With the SDK installed and the librealsense helper tools working, a few more steps complete the system setup.

A. Add the path in which the librealsense SDK is installed - defaults to /usr/local/lib - to the environment variable PYTHONPATH:

export PYTHONPATH=$PYTHONPATH:/usr/local/lib

B. Install the following additional libraries so that the examples work: NumPy is a library for scientific computing, and OpenCV provides several computer vision algorithms.

python3 -m pip install opencv-python numpy

C. In all Python scripts, import the library as follows (as suggested in a GitHub issue):

import pyrealsense2.pyrealsense2 as rs
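Which import path works can depend on how the bindings were installed. The following is a hedged sketch of a loader that tries the nested module path first and then the flat module name; `load_realsense` is a hypothetical helper, not part of the SDK.

```python
import importlib


def load_realsense():
    """Return the pyrealsense2 bindings, or None if they cannot be imported."""
    # Try the nested path used in this article first, then the flat name;
    # which one works depends on how the bindings were installed.
    for name in ("pyrealsense2.pyrealsense2", "pyrealsense2"):
        try:
            return importlib.import_module(name)
        except ImportError:
            continue
    return None


rs = load_realsense()
if rs is None:
    print("pyrealsense2 not found; check PYTHONPATH and the SDK installation")
else:
    print("pyrealsense2 loaded from", getattr(rs, "__file__", "unknown location"))
```

If the loader prints the "not found" message, the PYTHONPATH setting from step A is the first thing to check.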

Now we can write Python scripts to use the camera directly.

Step-By-Step Example for Image Processing

To get started, let’s take a step-by-step look at the official example.

The first part of this example imports the pyrealsense2 SDK bindings, and the two Python libraries numpy and cv2.

import pyrealsense2.pyrealsense2 as rs

import numpy as np

import cv2

Then, two objects from pyrealsense2 are created. The config object determines various parameters and capabilities of the connected camera. The pipeline object represents the overall configuration and sequence of computer vision modules that are applied to the continuous image stream that the camera creates.

config = rs.config()

pipeline = rs.pipeline()

The example code then determines various characteristics of the connected device and probes for a specific device type. This is done because the official examples should support a wide variety of devices; you can omit these checks in your own programs if you work with a specific model.

pipeline_wrapper = rs.pipeline_wrapper(pipeline)

pipeline_profile = config.resolve(pipeline_wrapper)

device = pipeline_profile.get_device()

device_product_line = str(device.get_info(rs.camera_info.product_line))

found_rgb = False

for s in device.sensors:

    if s.get_info(rs.camera_info.name) == "RGB Camera":

        found_rgb = True

        break

if not found_rgb:

    print("The demo requires Depth camera with Color sensor")

    exit(0)

config.enable_stream(rs.stream.depth, 640, 480, rs.format.z16, 30)

if device_product_line == "L500":

    config.enable_stream(rs.stream.color, 960, 540, rs.format.bgr8, 30)

else:

    config.enable_stream(rs.stream.color, 640, 480, rs.format.bgr8, 30)
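If you only work with the D435, the device probing can be reduced to the two `enable_stream` calls. Here is a minimal sketch of that idea; `configure_d435` is a hypothetical helper, and it takes the `rs` module as a parameter only so that it can be tried without a connected camera.

```python
def configure_d435(rs, config, width=640, height=480, fps=30):
    """Enable depth and color streams with the same resolution and frame rate."""
    # z16: 16-bit depth values; bgr8: 8-bit BGR, the channel order OpenCV uses.
    config.enable_stream(rs.stream.depth, width, height, rs.format.z16, fps)
    config.enable_stream(rs.stream.color, width, height, rs.format.bgr8, fps)
    return config


# Usage with the real bindings (requires the SDK):
#   import pyrealsense2.pyrealsense2 as rs
#   config = configure_d435(rs, rs.config())
```

Using the same resolution for both streams also means that the color image does not need to be resized before it is combined with the depth image later on.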

When the configuration is finished, we can start the pipeline.

pipeline.start(config)

We then use the OpenCV library to process the image stream in several steps:

A. Assemble an image that contains both the color frame and the depth frame.

```python
aligned_image = False
while not aligned_image:
    # Wait for a coherent pair of frames: depth and color
    frames = pipeline.wait_for_frames()
    depth_frame = frames.get_depth_frame()
    color_frame = frames.get_color_frame()
    # Retry until both a depth and a color frame have arrived
    if not depth_frame or not color_frame:
        continue
    aligned_image = True
```
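Once a depth frame has arrived, it can also be queried directly, without any image conversion. The sketch below reads the distance at the center pixel with `get_distance()`; the helper names are my own, and the default depth scale of 0.001 (1 mm per raw unit) is an assumption that you should verify on your device.

```python
def center_distance_m(depth_frame):
    """Distance in meters at the center pixel of a depth frame."""
    x = depth_frame.get_width() // 2
    y = depth_frame.get_height() // 2
    # get_distance() already applies the depth scale and returns meters;
    # 0.0 means the sensor has no valid depth value for that pixel.
    return depth_frame.get_distance(x, y)


def raw_to_meters(raw_value, depth_scale=0.001):
    """Convert one raw z16 depth value to meters.

    depth_scale=0.001 is an assumed default; the actual value can be read
    with device.first_depth_sensor().get_depth_scale().
    """
    return raw_value * depth_scale
```

In the loop above, `center_distance_m(depth_frame)` could be called right after the frame check to print how far away the object in the middle of the picture is.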

B. The image is then converted to an array representation ...

```python
depth_image = np.asanyarray(depth_frame.get_data())
color_image = np.asanyarray(color_frame.get_data())
```

C. ... and to an 8-bit pixel map.

```python
# Apply colormap on depth image (image must be converted to 8-bit per pixel first)
depth_colormap = cv2.applyColorMap(
    cv2.convertScaleAbs(depth_image, alpha=0.03), cv2.COLORMAP_JET
)
```
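To get a feeling for what `alpha=0.03` does, here is a plain Python sketch of the per-pixel arithmetic that `cv2.convertScaleAbs` performs: scale, take the absolute value, and saturate at 255. It is an illustration and not the OpenCV implementation, and the millimeter interpretation assumes a depth unit of 1 mm.

```python
def scale_to_8bit(raw_depth, alpha=0.03):
    """Mimic convertScaleAbs for one value: scale, take abs, saturate at 255."""
    return min(255, round(abs(raw_depth * alpha)))


def saturation_depth(alpha=0.03):
    """Raw depth value from which the 8-bit result stays at 255."""
    return 255 / alpha


# With an assumed depth unit of 1 mm, these are distances from 0 m to 12 m.
for raw in (0, 1000, 4000, 8500, 12000):
    print(raw, "->", scale_to_8bit(raw))
```

With this alpha, everything beyond roughly 8500 raw units maps to the same color, so a larger alpha gives more contrast at close range while a smaller one covers longer distances.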

D. Before using the image representation, a sanity check ensures that the color and depth images have the same resolution. If they differ, the color image is resized to match the depth colormap. Both images are then stacked side by side.

```python
depth_colormap_dim = depth_colormap.shape
color_colormap_dim = color_image.shape

if depth_colormap_dim != color_colormap_dim:
    resized_color_image = cv2.resize(
        color_image,
        dsize=(depth_colormap_dim[1], depth_colormap_dim[0]),
        interpolation=cv2.INTER_AREA,
    )
    images = np.hstack((resized_color_image, depth_colormap))
else:
    images = np.hstack((color_image, depth_colormap))
```
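One detail in this step is easy to get wrong: NumPy shapes are ordered `(height, width, channels)`, while `cv2.resize` expects `dsize` as `(width, height)`. The following sketch isolates that index swap in a hypothetical helper so that it can be checked without a camera.

```python
def resize_target(color_shape, depth_shape):
    """Return the dsize for cv2.resize, or None if the shapes already match.

    NumPy shapes are (height, width, channels); cv2.resize expects
    dsize as (width, height), so the first two entries are swapped.
    """
    if color_shape == depth_shape:
        return None
    height, width = depth_shape[:2]
    return (width, height)


print(resize_target((540, 960, 3), (480, 640, 3)))
print(resize_target((480, 640, 3), (480, 640, 3)))
```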

E. Once the image representation is ready, we can use other OpenCV functions such as saving the image...

```python
print(images)
print(dir(images))
cv2.imwrite("test.png", images)
```

F. ... or even opening a graphical window that shows the camera stream.

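```python
# WINDOW_AUTOSIZE sizes the window to fit the displayed image
cv2.namedWindow("Live Stream", cv2.WINDOW_AUTOSIZE)
cv2.imshow("Live Stream", images)
# Give the GUI 1 ms to draw the frame and process key events
cv2.waitKey(1)
```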

G. The final step in the example is to close the pipeline object.

```python

pipeline.stop()

```
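If an error occurs between starting and stopping the pipeline, the camera may stay claimed by the crashed script. A common way to avoid this is a `try`/`finally` block. The following is a minimal sketch of that pattern; `capture_once` and `handle_frames` are hypothetical names, and the function only relies on the `start`, `wait_for_frames` and `stop` methods used in this article.

```python
def capture_once(pipeline, config, handle_frames):
    """Start the pipeline, pass one frame set to handle_frames, always stop."""
    pipeline.start(config)
    try:
        frames = pipeline.wait_for_frames()
        return handle_frames(frames)
    finally:
        # Runs on success, on exceptions, and on Ctrl+C, so the camera
        # is released even when the processing code fails.
        pipeline.stop()


# Usage with the real bindings (requires a connected camera):
#   result = capture_once(pipeline, config, lambda frames: frames.get_depth_frame())
```

The call to `pipeline.start()` deliberately sits outside the `try` block, so that `stop()` is only called on a pipeline that was actually started.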

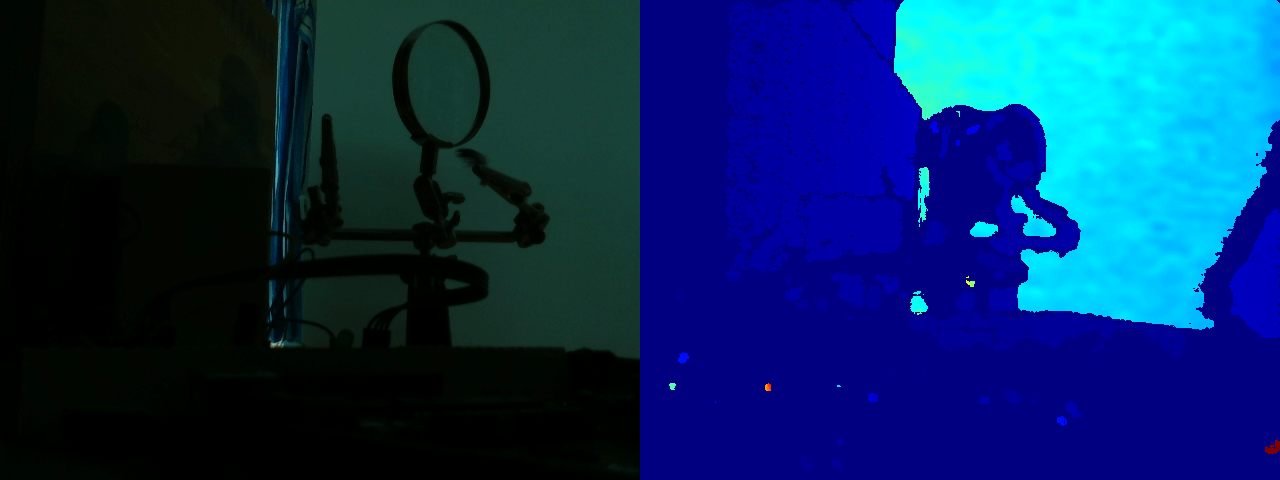

To give you an impression, here is an example of the stored image

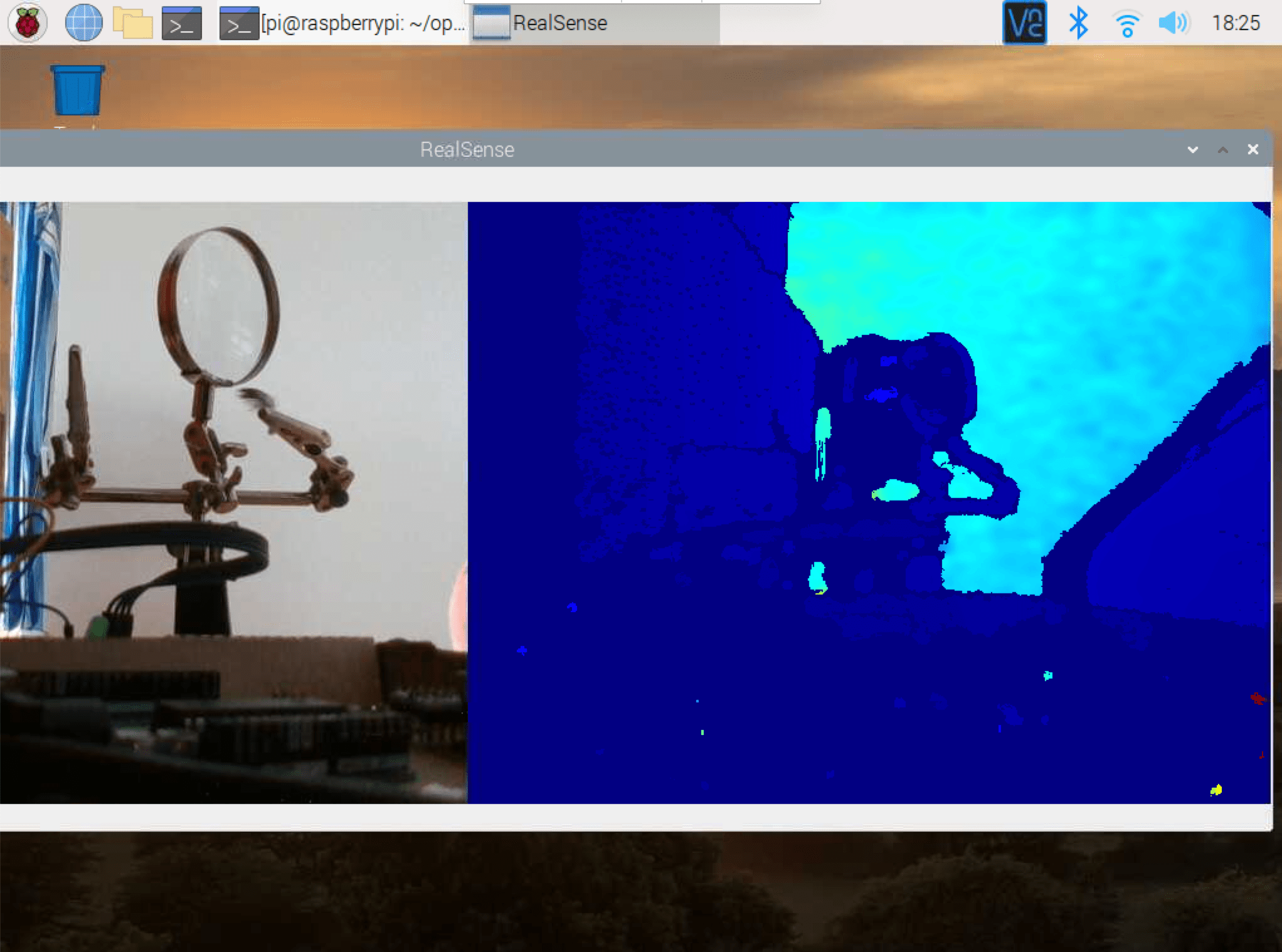

... and here the graphical window with the live video stream:

Conclusion

The librealsense SDK provides Python bindings to configure a connected RealSense camera and start streaming images. Following the official example, this stream can be processed with the Python libraries NumPy and OpenCV. The official example taught us the necessary steps: a) create a Python object that represents the configuration of the device, b) create a Python object that represents the image processing pipeline, c) collect a depth frame and a color frame from the same frame set, d) transform the frames into an array representation, and e) use OpenCV utility functions to save the image or open a graphical window that shows the live video stream.

From here, how do you continue on your own? I suggest spending some time reading the pyrealsense2 bindings to understand the Python objects that the SDK provides, and taking a look at more of the official examples. To truly master the camera, you also need to invest time in learning OpenCV, for example from this written tutorial or the official video course.