A robot can move by processing movement commands, blindly executing them until it hits an obstacle. In contrast, navigation is the ability to make autonomous choices about how to move in order to reach a goal. For this, a robot needs three distinct capabilities: First, to localize itself with respect to its surroundings. Second, to build and parse a map, an abstract representation of its environment. Third, to devise and execute a path plan that leads from its current location to a designated target.

Robot navigation is a broad field with different aspects to consider: the robot's primary movement type and environment (ground, sea, air), its localization, vision and distance sensors, and the concrete algorithms that use sensor data to pursue navigation goals.

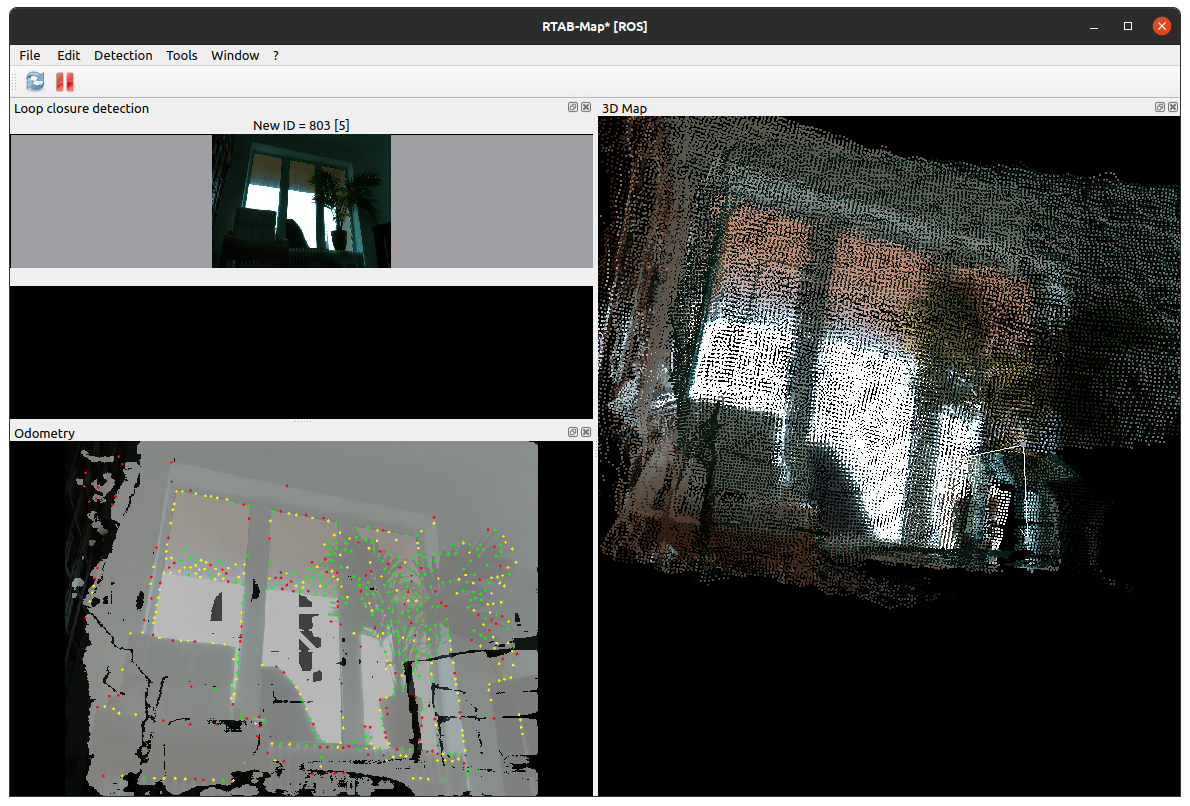

This article helps you in your search for effective navigation with ROS. In essence, you will learn about sensor types and technologies, and then bridge this to the world of ROS with concrete libraries and algorithms. From this, I will explain my method for finding a combination of sensor and library with which a robot can achieve simultaneous localization and mapping.

Sensor Types

A robot’s sensors are its primary means of obtaining a representation of its environment, and are therefore necessary to perform localization and navigation. The different sensors can be separated into four groups: location, vision, 2D distance, and 3D distance.

Location Sensors

Location sensors determine a robot’s absolute position. A very common one is GPS, a satellite-based localization system that determines the current position as a coordinate pair of longitude and latitude values. When navigation happens inside buildings, it is common to add local data sources, for example RFID tags attached to the ceiling or the floor. Starting from an absolute point of reference, an inertial measurement unit (IMU) can be used to continuously update the current position. These systems combine accelerometers and gyroscopes. They report the device's specific force, angular rate, and sometimes its orientation. IMUs are mostly used in aircraft and unmanned aerial vehicles. More recently, GPS devices with an integrated IMU have been produced to keep providing localization when the GPS signal is not available.
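To illustrate how IMU readings can update a position from a known starting point, here is a minimal dead-reckoning sketch. It assumes a planar robot, a fixed sample interval, and idealized readings (forward acceleration and yaw rate); the function name `dead_reckon` and the pose layout are hypothetical and not taken from any ROS package.

```python
import math

def dead_reckon(pose, samples, dt):
    """Integrate IMU samples into a 2D pose (illustrative sketch only).

    pose:    (x, y, heading, speed), heading in radians, speed in m/s
    samples: iterable of (forward_accel, yaw_rate) in m/s^2 and rad/s
    dt:      assumed constant time between samples, in seconds
    """
    x, y, heading, speed = pose
    for forward_accel, yaw_rate in samples:
        heading += yaw_rate * dt       # gyroscope: integrate angular rate
        speed += forward_accel * dt    # accelerometer: integrate acceleration
        x += speed * math.cos(heading) * dt
        y += speed * math.sin(heading) * dt
    return x, y, heading, speed

# Start at the origin, facing along x, moving at 1 m/s with no acceleration
# and no rotation for 100 samples of 10 ms each (one second in total).
start = (0.0, 0.0, 0.0, 1.0)
samples = [(0.0, 0.0)] * 100
final_pose = dead_reckon(start, samples, dt=0.01)
print(final_pose)
```

Because every step adds a little measurement error, the estimate drifts over time, which is why an absolute reference such as GPS is needed to correct it now and then.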

Vision Sensors

Vision sensors capture the surroundings by measuring visible or invisible light and forming a graphical representation. The most common technology is the monocular camera, the classic photographic camera that we all use daily in our smartphones. Combining two or more camera lenses creates a stereo camera. It records the same scene from slightly offset viewpoints, and the differences between these images carry the depth information that lets a viewer perceive a 3-dimensional picture. Concerning the viewpoint, omni-directional cameras have a 360-degree field of view and capture it all in one 2D image. These cameras are often used in cars to record and sense the full surroundings. Beyond the visible spectrum, infrared cameras detect the infrared radiation that objects emit in order to measure their surface temperature, which is then rendered as an image showing the heat distribution.
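A stereo camera can also be used to compute depth: a point appears horizontally shifted between the two images, and this shift (the disparity) shrinks as the point moves further away. The following sketch applies the standard pinhole relation `depth = focal_length * baseline / disparity`. It assumes rectified images, and the numeric values are made up for illustration.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Depth in meters of a point seen by a rectified stereo pair.

    focal_length_px: focal length in pixels (assumed equal for both lenses)
    baseline_m:      distance between the two lenses in meters
    disparity_px:    horizontal pixel shift of the point between the images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px

# Hypothetical camera: 700 px focal length, 12 cm baseline.
near = depth_from_disparity(700.0, 0.12, 35.0)  # large shift, close object
far = depth_from_disparity(700.0, 0.12, 7.0)    # small shift, distant object
print(near, far)
```

With these example values, the first point lies at roughly 2.4 m and the second at roughly 12 m.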

Distance Sensors

The distance sensor category encompasses devices that are used in vehicles and robotics to obtain a continuously updated representation of their environment. The basic types are ultrasonic and sonar sensors. Both emit sound, receive its reflection, and calculate the distance to objects from the time between sending and receiving the signal. They differ in the medium: ultrasonic sensors work in air, while sonar is used mainly underwater. Laser scanners, also called laser distance sensors, use laser signals to determine the distance to objects. There are three different techniques: Time of flight measures the time between emitting a laser pulse and receiving its reflection from an object. Multiple-frequency phase shift sends laser signals modulated at several frequencies, determines their phase shifts, and calculates the distance from all of these measurements. Interferometry is used to track moving objects.
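Both sound-based and laser-based time-of-flight sensors rely on the same arithmetic: the signal travels to the object and back, so the distance is half of speed times round-trip time. The sketch below shows this, plus the single-frequency phase-shift relation `d = c * phase / (4 * pi * f)`. The speed of sound is an assumed value for air at roughly 20 °C, and the function names are hypothetical. A real phase-shift sensor combines several modulation frequencies because one frequency alone is only unambiguous up to `c / (2 * f)`.

```python
import math

SPEED_OF_SOUND_AIR = 343.0      # m/s, assumed value for air at about 20 °C
SPEED_OF_LIGHT = 299_792_458.0  # m/s

def tof_distance(round_trip_s, wave_speed):
    """Distance from a round-trip time: the signal covers the path twice."""
    return wave_speed * round_trip_s / 2.0

def phase_shift_distance(phase_rad, modulation_hz):
    """Distance from the phase shift of one modulation frequency.

    Only unambiguous for distances below c / (2 * modulation_hz).
    """
    return SPEED_OF_LIGHT * phase_rad / (4.0 * math.pi * modulation_hz)

# Ultrasonic echo received after 10 ms.
ultrasonic_m = tof_distance(0.010, SPEED_OF_SOUND_AIR)
# Laser pulse echo received after 20 ns.
laser_m = tof_distance(20e-9, SPEED_OF_LIGHT)
# Quarter-cycle phase shift at 10 MHz modulation.
phase_m = phase_shift_distance(math.pi / 2, 10e6)
print(ultrasonic_m, laser_m, phase_m)
```

The example calls give about 1.7 m for the ultrasonic echo, about 3 m for the laser pulse, and about 3.7 m for the phase measurement.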

3D Sensors

The final sensor type is concerned with producing 3D point clouds that represent the environment. These sensors are used in different fields, from autonomous cars to satellite imaging. Lidar is based on lasers that continuously scan the environment and use techniques similar to laser scanners to measure the distance to all objects around them. RGB-D sensors, also called depth cameras, use vision sensors, and therefore light, to produce 3D point clouds. The most common technique is stereo cameras because they do not need to add extra illumination to the observed environment. Other techniques include structured light, as well as the time-of-flight and interferometry methods that laser scanners also use.
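To make the idea of a point cloud more tangible, here is a small sketch that converts one sweep of range readings into Cartesian points. The parameter names mirror fields of the ROS `sensor_msgs/LaserScan` message (`ranges`, `angle_min`, `angle_increment`, `range_max`), but the function `scan_to_points` itself is hypothetical and uses plain Python values rather than ROS messages. It handles a single scan plane; a 3D lidar would repeat the same conversion for several planes at different vertical angles.

```python
import math

def scan_to_points(ranges, angle_min, angle_increment, range_max=float("inf")):
    """Convert polar range readings of one scan plane into (x, y) points.

    ranges:          distances in meters, one per beam
    angle_min:       angle of the first beam in radians
    angle_increment: angle between neighboring beams in radians
    range_max:       readings beyond this distance are discarded
    """
    points = []
    for i, r in enumerate(ranges):
        # Skip invalid readings (inf/NaN mean "no echo"; zero or negative is noise).
        if not math.isfinite(r) or r <= 0.0 or r > range_max:
            continue
        angle = angle_min + i * angle_increment
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points

# Three beams at 0°, 90° and 180°; the middle beam received no echo.
points = scan_to_points([1.0, float("inf"), 2.0], 0.0, math.pi / 2)
print(points)
```

Skipping the invalid beam keeps its index in the angle calculation, so the remaining points stay at their correct bearings.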

3D Sensor Hardware

In current robotics projects, navigation is commonly enabled by 3D sensors. If you are selecting a sensor and know that you want to work with ROS, check this list of 3D sensors[^1]. The currently available ROS-supported 3D sensors are shown in the following table.

| Name | Type | ROS Lib | Price |
|---|---|---|---|
| Kinect v1 | RGB-D | libfreenect | $125 (used) |
| Kinect v2 | RGB-D | libfreenect2 | $150 (used) |
| Azure Kinect | RGB-D | Azure Kinect | $280 |
| RPLidar A1M8 | LIDAR | rplidar | $100 |
| RPLidar A2 | LIDAR | rplidar | $319 |
| RPLiDAR A1M8 360 | LIDAR | rplidar | $109 |
| Real Sense D415 | RGB-D | RealSense | $175 (used) |
| Real Sense D435 | RGB-D | RealSense | $190 (used) |
| Velodyne LP16 | LIDAR | velodyne | $2000 (used) |
| TFmini-s | LIDAR | TFmini-ROS | $39 (used) |

Conclusion

This article presented the landscape of localization and mapping sensors. We learned about the different groups of sensors (location, vision, 2D distance, and 3D distance) and the technologies behind them. For navigating a robot, 3D sensors are state of the art. Therefore, I showed the currently available ROS-supported 3D sensors.

Footnotes

[^1]: For all other sensors, check ROS-supported sensors.