Natural Language Processing, or NLP for short, is the computer science discipline concerned with processing and transforming texts. It consists of several tasks: tokenization, which separates a text into individual units of meaning; syntactic and semantic analysis, which generate an abstract knowledge representation; and the transformation of this representation back into text for purposes such as translation, question answering, or dialogue.

This article is a brief introduction to natural language processing. It starts with a short historical overview of the discipline, explaining the different periods and the approaches that were used. It continues with an explanation of the various NLP tasks, grouped into coherent areas of interest, and then gives an overview of current NLP programs. Over the course of the next weeks, additional articles will investigate Python libraries and concrete projects for NLP.

Origins of Natural Language Processing

Sources: natural language processing, linguistics, machine learning, artificial intelligence

Natural Language Processing is a computer science discipline that dates back to the 1950s. It is a synthesis of several scientific fields whose goals, paradigms, and approaches are combined and made concrete. These fields are Linguistics, Machine Learning, and Artificial Intelligence. A brief overview of these fields helps to understand how NLP approaches language and how the discipline evolved through different eras, during which advances and insights from the other disciplines were merged in.

Linguistics is the classic study of language and its different aspects, such as syntax, semantics, morphology, and phonology. It tries to create a universal framework of how language is created and how it works. Especially in theoretical linguistics, different types of logic were introduced and researched to reflect how information is represented in, and inferred from, expressions.

Machine Learning is concerned with designing algorithms that process structured or unstructured data to recognize patterns, and to evaluate other or newer data with respect to these patterns. Data is represented numerically, or transformed into a numerical representation, and then different mathematical models and algorithms are applied. Machine learning has several goals: clustering, which detects separate groups of related data; anomaly detection, which finds data that does not belong to known patterns; and regression, which creates functions that approximate complex inputs to generate an output value.

Artificial Intelligence is concerned with the general question of how intelligence can be built or represented by machines. It is a discipline that spans mathematical algorithms, computer programs, data structures, electronics, and robotics. It formulates several research areas, each of which represents a goal in itself. In reasoning and problem solving, programs should work on symbolic representations of concepts and perform computations that support the program’s goal or agenda. In knowledge representation, programs need the capability to represent knowledge about their application domain, for example with ontologies, network-like data structures that connect concepts and express their relationships. Finally, learning and perception are required for automatic and autonomous data processing that increases the capacity for knowledge and deduction, especially by processing data from many different domains such as text, pictures, video, and sound.

If all these disciplines are put together, the following picture emerges. The far-reaching goals of Artificial Intelligence shape the horizon of NLP research: to create programs that understand, process, and reason about language in order to converse with humans or to form knowledge about documents. Machine Learning provides the mathematical foundation with its algorithms that work on numerical data. By representing text as numerical data, texts can be classified, categorized, and compared. To some degree, facts and syllogisms can also be inferred from a text’s numerical representation. Finally, Linguistics contributes the foundational structure of language, separating it into syntax, semantics, and more. This separation shaped early NLP methods tremendously: programs were designed to process texts stepwise by creating a syntactic model, applying heuristics to the tokens, and building a semantic representation that is finally converted to a knowledge representation.

History of Natural Language Processing

Source: natural language processing

NLP progressed in three distinct eras: symbolic, statistical, and neural. Following the above considerations, it’s clear that the goals and methods in each era reflect the body of knowledge of their neighboring disciplines.

The first era is called symbolic NLP. Language was considered through its syntax and semantics. Language expressions are ordered sequences of words, where each word represents a concept and where the fine-grained inner structure and word relationships shape the overall meaning. Reducing a sentence to its individual words, and the words to their lemma, the uninflected base form of a word, expresses the content of an expression. Yet only by considering syntax and grammar does the higher-order relationship of words emerge and a sentence’s true meaning become apparent. NLP systems of this era were concerned with creating complex rules that both process and represent language in its syntactic and semantic form. These rule-based systems were, and still are, used for several NLP tasks.

The second era is called statistical NLP. The increasing availability of computers in both research and industry, as well as an ever-increasing amount of machine-readable data, resulted in statistical methods being proposed to process language. With this increased processing power, many texts and documents could be processed in parallel, and advanced machine learning algorithms were then applied to detect patterns in language. Typical machine learning models from this era are Bayesian networks, hidden Markov models, and support vector machines, which are still used for NLP tasks. Applying statistics led to several insights about the nature of language that even reflected back into theoretical linguistics. This culminated in expert systems, concerned with decision making, and knowledge systems, concerned with representing and reasoning about symbolic information.

The third era is called neural NLP, for its use of vast and complex neural networks. A neural network is a complex structure consisting of individual neurons, also called perceptrons. The concept of a perceptron can be traced back to the 1950s, when it was used to model functions that perform simple input-output transformations. Just as in the second era, the increase in computing power, and especially in graphics processing units that are capable of performing mathematical operations on huge amounts of data, led to ever more complex networks. Neural networks were used in difficult machine learning tasks because they showed a promising characteristic: instead of requiring manually created features, the patterns in the input data on which an algorithm works, neural networks learned features by themselves. The more data they were trained on, the more robust the features and the results became. When applied to numerical representations of texts, the same effects were observed. Networks learned the structure of language and very quickly surpassed established benchmarks in several NLP tasks. Today, neural network-based NLP is becoming the dominant form of NLP research.
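
To make the idea of a single neuron as a simple input-output transformation more tangible, here is a minimal sketch of a perceptron that learns the logical AND function. The function names, the learning rate, and the number of epochs are illustrative assumptions, not part of any particular library.

```python
def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum of the inputs exceeds zero, else 0."""
    activation = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if activation > 0 else 0


def train(samples, epochs=10, learning_rate=1):
    """Classic perceptron rule: nudge weights and bias by the prediction error."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Truth table of the logical AND function: (inputs, expected output).
and_gate = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train(and_gate)
print([predict(weights, bias, inputs) for inputs, _ in and_gate])
```

Nobody writes the rule for AND by hand here; the weights are adjusted from examples. Modern networks stack many such units, but this learning-from-data principle is the same one the paragraph above describes.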

Goals of Natural Language Processing

NLP Tasks

Sources: Harnessing the Power of LLMs in Practice: A Survey on ChatGPT and Beyond, Natural language processing: state of the art, current trends and challenges, A Survey of the Usages of Deep Learning for Natural Language Processing, Wikipedia Natural Language Processing

Combining scientific papers, Wikipedia, and commonplace NLP projects in Python, the list of NLP tasks is quite astonishing. The following list is not exhaustive; it focuses on those tasks that are supported by Python NLP libraries, and its grouping differs from that of Wikipedia.

Two main groups need to be distinguished: core NLP tasks, structured into segments of classical linguistics that operate mostly on tokens and sentences, and advanced NLP tasks, which combine the classical NLP tasks to operate on and generate arbitrary amounts of text.

Core NLP tasks

- Text Processing - Determine and analyze the individual tokens of a sentence

- Tokenization: Separate a sentence into individual tokens or groups of tokens called chunks, e.g. by checking the punctuation of the raw text, or using rules/heuristics to group related words. Also called chunking and word segmentation.

- Lemmatization: Identify the lemma, a word’s core form, by using a dictionary of the language

- Stemming: Reduce inflected words to their core form by applying rules and heuristics

- Text Syntax - Identify and represent the syntax of a sentence

- Parsing: Determine the grammatical structure of a sentence, with either dependency parsing, which considers the relationships between words, or constituency parsing, which breaks the sentence down into nested phrases

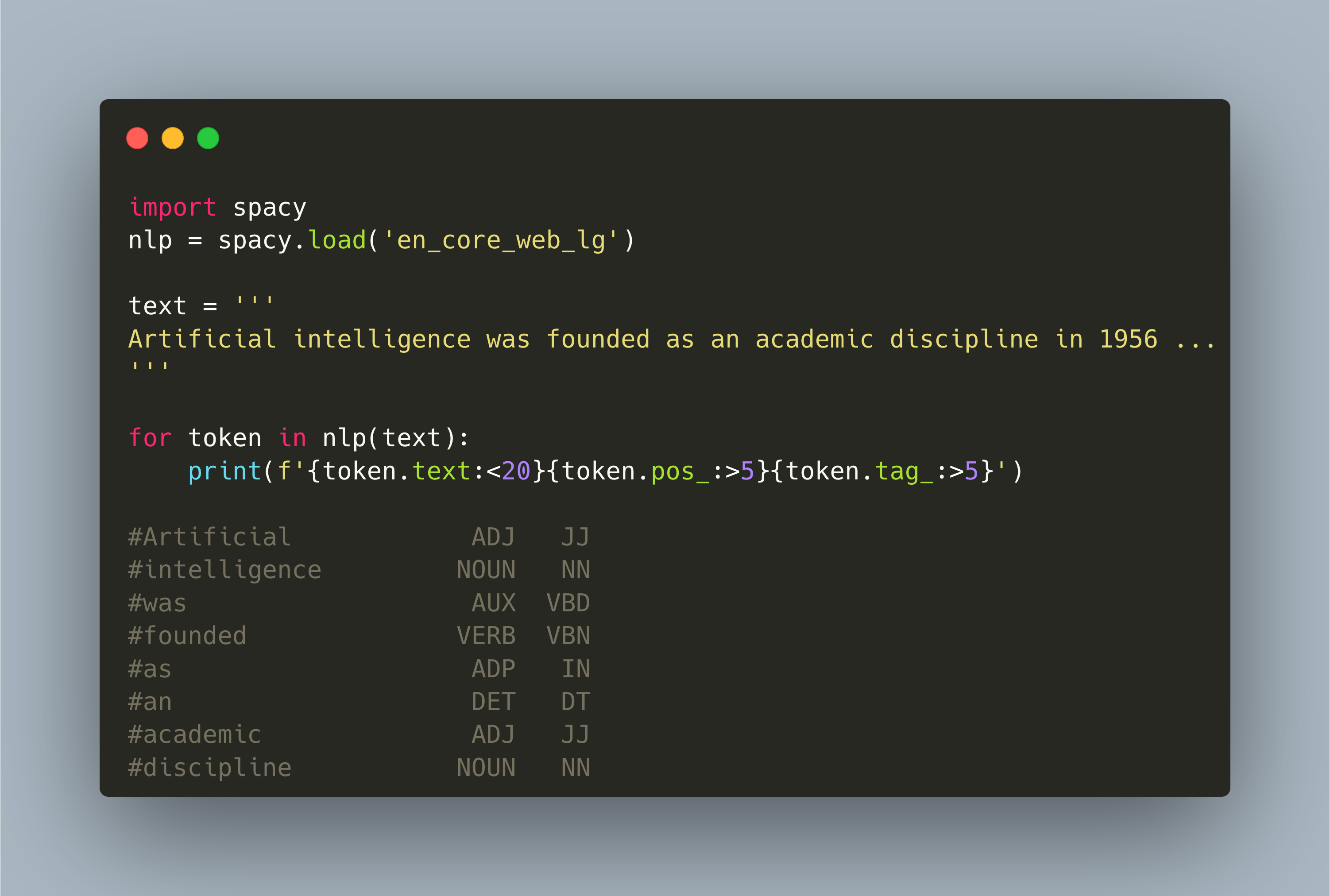

- Part-of-speech tagging: Tag the tokens in a sentence as nouns, verbs, adjectives, punctuation, etc.

- Text Semantics - Identify the meanings of words inside a sentence

- Named Entity Recognition (NER): Recognize entities, such as persons, cities, or titles

- Word sense disambiguation: Identify and resolve the synonymy, polysemy, hyponymy, and hypernymy characteristics of words in the given context

- Semantic Role Labeling: Identify the roles of nouns in the sentence, such as the agents that perform an action, the theme or location the actions happen in, and more. Also see semantic role

- Document Semantics - Identify the meaning of paragraphs and complete texts

- Text classification: Define distinct categories to which a text belongs, used for example in spam filtering.

- Topic Modelling: Identify topics of different documents automatically.

- Sentiment analysis: Calculate the polarity of text as being positive, neutral, or negative about a topic

- Toxicity recognition: A nuanced interpretation of a text that identifies negative aspects of language use.
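
To give one of the document-level tasks above a concrete shape, here is a minimal sketch of sentiment analysis based on a word lexicon. The two word sets are a hypothetical toy lexicon invented for this illustration; real systems rely on much larger curated resources or on learned models.

```python
import re

# Hypothetical toy lexicon, for illustration only.
POSITIVE = {"good", "great", "love", "excellent", "happy"}
NEGATIVE = {"bad", "terrible", "hate", "awful", "sad"}


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def polarity(text):
    """Classify a text as positive, negative, or neutral by counting lexicon hits."""
    tokens = tokenize(text)
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(polarity("I love this great library"))     # positive
print(polarity("The documentation is awful"))    # negative
print(polarity("The package has ten modules"))   # neutral
```

A purely lexical approach like this ignores negation and context ("not good" would count as positive), which is one reason why statistical and neural methods took over this task.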

Advanced NLP Tasks

- Text Generation - Create meaningful texts for an intended topic

- Spelling Correction: Correct the spelling of individual words inside a sentence

- Text Summarization: Compress a document to its key ideas, which can be further specified as either generic or query based

- Machine translation: Translate a text from one natural language to another

- Question Answering: From a given text, identify the relevant parts that pertain to a question (extractive model), or generate a new text that structures the contained facts into a new statement (generative model). A further distinction is whether the question is closed to a domain, or open domain and therefore encompasses all external contexts as well.

- Knowledge & Inference - Extract facts from a text and conduct logical inference to discover new facts.

- Open Question / Reasoning: In an open context, identify and resolve questions.

- Information Extraction: From a given text, identify information relevant to external criteria, then store this information in an external format

- Natural Language Understanding - Define an abstract representation of a language as a whole

- Morphology: The rules for how words change to represent temporal, gender, or inter-word relationships inside a group of words

- Grammar: The rules for how to form coherent, structured, and meaningful groups of words that communicate a meaning

- Semantics: inherent meaning of words, the concepts that they represent.

- Language Modelling: Determine the probability of the next word given the previous words in a group of words, a sentence, or even groups of paragraphs
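
Language modelling, the last item above, can be sketched in a few lines. The following example is a minimal bigram model that estimates the probability of the next word from a single preceding word, using relative frequencies without smoothing. The tiny corpus and the function names are assumptions made for this illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(sentences):
    """Count how often each word follows each other word."""
    follow_counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            follow_counts[prev][nxt] += 1
    return follow_counts


def next_word_probability(model, prev, nxt):
    """Estimate P(nxt | prev) as a relative frequency (no smoothing)."""
    total = sum(model[prev].values())
    return model[prev][nxt] / total if total else 0.0


# Toy corpus, invented for this example.
corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigram_model(corpus)
print(next_word_probability(model, "the", "cat"))  # 2 of the 6 words after "the"
print(next_word_probability(model, "sat", "on"))   # "on" always follows "sat" here
```

Neural language models replace these counts with learned parameters and condition on far longer contexts, but the quantity they estimate is the same.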

The Natural Language Processing Programming Pipeline

Typical NLP projects follow the same preprocessing and transformation steps that make texts applicable for algorithms. These steps are preprocessing, statistical/semantic information gathering, numerical representation transformation, and target task application.

During preprocessing, the text is separated into meaningful units. Often, these units are reduced further to lower the amount of information handled by downstream tasks.

- Tokenizing: Separate sentences into individual tokens.

- Stemming: Remove inflectional endings of the tokens and provide the base word based on rulesets.

- Lemmatization: Remove inflectional endings of the tokens and provide the base word based on a dictionary.

- Chunking: Identify related groups of words that form a semantic unit, for example recognizing the first, middle, and family name of a person instead of three individual nouns.
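
The four preprocessing steps can be sketched with the standard library alone. This is a deliberately simplified illustration: the suffix rules, the mini-dictionary, and the capitalization heuristic are assumptions made for the example and are far cruder than what NLP libraries provide.

```python
import re

# Hypothetical mini-dictionary; real lemmatizers ship a full lexicon.
LEMMA_DICT = {"ran": "run", "mice": "mouse", "better": "good", "studies": "study"}
# Simplified suffix rules for stemming, tried in this order.
SUFFIXES = ("ing", "ies", "ed", "es", "s")


def tokenize(sentence):
    """Split a sentence into word tokens, dropping punctuation."""
    return re.findall(r"\w+", sentence)


def stem(token):
    """Rule-based: strip the first matching suffix if at least 3 letters remain."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def lemmatize(token):
    """Dictionary-based: look up the base form, fall back to the token itself."""
    return LEMMA_DICT.get(token, token)


def chunk_capitalized(tokens):
    """Crude chunker: merge runs of capitalized tokens into one unit."""
    chunks, current = [], []
    for token in tokens:
        if token[:1].isupper():
            current.append(token)
            continue
        if current:
            chunks.append(" ".join(current))
            current = []
        chunks.append(token)
    if current:
        chunks.append(" ".join(current))
    return chunks


tokens = [t.lower() for t in tokenize("The mice ran while she studies parsing")]
print(tokens)
print([stem(t) for t in tokens])       # "studies" becomes "stud", "parsing" becomes "pars"
print([lemmatize(t) for t in tokens])  # "mice" becomes "mouse", "ran" becomes "run"
print(chunk_capitalized(tokenize("we met John Fitzgerald Kennedy yesterday")))
```

The output shows the trade-off between the two reduction steps: rule-based stemming is cheap but can produce non-words such as "stud", while dictionary-based lemmatization returns real words but only for entries it knows.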

To apply any NLP algorithm or machine learning model, or to input text into a neural network, the text needs to be converted to a numerical representation. Typically, this representation considers not only an individual text, but a group of related texts called the corpus. For a corpus of texts, the following techniques can be used for generating a numerical representation:

- Bag of Words: A data structure consisting of word/number-of-occurrences pairs for each word.

- One-Hot Encoding: A binary indicator, for each word, of whether it occurs in a document or not.

- TF-IDF: A metric that combines the frequency of a word within a text with the inverse frequency of that word across the documents of the overall corpus, balancing the weight of very frequently and seldom used words.

- Word Embeddings: A multi-dimensional vector representing the relative meaning of a word, produced by processing large amounts of text and analyzing the occurrences of related words. Word embeddings are very effective tools to measure the similarity of words. This idea can be scaled up to build vectors for sentences, paragraphs, or even whole documents, to find similarities at different levels in the corpus.
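
The first three representations can be computed by hand for a toy corpus, which helps to see what the numbers mean. The sketch below uses the plain textbook form of TF-IDF (term frequency times the logarithm of the inverse document frequency); libraries typically apply smoothed or normalized variants, so their values will differ. The corpus and function names are assumptions for this example.

```python
import math
from collections import Counter

# Toy corpus, invented for this example.
corpus = [
    "the cat sat on the mat",
    "the dog chased the cat",
    "dogs and cats are pets",
]
docs = [text.split() for text in corpus]
vocabulary = sorted({word for doc in docs for word in doc})


def bag_of_words(doc):
    """Map each word to its number of occurrences in the document."""
    return Counter(doc)


def one_hot(doc):
    """For each vocabulary word: 1 if it occurs in the document, else 0."""
    present = set(doc)
    return [1 if word in present else 0 for word in vocabulary]


def tf_idf(doc):
    """For each vocabulary word: term frequency times inverse document frequency."""
    counts = Counter(doc)
    vector = []
    for word in vocabulary:
        tf = counts[word] / len(doc)
        df = sum(1 for d in docs if word in d)  # documents containing the word
        idf = math.log(len(docs) / df)
        vector.append(tf * idf)
    return vector


print(bag_of_words(docs[0]))
print(one_hot(docs[0]))
weights = dict(zip(vocabulary, tf_idf(docs[0])))
print(weights["the"], weights["mat"])
```

In the first document, "the" occurs twice and "mat" only once, yet "mat" receives the higher TF-IDF weight because it appears in no other document. This is the balancing effect described in the list above.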

And from here on, the classical NLP tasks, starting with syntax and semantics, and the advanced NLP tasks are feasible.
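
As one small illustration of what becomes possible once texts are numbers, the following sketch compares documents with cosine similarity over simple word-count vectors. The example texts are invented; with word or document embeddings in place of count vectors, the same computation measures semantic rather than purely lexical similarity.

```python
import math
from collections import Counter


def count_vector(text, vocabulary):
    """Represent a text as word counts over a shared vocabulary."""
    counts = Counter(text.lower().split())
    return [counts[word] for word in vocabulary]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Toy texts, invented for this example.
texts = [
    "the cat sat on the mat",
    "the cat lay on the mat",
    "stock prices fell sharply today",
]
vocabulary = sorted({word for text in texts for word in text.lower().split()})
vectors = [count_vector(text, vocabulary) for text in texts]

print(cosine_similarity(vectors[0], vectors[1]))  # high: the texts share most words
print(cosine_similarity(vectors[0], vectors[2]))  # 0.0: no words in common
```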

Summary

Natural Language Processing is a computer science discipline that shares the heritage, goals, and methods of linguistics, machine learning, and artificial intelligence. Over the three eras of symbolic, statistical, and neural processing, a complex understanding and an extensive list of NLP tasks were developed. Today, syntactic and semantic analysis has switched from explicitly crafted rule systems to learned feature representations in neural networks. From this, advanced NLP tasks like text translation, question answering, reasoning, and finally text generation become feasible. In this article, you learned about the eras, the NLP tasks, and the steps of a typical NLP project. The next article shows concrete Python libraries for various NLP tasks.