Kubernetes service accounts, combined with roles and role bindings, define fine-grained access to any Kubernetes resource, including namespaces, pods, and log data. In this article, I explain how service accounts work by running curl statements against the API. It was a great educational experience! Read on to see what I learned.

In my last article, I showed how to develop the KubeLogExporter, a tool that collects log data from a set of pods. So far, the exporter relies on full access to the cluster by using the local .kubeconfig file. If we want the exporter to run as a cron job inside the cluster, it needs suitable access rights. Originally, I wanted to write only about the cron job implementation, but I found that investigating how Kubernetes access rights work was very educational. That’s why it became the article that you are reading now.

Essential Kubernetes Concepts

When you run a Pod inside a Kubernetes cluster, several configurations and security settings are already applied by default. Access rights to the rich Kubernetes API are determined by three essential resources: ServiceAccount, Role, and RoleBinding.

Let’s understand these concepts by considering how the cron job that reads pod logs works. When the job runs, it needs read access to namespaces and pods. This access is defined in a Role or ClusterRole. A Role is limited to one namespace, and we want to read pods in any namespace, so we will use a ClusterRole. When a pod is created, it is given the default service account and the default service account token to access the K8S API. However, this account does not have the required access rights, so we need to define a custom ServiceAccount. The final piece is the RoleBinding or ClusterRoleBinding: it connects the ClusterRole with the ServiceAccount.

K8S API: Direct Access

To see how those concepts are applied when working with Kubernetes, I followed this excellent article in which the API is accessed directly with curl.

Let’s start simple by creating the api-explorer pod. Write the api-explorer-pod.yaml file with the following content.

apiVersion: v1
kind: Pod
metadata:
  name: api-explorer
spec:
  containers:
    - name: alpine
      image: alpine
      args: ['sleep', '3600']

Then we create the pod and wait until it is started.

> kubectl create -f api-explorer-pod.yaml

pod/api-explorer created

Then we jump into the container and install the curl package.

> kubectl exec -it api-explorer -- sh

> apk add curl

To access the Kubernetes API, we always need to send a valid security token. This token is stored inside the pod at the location /run/secrets/kubernetes.io/serviceaccount/token. With this token, we can make the API request.

> TOKEN=$(cat /run/secrets/kubernetes.io/serviceaccount/token)

> curl -H "Authorization: Bearer $TOKEN" https://kubernetes/api/v1/namespaces/default/pods/ --insecure

{
  "kind": "Status",
  "apiVersion": "v1",
  "metadata": {
  },
  "status": "Failure",
  "message": "pods is forbidden: User \"system:serviceaccount:default:default\" cannot list resource \"pods\" in API group \"\" in the namespace \"default\"",
  "reason": "Forbidden",
  "details": {
    "kind": "pods"
  },
  "code": 403
}

But as we see, the request is rejected with a 403: the default service account, identified as system:serviceaccount:default:default, does not have the right to list pods.
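A side note on the request itself: the directory that holds the token also contains the cluster's CA certificate (ca.crt), so the --insecure flag can be replaced by --cacert. The following is a minimal sketch under assumptions, not a definitive helper. The function name api_get and the override variables SA_DIR, API_SERVER, and CURL are hypothetical names introduced here, and https://kubernetes.default.svc is assumed to resolve to the API server just like the shorter https://kubernetes used in this article.

```shell
#!/bin/sh
# Documented mount point of the service account files inside a pod.
# SA_DIR, API_SERVER and CURL are hypothetical overrides (assumptions),
# handy for trying the helper outside of a pod.
SA_DIR="${SA_DIR:-/var/run/secrets/kubernetes.io/serviceaccount}"
API_SERVER="${API_SERVER:-https://kubernetes.default.svc}"
CURL="${CURL:-curl}"

# api_get PATH
# Sends a GET request for PATH to the API server, authenticating with the
# mounted token and verifying the server certificate against ca.crt.
api_get() {
  token=$(cat "$SA_DIR/token") || return 1
  "$CURL" --silent --cacert "$SA_DIR/ca.crt" \
    -H "Authorization: Bearer $token" \
    "$API_SERVER$1"
}
```

Inside the pod, api_get /api/v1/namespaces/default/pods/ would send the same request as the curl command above, and with the default service account it should still be answered with the 403 status.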

Defining a Custom Service Account

So we need to have a properly configured ServiceAccount that grants us a token with which the Kubernetes API can be accessed.

Create the file pod-read-access-service-account.yaml and put the ServiceAccount definition on top. This resource is basically only metadata.

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: log-exporter-sa
  namespace: default
  labels:
    app: log-exporter
---

The next thing is the ClusterRole definition. Inside its rules block, we define which apiGroups and resources we want to access. The core API group is denoted by "", and under resources we list pods, pods/log, and namespaces. Finally, the verbs determine which actions we want to apply to the resources: in our case, it's get and list.

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: log-exporter-cr
  labels:
    app: log-exporter
rules:
  - apiGroups:
      - ''
    resources:
      - pods
      - pods/log
      - namespaces
    verbs:
      - get
      - list
---

Finally, we create the ClusterRoleBinding resource to connect the ServiceAccount and the ClusterRole.

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: log-exporter-rb
roleRef:
  kind: ClusterRole
  name: log-exporter-cr
  apiGroup: rbac.authorization.k8s.io
subjects:
  - kind: ServiceAccount
    name: log-exporter-sa
    namespace: default
---

Now we create all resources.

> kubectl create -f pod-read-access-service-account.yaml

serviceaccount/log-exporter-sa created
clusterrole.rbac.authorization.k8s.io/log-exporter-cr created
clusterrolebinding.rbac.authorization.k8s.io/log-exporter-rb created

Some more details: As you see, the ServiceAccount is explicitly created in the default namespace. Be careful about the ClusterRoleBinding as it needs to reference this ServiceAccount in its defined namespace as well, or it will not work correctly.
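To check that the binding really references the ServiceAccount in the right namespace, the API server can be asked directly with kubectl auth can-i and impersonation. The sketch below is a hedged example: sa_user, can_sa, and the KUBECTL override variable are hypothetical names introduced here, and it assumes that kubectl runs from your workstation with a user that is allowed to impersonate service accounts (a cluster admin usually is).

```shell
#!/bin/sh
# KUBECTL is a hypothetical override (assumption) for the kubectl binary.
KUBECTL="${KUBECTL:-kubectl}"

# sa_user NAMESPACE NAME
# Prints the user name under which a service account authenticates,
# the same form that appears in the 403 message of the API.
sa_user() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# can_sa SA_NAMESPACE SA_NAME VERB RESOURCE TARGET_NAMESPACE
# Asks the API server whether the service account may perform VERB on
# RESOURCE in TARGET_NAMESPACE; kubectl answers with "yes" or "no".
can_sa() {
  "$KUBECTL" auth can-i "$3" "$4" --as="$(sa_user "$1" "$2")" -n "$5"
}
```

With the resources from this section in place, can_sa default log-exporter-sa list pods kube-system should answer yes, while the same check for the default service account should answer no.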

K8S API: Access with Custom Service Account

To use the newly created ServiceAccount, we configure the pod to run with it. Going back to the api-explorer-pod.yaml file, we add the new configuration item spec.serviceAccountName.

apiVersion: v1
kind: Pod
metadata:
  name: api-explorer
spec:
  serviceAccountName: log-exporter-sa
  containers:
    - name: alpine
      image: alpine
      args: ['sleep', '3600']

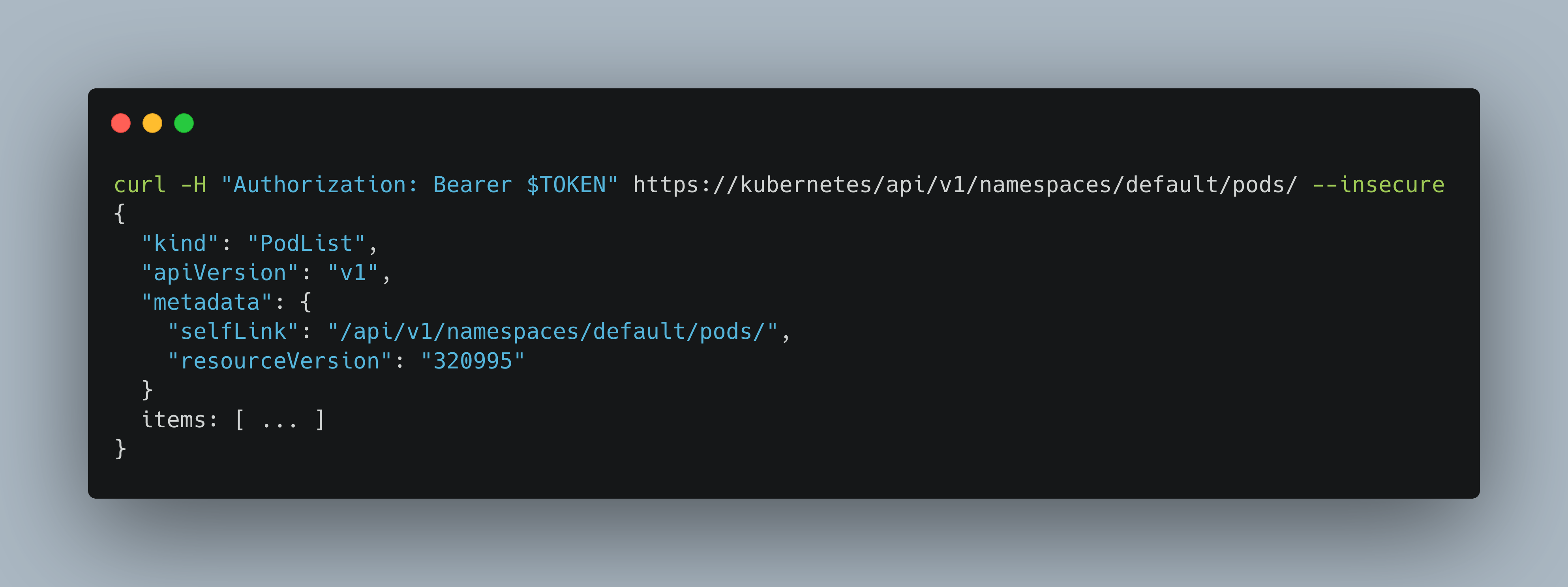

Once a pod with this configuration is running, we jump back into the container, grab the new token, and repeat the request. This time it works!

> curl -H "Authorization: Bearer $TOKEN" https://kubernetes/api/v1/namespaces/default/pods/ --insecure

{
  "kind": "PodList",
  "apiVersion": "v1",
  "metadata": {
    "selfLink": "/api/v1/namespaces/default/pods/",
    "resourceVersion": "320995"
  },
  "items": [
    {
      "metadata": {
        "name": "api-explorer2",
        "namespace": "default",
        "selfLink": "/api/v1/namespaces/default/pods/api-explorer2",
        "uid": "343aaf7e-1be5-45da-aadb-e83ee329a7fd",
        "resourceVersion": "320976",
        "creationTimestamp": "2020-05-24T10:16:58Z"
      },
      ...

Now, as the final proof of concept, let’s try to read logs from a different pod in a different namespace. We pick the coredns pod from the kube-system namespace.

> kubectl get pods -n kube-system

NAME                             READY   STATUS    RESTARTS   AGE
metrics-server-6d684c7b5-6ww29   1/1     Running   7          8d
coredns-d798c9dd-pdswq           1/1     Running   7          8d

The URL to access a pod's log is composed like this: /api/v1/namespaces/{namespace}/pods/{name}/log. So, we need the exact namespace and the exact pod name for this request to work. Get back into the api-explorer pod and access the log files.

> curl -H "Authorization: Bearer $TOKEN" https://kubernetes/api/v1/namespaces/kube-system/pods/coredns-d798c9dd-pdswq/log --insecure

[INFO] plugin/reload: Running configuration MD5 = 4665410bf21c8b272fcfd562c482cb82
   ______                ____  _   _______
  / ____/___  ________  / __ \/ | / / ___/   ~ CoreDNS-1.6.3
 / /   / __ \/ ___/ _ \/ / / /  |/ /\__ \    ~ linux/arm, go1.12.9, 37b9550
/ /___/ /_/ / /  /  __/ /_/ / /|  /___/ /
\____/\____/_/   \___/_____/_/ |_//____/

We are happy to see that it works as intended.
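The URL pattern for pod logs can be wrapped in a small helper, which is handy once several pods need to be queried. This is only a sketch: pod_log_path is a hypothetical name introduced here. The optional tailLines query parameter of the log endpoint limits the response to the last lines of the log.

```shell
#!/bin/sh
# pod_log_path NAMESPACE POD [TAIL_LINES]
# Prints the API path of a pod's log. When TAIL_LINES is given, the
# tailLines query parameter is appended to limit the output.
pod_log_path() {
  log_path="/api/v1/namespaces/$1/pods/$2/log"
  if [ -n "${3:-}" ]; then
    log_path="$log_path?tailLines=$3"
  fi
  printf '%s\n' "$log_path"
}
```

Inside the pod, the result can be appended to the API server address, for example curl -H "Authorization: Bearer $TOKEN" "https://kubernetes$(pod_log_path kube-system coredns-d798c9dd-pdswq 20)" --insecure.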

Conclusion

In this article, we learned about the essential Kubernetes resources that enable access to pods and their log files in any namespace. A ClusterRole defines which resources can be accessed and which actions are allowed on them. These access rights are bound to a ServiceAccount with a ClusterRoleBinding. Then we assign this ServiceAccount to a Pod to give it the access rights. We showed how the Kubernetes API can be accessed from within a pod by using the curl command. In the next article, we will see how to implement a cron job that uses this service account to export the log files.