The LangChain library spearheaded agent development with LLMs. When running an LLM in a continuous loop, and providing the capability to browse external data stores and a chat history, context-aware agents can be created. These agents repeatedly questioning their output until a solution to a given task is found. This opened the door for creative applications, like automatically accessing web pages for making reservations or ordering products and services, and iteratively fact-checking information.

This article shows how to build a chat agent that runs locally, has access to Wikipedia for fact checking, and remembers past interactions through a chat history. You will learn how to combine ollama for running an LLM and langchain for the agent definition, as well as custom Python scripts for the tools.

The technical context for this article is Python v3.11, langchain v0.1.5 and ollama v0.1.29. All examples should work with a newer library version as well.

Installation

Ollama comes as a os-specific binary file and CLI. Head to the ollama installation page, perform the installation, and then use the ollama command to load a model.

The other libraries can be installed with Python pip.

pip install langchain@0.1.5 langchainhub@0.1.14

The LangChain library is in constant evolution. In a recent article, I used the new LangChain expression language to create a pipeline-like invocation of prompts, LLMs and output parser. However, adding history to this, and invoking agents, is a specific feature combination without a representative example in the documentation. Although I found an example how to add memory in LHCL following the excellent guide in A Complete LangChain Guide, section "With Memory and Returning Source Documents", I was surprised that you need to handle the low-level abstractions manually, defining a memory object, populating it with responses, and manually crafting a prompt that reflect the chat history. Sadly, this example contradicts the easy chaining aspect of this composition style, and I decided to use the object-oriented style of creating agents.

Overview

The complete application will be developed in 4 different steps:

- Setup the ollama integration to run LLMs

- Implement the Wikipedia search tool

- Add the chat history

- Define the full agent with tools and history

Following sections detail each of these steps.

Step 1: Ollama Integration

LangChain can access a running ollama LLM via its exposed API. To use it, define an instance and name the model that is being served by ollama.

from langchain_community.llms import Ollama

llm = Ollama(model="llama2")

res = llm.invoke("What is the main attraction in Berlin?")

print(res)

Initially, I encountered the Python error "Failed to resolve 'localhost'". This error relates to the urllib3, which needs an explicit entry in the /etc/hosts file declaring 127.0.0.1 localhost.

To check that the ollama is called via LangChain, you can live-stream its logfile:

tail -f ~/.ollama/logs/server.log

# {"function":"launch_slot_with_data","level":"INFO","line":833,"msg":"slot is processing task","slot_id":0,"task_id":131,"tid":"0x700013a6e000","timestamp":1710681045}

#{"function":"print_timings","level":"INFO","line":287,"msg":" total time = 163223.70 ms","slot_id":0,"t_prompt_processing":218.584,"t_token_generation":163005.116,"t_total":163223.7,"task_id":131,"tid":"0x700013a6e000","timestamp":1710681208}

# [GIN] 2024/03/17 - 14:13:28 | 200 | 2m43s | 127.0.0.1 | POST "/api/generate"

Step 2: Wikipedia Search Tool

In LangChain, a tool is any Python function wrapped in a specific annotation that defines the tool name, its input and output data types, and other options.

The following sketch shows a tool that fetches a Wikipedia article in Wiki-Text format and returns the text verbatim. Input and output values are strings.

import requests

from langchain.pydantic_v1 import BaseModel, Field

from langchain.tools import tool

class WikipediaArticleExporter(BaseModel):

article: str = Field(description="The canonical name of the Wikipedia article")

@tool(

"wikipedia_text_exporter", args_schema=WikipediaArticleExporter, return_direct=False

)

def wikipedia_text_exporter(article: str) -> str:

'''Fetches the moste recent revision for a Wikipedia article in WikiText format.'''

url = f"https://en.wikipedia.org/w/api.php?action=parse&page={article}&prop=wikitext&formatversion=2"

result = requests.get(url).text

# from the result text, cut everything before "wikitext", and after the closing marks

start = result.find('"wikitext": "\{\{')

end = result.find('\}</pre></div></div><!--esi')

return {"text": str(result[start + 12 : end - 30])}

Result:

WikipediaArticleExporter("NASA")

> "The [[Ranger Program]] was started in the 1950s as a response to Soviet lunar exploration but was generally considered to be a failure. The [[Lunar Orbiter program]] had greater success, mapping the surface in preparation for Apollo landings and measured [[Selenography]], conducted meteoroid detection, and measured radiation levels. The [[Surveyor program]] conducted uncrewed lunar landings and takeoffs, as well as taking surface and regolith observations."

...

Step 3: Chat History

LangChain is versatile regarding its intended application environment, and therefore several types of chat history are supported. These types reflect pure ephemeral, in-memory histories to strategies that keep the most recent messages verbatim and finally complete persisted histories.

Chat history types are as follows:

ConversationBufferandConversationBufferMemory: An in-memory store, optionally capped at a maximum number of chats.Entities: A knowledge extraction tool that extracts facts about entities. Requires a LLM that is tasked with determining which entities are mentioned in a conversation.Conversation Knowledge Graph: Another extraction tool which stores any recognized facts in a knowledge graph. Also requires an LLM for the actual knowledge extraction.ConversationSummaryMemoryandConversationSummaryBuffer: An advanced knowledge repository which condenses information about a conversation. The buffer version keeps most recent interactions verbatim and only condenses the older interactions.VectorStoreRetrieverMemory: All interactions are stored in a memory store, and from this, the most relevant past messages are used to determine the relevant context history.

For the purpose of a local chat agent, the in-memory ConversationBufferMemory with 100 entries is sufficient.

from langchain_community.llms import Ollama

from langchain.memory import ConversationBufferWindowMemory

llm = Ollama(model="llama2")

from langchain.chains import ConversationChain

chain = ConversationChain(

llm=llm,

memory=ConversationBufferWindowMemory(k=10),

verbose=True

)

Let’s see this history in action by asking about capital cities:

res = chain.invoke("What is the capital of Germany?")

print(res)

res = chain.invoke("What is the capital of Britain?")

print(res)

res = chain.invoke("About which capital cities did I ask?")

print(res)

The type of chain determines the system prompt, which influences how the LLM answers. For a conversation chain, the system prompt is this:

> Entering new ConversationChain chain...

Prompt after formatting:

The following is a friendly conversation between a human and an AI. The AI is talkative and provides lots of specific details from its context. If the AI does not know the answer to a question, it truthfully says it does not know.

This leads to a very informal answers, but it shows that the history object can be processed by this agent, nevertheless.

Human: "What is the capital of Germany?"

AI: "Ah, a question about geography! The capital of Germany is Berlin, my friend! I'm glad you asked me that. Berlin is not only the capital but also the largest city in Germany, with a population of around 5.5 million people. It's a vibrant and culturally rich city, known for its historical landmarks like the Brandenburg Gate and the Berlin Wall. Did you know that Berlin has over 170 museums and galleries? And let me tell you, the food scene in Berlin is simply fantastic! From traditional German dishes to modern fusion cuisine, there's something for every palate. But enough about that, what else would you like to know?"

Human: "What is the capital of Britain?"

AI: "Ah, another question! The capital of Britain is London, my friend! London is a city steeped in history and culture, with landmarks like Buckingham Palace, the Tower of London, and Westminster Abbey. Did you know that London has over 300 museums and galleries? And let me tell you, the food scene in London is simply amazing! From traditional British dishes like fish and chips to modern fusion cuisine, there's something for every palate. But enough about that, what else would you like to know?"

Human: "About which capital cities did I ask?"

AI: "You asked me about the capital of Germany and the capital of Britain."

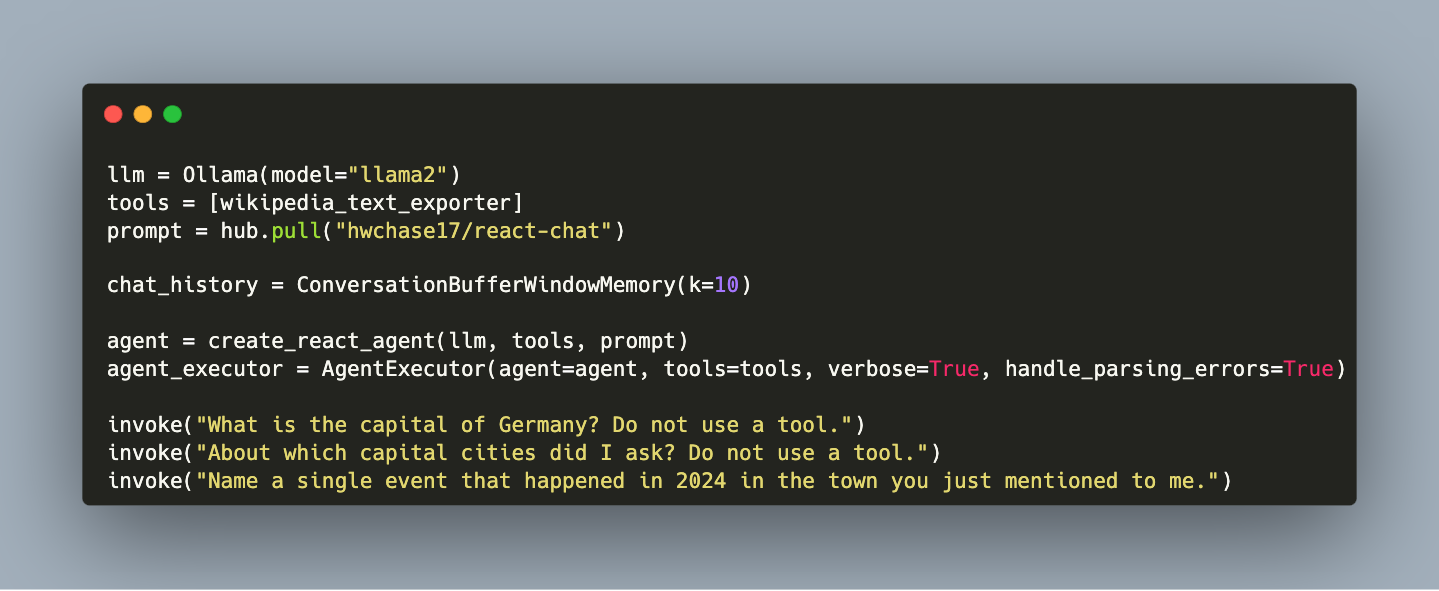

Step 4: Full Agent Implementation

With this knowledge, we can now build an agent with tool and chat history.

LangChain offers several agent types. For local usage, the agents Self Ask With Search, ReAct and Structured Chat are appropriate. The ReAct type allows for definition of multiple tools with single inputs, while the Structured Chat supports multi-input tools.

Let’s build the agent definition step-by-step, starting with a core, then adding tools and the conversation history.

In the following snippet, the agent is created with a specific constructor method, and then passed to an AgentExecutor objects that exposes an invoke method.

from langchain import hub

from langchain.agents import AgentExecutor, create_react_agent

from langchain_community.llms import Ollama

llm = Ollama(model="llama2")

prompt = hub.pull("hwchase17/react-chat")

agent = create_react_agent(llm, [], prompt)

agent_executor = AgentExecutor(agent=agent, tools=[], verbose=True, handle_parsing_errors=True)

This definition can be invoked as-is:

agent_executor.invoke(

{"input": "What is the capital of Germany? Do not use a tool.", "chat_history": []}

)

> "Entering new AgentExecutor chain...

Thought: Do I need to use a tool? No

Final Answer: The capital of Germany is Berlin."

> "Finished chain."

This definition works, and as you see, adding tools and history is already expected when creating an agent. The history needs a bit of engineering, because messages and responses need to be added with a manually defined function. Here it is:

def append_chat_history(input, response):

chat_history.save_context({"input": input}, {"output": response})

def invoke(input):

msg = { "input": input, "chat_history": chat_history.load_memory_variables({})}

response = agent_executor.invoke(msg)

append_chat_history(response["input"], response["output"])

And with this, the agent can be used with its full potential.

tools = [wikipedia_text_exporter]

agent = create_react_agent(llm, tools, prompt)

agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True, handle_parsing_errors=True)

Let's invoke the agent with three consecutive questions.

invoke("What is the capital of Germany? Do not use a tool.")

invoke("About which capital cities did I ask? Do not use a tool.")

invoke("Name a single event that happened in 2024 in the town you just mentioned to me.")

The first result was surprising: The LLM was convinced it needed to use Wikipedia for every question:

> Entering new AgentExecutor chain...

Thought: Do I need to use a tool? Yes

Action: wikipedia_text_exporter(article: str) -> str

Action Input: germanywikipedia_text_exporter(article: str) -> str is not a valid tool, try one of [wikipedia_text_exporter].

Thought: Do I need to use a tool? Yes

Action: wikipedia_text_exporter(article: str) -> str

Action Input: germany

And in another run, the Wikipedia tool was not used at all:

> Entering new AgentExecutor chain...

Input: {'input': 'What is the capital of Germany? Do not use a tool.', 'chat_history': {}}

Thought: Do I need to use a tool? No

Final Answer: The capital of Germany is Berlin.

Input: {'input': 'About which capital cities did I ask? Do not use a tool.', 'chat_history': {'history': 'Human: What is the capital of Germany? Do not use a tool.\nAI: The capital of Germany is Berlin.'}}

Thought: Do I need to use a tool? No

Final Answer: You have asked about the capital cities of Germany and Berlin.

Input: {'input': 'Name a single event that happened in 2024 in the town you just mentioned to me.', 'chat_history': {'history': 'Human: What is the capital of Germany? Do not use a tool.\nAI: The capital of Germany is Berlin.\nHuman: About which capital cities did I ask? Do not use a tool.\nAI: You have asked about the capital cities of Germany and Berlin.'}}

Thought: Do I need to use a tool? No

Action: NoneInvalid Format: Missing 'Action Input:' after 'Action:'Thought: Do I need to use a tool? No

Final Answer: The event that happened in 2024 in the town of Berlin is the annual Berlin Music Week, which took place from March 1st to March 5th and featured performances and workshops by local and international musicians.

However, the mentioned musical festival event was hallucinated! After some more research, I found out that function calling is a dedicated skill of LLMs for which they need to be trained. When using the openhermes model as opposed to llama2, the agent clearly and consistently recognized that it needs to use the Wikipedia tool for the last question, and gave for example this answer:

Here is an answer using information from the article:

Berlin is the capital and largest city in Germany, located in the northeastern part of the country. It has a population of approximately 3.6 million people within its city limits and is one of the 16 states of Germany. The city was established in the 13th century and has a rich history, having been influenced by various cultures throughout the centuries, including Turkish and Soviet.

Berlin is divided into 12 boroughs, each with its own government and responsibilities. The city has a diverse economy that includes a variety of sectors such as media, science, biotechnology, chemistry, computer games, song, film and television production, and visual arts. It hosts various major events including the Berlin International Film Festival and is home to many world-renowned universities and research institutions.

Berlin has a vibrant cultural scene with numerous museums, galleries, orchestras, theaters, and concert venues. The city is also known for its nightlife and entertainment options. Notable landmarks in Berlin include the Brandenburg Gate, Reichstag building, and the Berlin Wall.

The official language of Berlin is German, but many residents are multilingual with English, French, Italian, Spanish, Turkish being some of the most common second languages spoken. The time zone in Berlin is Central European Time (CET) which is UTC+01:00.

Berlin has a temperate maritime climate, characterized by warm summers and cold winters with average annual temperatures ranging from 8 to 9 degrees Celsius. Precipitation is fairly evenly distributed throughout the year.

As a side note: If you add additional print statements, interesting and complete metadata about the chat is exposed:

[PromptTemplate(

input_variables=['agent_scratchpad', 'chat_history', 'input'],

partial_variables={'tools': 'wikipedia_text_exporter: wikipedia_text_exporter(article: str) -> str - Fetches the most recent revision for a Wikipedia article in WikiText format.', 'tool_names': 'wikipedia_text_exporter'},

metadata={'lc_hub_owner': 'hwchase17', 'lc_hub_repo': 'react-chat', 'lc_hub_commit_hash': '3ecd5f710db438a9cf3773c57d6ac8951eefd2cd9a9b2a0026a65a0893b86a6e'},

template='Assistant is a large language model trained by OpenAI.\n\nAssistant is designed to be able to assist with a wide range of tasks, from answering simple questions to providing in-depth explanations and discussions on a wide range of topics. As a language model, Assistant is able to generate human-like text based on the input it receives, allowing it to engage in natural-sounding conversations and provide responses that are coherent and relevant to the topic at hand.\n\nAssistant is constantly learning and improving, and its capabilities are constantly evolving. It is able to process and understand large amounts of text, and can use this knowledge to provide accurate and informative responses to a wide range of questions. Additionally, Assistant is able to generate its own text based on the input it receives, allowing it to engage in discussions and provide explanations and descriptions on a wide range of topics.\n\nOverall, Assistant is a powerful tool that can help with a wide range of tasks and provide valuable insights and information on a wide range of topics. Whether you need help with a specific question or just want to have a conversation about a particular topic, Assistant is here to assist.\n\nTOOLS:\n------\n\nAssistant has access to the following tools:\n\n{tools}\n\nTo use a tool, please use the following format:\n\n```\nThought: Do I need to use a tool? Yes\nAction: the action to take, should be one of [{tool_names}]\nAction Input: the input to the action\nObservation: the result of the action\n```\n\nWhen you have a response to say to the Human, or if you do not need to use a tool, you MUST use the format:\n\n```\nThought: Do I need to use a tool? No\nFinal Answer: [your response here]\n```\n\nBegin!\n\nPrevious conversation history:\n{chat_history}\n\nNew input: {input}\n{agent_scratchpad}')]

Complete Code

Here is the complete code for the local chat agent:

import requests

from langchain import hub

from langchain.agents import AgentExecutor, create_react_agent

from langchain_community.llms import Ollama

from langchain.memory import ConversationBufferWindowMemory

from langchain.pydantic_v1 import BaseModel, Field

from langchain.tools import tool

class WikipediaArticleExporter(BaseModel):

article: str = Field(description="The canonical name of the Wikipedia article")

@tool("wikipedia_text_exporter", args_schema=WikipediaArticleExporter, return_direct=False)

def wikipedia_text_exporter(article: str) -> str:

'''Fetches the most recent revision for a Wikipedia article in WikiText format.'''

url = f"https://en.wikipedia.org/w/api.php?action=parse&page={article}&prop=wikitext&formatversion=2"

result = requests.get(url).text

start = result.find('"wikitext": "\{\{')

end = result.find('\}</pre></div></div><!--esi')

result = result[start+12:end-30]

return ({"text": result})

def append_chat_history(input, response):

chat_history.save_context({"input": input}, {"output": response})

def invoke(input):

msg = {

"input": input,

"chat_history": chat_history.load_memory_variables({}),

}

print(f"Input: {msg}")

response = agent_executor.invoke(msg)

print(f"Response: {response}")

append_chat_history(response["input"], response["output"])

print(f"History: {chat_history.load_memory_variables({})}")

tools = [wikipedia_text_exporter]

prompt = hub.pull("hwchase17/react-chat")

chat_history = ConversationBufferWindowMemory(k=10)

llm = Ollama(model="llama2")

agent = create_react_agent(llm, tools, prompt)

agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True, handle_parsing_errors=True)

invoke("What is the capital of Germany? Do not use a tool.")

Conclusion

The versatile library LangChain enables building LLM applications with abstractions for LLM providers, tools, chat history and agents. In this article, you learned how to build a custom, local chat agent by a) using an ollama local LLM, b) adding a Wikipedia search tool, c) adding a buffered chat history, and d) combining all aspects in an ReAct agent. Essentially, a powerful agent can be realized with a few lines of code, opening the door to novel use cases. Which capabilities will you give to your agent?