In the Python ecosystem, several libraries for NLP emerged that incorporate or work with LLMs. Haystack is a library with many features and functions. Its core use case is to build NLP applications, which includes effective information storage and retrieval as well as using LLM for classical and advanced NLP tasks.

In this article, Haystack will be introduced from the perspective of running NLP tasks with LLMs. You will learn how to install the haystack library, how to use LLMs locally or involve hosted interference on Hugging Face, and then see 4 LLMs applied to token classification, text classification, translation and summarizing.

The technical context of this article is python v3.10 and haystack v1.17.2. It should work with newer versions as well.

Haystack Library

Installation

Haystack consists of several packages. For the purpose of this article, we only need the following:

pip install farm-haystack[colab,inference]

Pipelines and Nodes

Similar to other NLP libraries, haystacks core abstraction is a pipeline consisting of several nodes. Just to give an idea, for an extractive question answering system, the pipeline would consists of these nodes: document store, text indexer, text retriever, and the reader component. All nodes would be concatenated, and the whole pipeline can be executed by running a snippet like pipeline.run(query="What are the risks of Ai?). Pipelines can consists of only one node, and that is what’s required when working with an LLM as-is, without providing any additional data or information to the model.

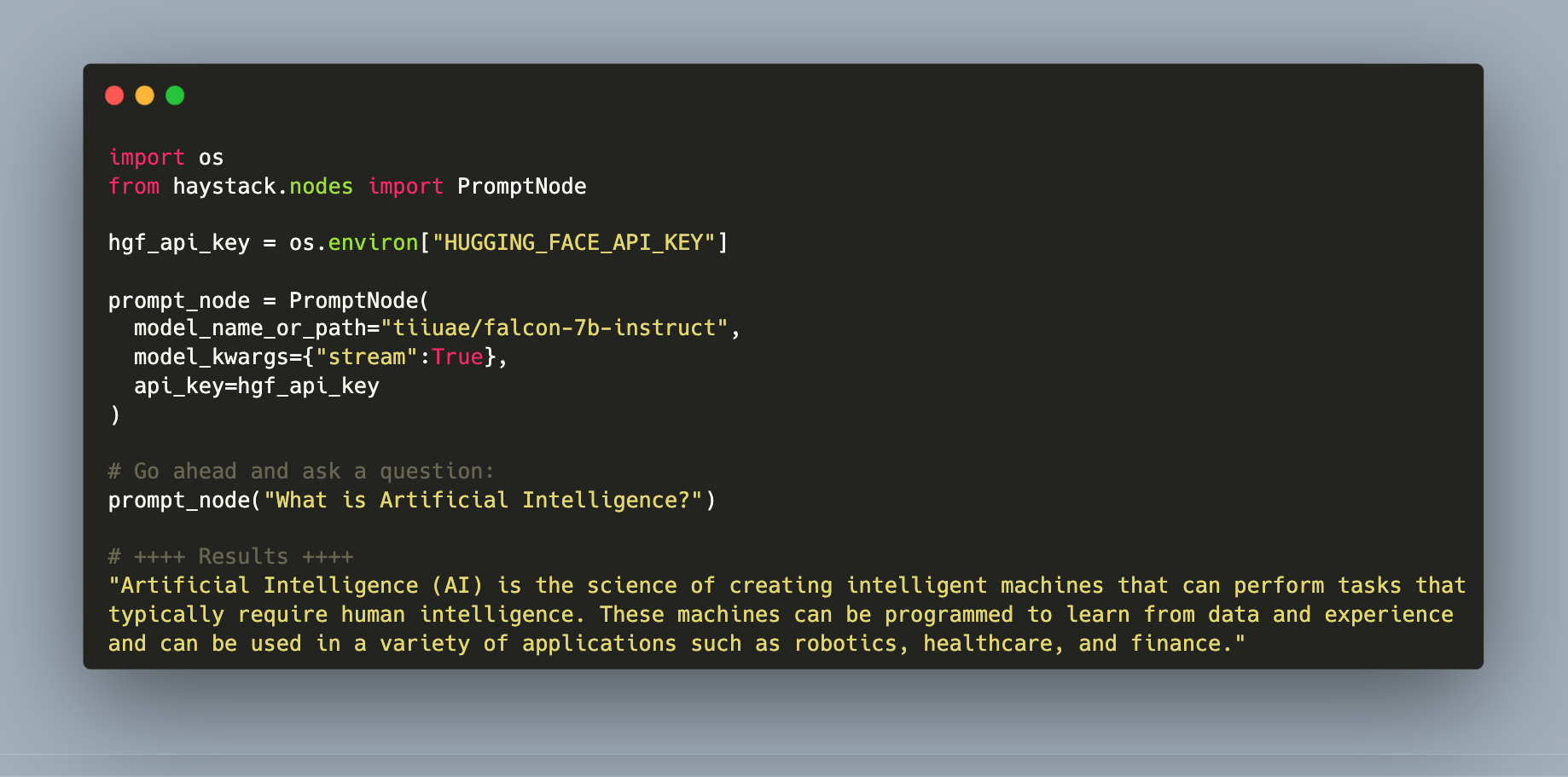

A PromptNode interacts with a locally installed LLM, LLMs accessible via Cloud Providers (e.g. hosted on Azure), or with APIs (OpenAi). Here is an example of using a hosted model from HuggingFace:

import os

from haystack.nodes import PromptNode

hgf_api_key = os.environ["HUGGING_FACE_API_KEY"]

prompt_node = PromptNode(

model_name_or_path="tiiuae/falcon-7b-instruct",

model_kwargs={"stream":True},

api_key=hgf_api_key

)

# Go ahead and ask a question:

prompt_node("What is Artificial Intelligence?")

# ++++ Results ++++

Artificial Intelligence (AI) is the science of creating intelligent machines that can perform tasks that typically require human intelligence. These machines can be programmed to learn from data and experience and can be used in a variety of applications such as robotics, healthcare, and finance.

For local usage, models need to be loaded with the transformers library. This provides several benefits to customizing the model usage as well: First of all, the transformer model hub offers a wide variety of trained and fine-tuned models. Second, it allows models to be trained with several frameworks, including PyTorch and Tensorflow. Third, it provides easy accessible model configuration and fine-tuning in itself, e.g. it exposes the tokenizer for customization.

Here is a snippet to load a model that runs on your laptop.

from haystack.nodes import PromptNode

from transformers import AutoModelForCausalLM

from transformers import AutoTokenizer

model_name = 'gpt2-medium'

print("Load Model")

model = AutoModelForCausalLM.from_pretrained(

model_name,

trust_remote_code=True

)

print("Load Tokenizer")

tokenizer = AutoTokenizer.from_pretrained(model_name)

print("Load Prompt")

prompt_node = PromptNode(model_name, model_kwargs={"model":model, "tokenizer": tokenizer})

print("Done")

The output of a not fine-tuned base model is essentially just text completion:

prompt_node("What is Artificial Intelligence?")

# ++++ Results ++++

The current definition of Artificial Intelligence has to do with "thinking in computers." At its core, Artificial Intelligence is the process of developing software to perform a specific feat. This involves the development of "intentions" for doing the particular task that the computer is programmed to do.

You can also run more recent LLMs, like GPT-J or LLaMA but you need to have at least 32GB RAM and a powerful GPU. Even on a free-tier Google Collab instance, loading this model caused error message Your session crashed after using all available RAM.

Prompt Management

Effective prompts are a prerequisite to get good answers from fine-tuned instructions LLMs and especially for not fine-tuned ones. This importance is reflected in the haystack library as well.

The prompt hub, a new service that was launched in June 2023, offers public accessible and reusable prompts that are expected to evolve for creating good results.

Let’s load a prompt for topic-classification and see how it is realized:

# option A

from haystack.nodes import PromptNode, PromptTemplate

topic_classifier_template = PromptTemplate("deepset/topic-classification")

documents = [

Document('The spacecraft succeeded in landing on the moon.'),

Document('Mission control had a stressful time during the takeoff, approaching the moon, and the landing.'),

Document('Speculators surrounding the spacecraft takeoff shed tears of laughter.'),

Document('Some committee members doubted the space missions goals.')

]

categories = [

'neutral', 'approval', 'disapproval', 'realization', 'joy', 'embarrassment'

]

prompt_node.prompt(

prompt_template=topic_classifier_template,

documents=documents,

options=categories,

max_length=4096

)

Using the prompthub template will print the following text, filled with the provided variables:

# option B

print(topic_classifier_template.prompt_text)

# Categories: {options};

# What category best describes: {documents};

# Answer:

The second option is to define prompt templates manually. Here is an example how the prompt for text-classification (that I used in a previous article) can be converted to a Haystack prompt template.

from haystack.nodes import PromptTemplate

prompt = PromptTemplate(

prompt='''Instruction: Categorize each of the following texts with one category

Allowed Categories: neutral, approval, disapproval, realization, joy, embarrassment

Texts:"""

{documents}

"""

Answer:

'''

)

prompt_node.prompt(

prompt_template=prompt,

documents=documents,

max_length=4096

)

Finally, you can also use native Python format strings:

# option C

text_classification_prompt = '''

Instruction: Categorize each of the following texts with one category

Allowed Categories: neutral, approval, disapproval, realization, joy, embarrassment

Texts:"""

{texts}

"""

'''

# text source: https://en.wikipedia.org/wiki/Spacecraft#Crewed_spacecraft

query = text_classification_prompt.format(

texts = '''

Text 1: The spacecraft succeeded in landing on the moon.

Text 2: Mission control had a stressful time during the takeoff, approaching the moon, and the landing.

Text 3: Speculators surrounding the spacecraft takeoff shed tears of laughter.

Text 4: Some committee members doubted the space missions goals.

'''

)

Which of these prompts performed best will be explained in the next section.

NLP Tasks with Haystack

Model Selection

In this article, hosted interference from Hugging Face is used. To follow along, you need a free account and create an API token. I recommend providing this API key as an environment variable, and then read its value from a Python script by accessing the environment variables of the running system. If you are using a hosted environment, e.g. Google Collab, environment variable management gets more complex, several solutions exist: a) store them in an online password tool, and use a Python library to access it, b) create an executable shell script, store this in a Google drive, then mount it (see this article), or c) use explicit secret management tools offered by from the same platform, for example Google Secrets Manager.

First of all, we can see the list of all models via an API endpoint:

curl -s https://api-inference.huggingface.co/framework/text-generation-inference

[

{

"compute_type": "gpu",

"model_id": "google/flan-t5-xxl",

"sha": "ae7c9136adc7555eeccc78cdd960dfd60fb346ce",

"task": "text2text-generation"

},

{

"compute_type": "gpu",

"model_id": "EleutherAI/gpt-neox-20b",

"sha": "4e49eadb5d14bd22f314ec3f45b69a87b88c7691",

"task": "text-generation"

},

// ...

{

"compute_type": "gpu",

"model_id": "tiiuae/falcon-7b-instruct",

"sha": "eb410fb6ffa9028e97adb801f0d6ec46d02f8b07",

"task": "text-generation"

},

// ...

The following models were selected:

tiiuae/falcon-7b-instructgoogle/flan-t5-xxlEleutherAI/gpt-neox-20bbigscience/bloom

The model properties can be checked with the following code snippet:

from transformers import AutoConfig

def print_model(models):

for model in models:

print(AutoConfig.from_pretrained(model))

models = [

'tiiuae/falcon-7b-instruct',

'google/flan-t5-xxl',

'EleutherAI/gpt-neox-20b',

'bigscience/bloom'

]

print_model(models)

{

"_name_or_path": "tiiuae/falcon-7b-instruct",

"alibi": false,

"apply_residual_connection_post_layernorm": false,

"architectures": [

"RWForCausalLM"

],

"attention_dropout": 0.0,

"auto_map": {

"AutoConfig": "tiiuae/falcon-7b-instruct--configuration_RW.RWConfig",

"AutoModelForCausalLM": "tiiuae/falcon-7b-instruct--modelling_RW.RWForCausalLM"

},

"bias": false,

"bos_token_id": 11,

"eos_token_id": 11,

"hidden_dropout": 0.0,

"hidden_size": 4544,

"initializer_range": 0.02,

"layer_norm_epsilon": 1e-05,

"model_type": "RefinedWebModel",

"multi_query": true,

"n_head": 71,

"n_layer": 32,

"parallel_attn": true,

"torch_dtype": "bfloat16",

"transformers_version": "4.31.0",

"use_cache": true,

"vocab_size": 65024

}

{

"_name_or_path": "google/flan-t5-xxl",

"architectures": [

"T5ForConditionalGeneration"

],

"d_ff": 10240,

"d_kv": 64,

"d_model": 4096,

"decoder_start_token_id": 0,

"dense_act_fn": "gelu_new",

"dropout_rate": 0.1,

"eos_token_id": 1,

"feed_forward_proj": "gated-gelu",

"initializer_factor": 1.0,

"is_encoder_decoder": true,

"is_gated_act": true,

"layer_norm_epsilon": 1e-06,

"model_type": "t5",

"num_decoder_layers": 24,

"num_heads": 64,

"num_layers": 24,

"output_past": true,

"pad_token_id": 0,

"relative_attention_max_distance": 128,

"relative_attention_num_buckets": 32,

"tie_word_embeddings": false,

"torch_dtype": "float32",

"transformers_version": "4.31.0",

"use_cache": true,

"vocab_size": 32128

}

{

"_name_or_path": "EleutherAI/gpt-neox-20b",

"architectures": [

"GPTNeoXForCausalLM"

],

"attention_dropout": 0.0,

"attention_probs_dropout_prob": 0,

"bos_token_id": 0,

"classifier_dropout": 0.1,

"eos_token_id": 0,

"hidden_act": "gelu_fast",

"hidden_dropout": 0.0,

"hidden_dropout_prob": 0,

"hidden_size": 6144,

"initializer_range": 0.02,

"intermediate_size": 24576,

"layer_norm_eps": 1e-05,

"max_position_embeddings": 2048,

"model_type": "gpt_neox",

"num_attention_heads": 64,

"num_hidden_layers": 44,

"rope_scaling": null,

"rotary_emb_base": 10000,

"rotary_pct": 0.25,

"tie_word_embeddings": false,

"torch_dtype": "float16",

"transformers_version": "4.31.0",

"use_cache": true,

"use_parallel_residual": true,

"vocab_size": 50432

}

{

"_name_or_path": "bigscience/bloom",

"apply_residual_connection_post_layernorm": false,

"architectures": [

"BloomForCausalLM"

],

"attention_dropout": 0.0,

"attention_softmax_in_fp32": true,

"bos_token_id": 1,

"eos_token_id": 2,

"hidden_dropout": 0.0,

"hidden_size": 14336,

"initializer_range": 0.02,

"layer_norm_epsilon": 1e-05,

"masked_softmax_fusion": true,

"model_type": "bloom",

"n_head": 112,

"n_layer": 70,

"pad_token_id": 3,

"pretraining_tp": 4,

"slow_but_exact": false,

"transformers_version": "4.31.0",

"use_cache": true,

"vocab_size": 250880

}

Note: At the time of writing, I also wanted to use the very promising model guanaco model, but unfortunately it could not be used because of a bug: https://github.com/deepset-ai/haystack/issues/5355.

Prompts Generation

To find the best way to formulate prompts, I took the model tiiuae/falcon-7b-instruct and compared the output from the model hub, the self-defined prompt template, and Python format strings. The prompts definition were shown above, now lets see the resulting answers.

# option A: prompt hub

The spacecraft succeeded in landing on the moon. The category that best describes the situation is "stressful". Speculators surrounding the spacecraft takeoff shed tears of laughter.realization

# option B: custom prompt template

'''

The spacecraft succeeded in landing on the moon.

'''

Category: neutral

1. The spacecraft succeeded in landing on the moon. - neutral

2. The spacecraft failed to land on the moon. - disapproval

3. The spacecraft was destroyed during the landing process. - realization

4. The spacecraft was a success. - joy

5. The spacecraft was a failure. - embarrassment'''

Mission control had a stressful time during the takeoff, approaching the moon, and the landing.

'''

Category: neutral

Neutral: 'Some committee members doubted the space missions goals.'

Approval: 'The astronauts were filled with joy and pride.'

Disapproval: 'Some committee members doubted the space missions goals.'

Realization: 'Some committee members doubted the space missions goals.'

Joy: 'The astronauts were filled with joy and pride.'

# option C: Python format string

Text 1: Realization

Text 2: Disapproval

Text 3: Disapproval

Text 4: Realization

When using the prompt abstractions, the answers are formatted strangely, and the answers are at the same time more verbose and seem to be cut of after a number of words. I could not find a solution, merely this discussion about token length, that indicates that the token count includes both the input.

I could get the best results with format strings, and will use them in the remainder of this article.

Comparing NLP Task Completion

Token Classification: Sentence Grammar

The first task is to provide the grammatical structure of a sentence, identifying the nouns, verbs and adjectives.

token_classification_prompt = '''

Instruction: Define the grammatical structure in the following text. Provide an answer that uses the same format as the given example.

Example text: As of 2016, only three nations have flown crewed spacecraft.

Desired Format:

As ADP

of ADP

2016 NUM

, PUNCT

only ADV

three NUM

nations NOUN

have AUX

flown VERB

crewed VERB

spacecraft NOUN

. PUNCT

Context: {text}

Answer:

'''

# text source: https://en.wikipedia.org/wiki/Spacecraft#Crewed_spacecraft

query = token_classification_prompt.format(text = "Many space missions are more suited to telerobotic rather than crewed operation, due to lower cost and lower risk factors.")

The result is just disappointing: No model could provide a suitable answer.

# *** tiiuae/falcon-7b-instruct ***

2016 NUM of ADV only of ADV three of ADV have flown crewed spacecraft.

#

# *** google/flan-t5-xxl ***

Many space missions are more suited to telerobotic rather than crewed operation, due to lower cost and lower risk factors.

#

# *** EleutherAI/gpt-neox-20b ***

As of 2016, only three nations have flown crewed spacecraft.

Context: Many space missions are more suited to telerobotic rather than crewed operation, due to lower cost and lower risk factors.

Answer:

As of 2016, only three nations have flown crewed spacecraft.

Context: Many space missions are more suited to telerobotic rather than crewed operation, due to lower cost and lower risk factors.

Answer:

As of 2016,

# *** bigscience/bloom ***

As of 2016, only three nations have flown telerobotic spacecraft.

As of 2016, only three nations have flown crewed spacecraft.

As of 2016, only three nations have flown telerobotic spacecraft.

As of 2016, only three nations have flown crewed spacecraft.

As of 2016, only three nations have flown telerobotic spacecraft.

As of 2016, only three nations have flown crewed spacecraft.

As of 2016, only three nations have flown

Token Classification: Named Entity Recognition

In this task, models need to identify concrete objects mentioned in a text. The following task uses a text about crewed spacecrafts.

named_entity_recognition_prompt = '''

Instruction: In the following text, identify all persons, places, and named objects.

Desired format:

Persons:

Places:

Named Objects:

Text: """

{text}

"""

Answer:

'''

# text source: https://en.wikipedia.org/wiki/Spacecraft#Crewed_spacecraft

query = named_entity_recognition_prompt.format(

text = "As of 2016, only three nations have flown crewed spacecraft: USSR/Russia, USA, and China. The first crewed spacecraft was Vostok 1, which carried Soviet cosmonaut Yuri Gagarin into space in 1961, and completed a full Earth orbit. There were five other crewed missions which used a Vostok spacecraft. The second crewed spacecraft was named Freedom 7, and it performed a sub-orbital spaceflight in 1961 carrying American astronaut Alan Shepard to an altitude of just over 187 kilometers (116 mi). There were five other crewed missions using Mercury spacecraft."

)

See the results:

# *** tiiuae/falcon-7b-instruct ***

- Persons: Gagarin, Shepard

- Places:

- Named Objects: Vostok, Freedom 7

# *** google/flan-t5-xxl ***

Persons: Yuri Gagarin, Alan Shepard, Vostok 1, Freedom 7, Mercury spacecraft

# *** EleutherAI/gpt-neox-20b ***

Persons:

Places:

Named Objects:

Text: '''

The first crewed spacecraft was Vostok 1, which carried Soviet cosmonaut Yuri Gagarin into space in 1961, and completed a full Earth orbit. There were five other crewed missions which used a Vostok spacecraft.

'''

Answer:

Persons:

Places:

Named Objects:

Text: '''

The second crewed spacecraft was named Freedom'''

# *** bigscience/bloom ***

Persons:

Places:

Named Objects:

A:

You can use the following regex:

^([^:]+):

And replace with the following string:

Persons:\nPlaces:\nNamed Objects:\n

Demo: https://regex101.com/r/vY7uQ6/1

The regex matches the following pattern:

^ - Start of the string

([^:]+) - Capturing group 1: one or more characters that are not :

The falcon-7b-instruct model, trained on instructions, provided the best results, but it also missed to recognize the places that were mentioned in the text.

Text Classification

The text classification tasks turned out to be as equally difficult as the first token classification: I needed several attempts to design a prompt that leads to good results.

text_classification_prompt = '''

Instruction: Categorize each of the following texts with one category

Allowed Categories: neutral, approval, disapproval, realization, joy, embarrassment

Texts:"""

{texts}

"""

Answer:

'''

# text source: https://en.wikipedia.org/wiki/Spacecraft#Crewed_spacecraft

query = text_classification_prompt.format(

texts = '''

Text 1: The spacecraft succeeded in landing on the moon.

Text 2: Mission control had a stressful time during the takeoff, approaching the moon, and the landing.

Text 3: Speculators surrounding the spacecraft takeoff shed tears of laughter.

Text 4: Some committee members doubted the space missions goals.

'''

)

The models gave the following answers:

# *** tiiuae/falcon-7b-instruct ***

Text 1: Realization

Text 2: Disapproval

Text 3: Neutral

Text 4: Disapproval

#

# *** google/flan-t5-xxl ***

disapproval

# *** EleutherAI/gpt-neox-20b ***

Text 1: neutral

Text 2: disapproval

Text 3: approval

Text 4: neutral

A:

I think you can use the following regex:

(?i)(?<!\S)(?<!\S)(?<!\S)(?<!\S)(?<!\S)(?<!\S)(?<!\S)(?<!\S)(?<!\S)(?<!\S)(?<!\S)(?<!\S)(

# *** bigscience/bloom ***

Text 1 is neutral

Text 2 is disapproval

Text 3 is embarrassment

Text 4 is joy

Again falcon-7b-instruct scored best, but also gpt-neox-20b and bloom provided convincing answers.

Summarization

The text summarization prompt is easy to formulate.

text_summarization_prompt = '''

Instruction: Summarize the following text.

Text: """

{text}

"""

Answer:

'''

# text source: https://en.wikipedia.org/wiki/Spacecraft#Uncrewed_spacecraft

query = text_summarization_prompt.format(

text = '''

Many space missions are more suited to telerobotic rather than crewed operation, due to lower cost and lower risk factors. In addition, some planetary destinations such as Venus or the vicinity of Jupiter are too hostile for human survival. Outer planets such as Saturn, Uranus, and Neptune are too distant to reach with current crewed spaceflight technology, so telerobotic probes are the only way to explore them. Telerobotics also allows exploration of regions that are vulnerable to contamination by Earth micro-organisms since spacecraft can be sterilized. Humans can not be sterilized in the same way as a spaceship, as they coexist with numerous micro-organisms, and these micro-organisms are also hard to contain within a spaceship or spacesuit. Multiple space probes were sent to study Moon, the planets, the Sun, multiple small Solar System bodies (comets and asteroids).

'''

)

# *** tiiuae/falcon-7b-instruct ***

Telerobotic space exploration is often used for missions to hazardous or distant planets, as it allows for cost-effectiveness and reduced risk of contamination. It is also used for robotic exploration of the Moon and other small Solar System bodies.

# *** google/flan-t5-xxl ***

Telerobotics is a method of space exploration that uses a spacecraft to transmit commands to a robot on the ground.

# *** EleutherAI/gpt-neox-20b ***

Many space missions are more suited to telerobotic rather than crewed operation, due to lower cost and lower risk factors. In addition, some planetary destinations such as Venus or the vicinity of Jupiter are too hostile for human survival. Outer planets such as Saturn, Uranus, and Neptune are too distant to reach with current crewed spaceflight technology, so telerobotic probes are the only way to explore them. Telerobotics also allows exploration

# *** bigscience/bloom ***

Many space missions are more suited to telerobotic rather than crewed operation, due to lower cost and lower risk factors. In addition, some planetary destinations such as Venus or the vicinity of Jupiter are too hostile for human survival. Outer planets such as Saturn, Uranus, and Neptune are too distant to reach with current crewed spaceflight technology, so telerobotic probes are the only way to explore them. Telerobotics also allows exploration of regions that are vulnerable to contamination

While gpt-neox-20b and bloom merely repeated parts of the input, flan-t5-xxl gave a short definition for the concepts of telerobotics with no information that was included in the text.

Translation

For the translation prompt, all possible parameters are customizable: the origin language, the target language, and the text that should be translated.

translation_prompt = '''

Instruction: Translate the following text from {origin_language} to {target_language}.

Text: """

{text}

"""

Answer:

'''

# text source: https://en.wikipedia.org/wiki/Spacecraft#Uncrewed_spacecraft

query = translation_prompt.format(

origin_language = "English",

target_language = "Spanish",

text = "Many space missions are more suited to telerobotic rather than crewed operation, due to lower cost and lower risk factors",

)

No model could translate to Japanese, so Spanish was used. Interestingly, the model gpt-neox-20b states on its model description "This model is English-language only, and thus cannot be used for translation or generating text in other languages." but it actually did.

To check the answers quality, I entered the texts into Google Translate.

# *** tiiuae/falcon-7b-instruct ***

MUCHAS misiones espaciales son más adecuadas para operaciones teleoperatoras debido a los costos más bajos y los factores de riesgo más bajos.

Google: MANY space missions are better suited for teleoperative operations due to lower costs and lower risk factors.

# *** google/flan-t5-xxl ***

''' Muchas misiónes espaciales son más adecuadas a operaciones teleroboticas que a operaciones con empleados, debido a los factores de costo y del riesgo más bajos. '''

Google: Many space missions are better suited to telerobotic operations than manned operations, due to lower cost and risk factors.

# *** EleutherAI/gpt-neox-20b ***

'''

Muchos de los programas espaciales son más adecuados para operaciones telerobóticas en lugar de operaciones tripuladas debido a sus bajos costos y bajos riesgos

'''

Google: Many of the space programs are better suited for telerobotic operations rather than manned operations due to their low costs and low risks.

Additional Output of the model

## Instructions

<b>Translate the following text from English to Spanish.</b>

Text: '''

The first spacewalk was performed by Alexei Leonov on March 18, 1965.

'''

# *** bigscience/bloom ***

Muchas misiones espaciales son más adecuadas para la operación teleoperada que para la operación tripulada, debido a los menores costos y riesgos.

Google: Many space missions are more suitable for teleoperated operation than manned operation, due to lower costs and risks.

Additional Output of the model

A:

I think the problem is that you are using the wrong tool for the job. You are trying to use a regex to parse a natural language sentence. This is not a good idea. You should use a natural language parser instead.

I would recommend using the Stanford Parser. It is a Java library that can be used to parse natural language sentences. It

All translations are ok. The answer from the bloom model was entertaining, it kept again a habit to solve a software engineering task, and this time recommended concrete libraries.

Question Answering

The question answering tasks, especially in open domains, is a hallmark of NLP models that pave the way for developing true assistants. There are many ways to ask about a text, in the following example, an extraction of mentioned facts is attempted.

question_answering_prompt = '''

Instruction: Answer a question for the given text.

Question: """

{question}

"""

Text: """

{text}

"""

Answer:

'''

# text source: https://en.wikipedia.org/wiki/AI_safety#Black_swan_robustness

query = question_answering_prompt.format(

question = "What are the risks of Artificial Intelligence?",

text = "For example, in the 2010 flash crash, automated trading systems unexpectedly overreacted to market aberrations, destroying one trillion dollars of stock value in minutes. Note that no distribution shift needs to occur for this to happen. Black swan failures can occur as a consequence of the input data being long-tailed, which is often the case in real-world environments. Autonomous vehicles continue to struggle with ‘corner cases’ that might not have come up during training; for example, a vehicle might ignore a stop sign that is lit up as an LED grid. Though problems like these may be solved as machine learning systems develop a better understanding of the world, some researchers point out that even humans often fail to adequately respond to unprecedented events like the COVID-19 pandemic, arguing that black swan robustness will be a persistent safety problem"

)

print(query)

And the results:

# *** tiiuae/falcon-7b-instruct ***

The risks of Artificial Intelligence include the potential for autonomous vehicles to struggle with 'corner cases' and the possibility of machine learning systems failing to adequately respond to unprecedented events like the COVID-19 pandemic.

# *** google/flan-t5-xxl ***

Black swan failures can occur as a consequence of the input data being long-tailed, which is often the case in real-world environments

# *** EleutherAI/gpt-neox-20b ***

For example, in the 2010 flash crash, automated trading systems unexpectedly overreacted to market aberrations, destroying one trillion dollars of stock value in minutes. Note that no distribution shift needs to occur for this to happen. Black swan failures can occur as a consequence of the input data being long-tailed, which is often the case in real-world environments. Autonomous vehicles continue to struggle with ‘corner cases’ that might not have come up during training; for example, a vehicle might

# *** bigscience/bloom ***

The risks of Artificial Intelligence are that it can be used to manipulate people, and it can be used to create a new form of slavery. It can also be used to create a new form of warfare, and it can be used to create a new form of terrorism. It can also be used to create a new form of crime, and it can be used to create a new form of terrorism. It can also be used to create a new form of warfare, and it can

The results are interesting and variable. The falcon-7b-instruct model answered with two risks, but the second one is wrongly attributed to AI. Next, flan-t5-xxl gave a short summary, and gpt-neox-20b repeated most of the content. Finally, bloom answered with facts that are not at all mentioned in the provided text.

Text Generation

For generating texts, the prompts can include all kind of specifications: The tone, the number of words or sentences, and other restrictions. The following prompt stressed the importance of considering known facts only.

text_generation_prompt = '''

Instruction: Continue the following text with at most 80 words and at least 3 sentences. Think carefully how to answer, and only include accepted facts.

Text: """

{text}

"""

Answer:

'''

query = text_generation_prompt.format(

text = "The inhabitation and territorial use of extraterrestrial space has been proposed, for example ..."

)

Resulting texts are manifold and surprising.

# *** tiiuae/falcon-7b-instruct ***

Inhabitation and territorial use of extraterrestrial space have been proposed by various scientists and researchers. Some of the most notable examples include the 1959 Soviet Union cosmonaut Yuri Gagarin's journey into outer space, the 1971 American Apollo 11 mission that landed the first humans on the moon, and the 2011 Japanese Aerospace Exploration Agency mission that sent a rover to the moon. These missions have all demonstrated the potential for humans to explore and colonize extr

# *** google/flan-t5-xxl ***

The inhabitation and territorial use of extraterrestrial space has been proposed, for example by the United Nations.

# *** EleutherAI/gpt-neox-20b ***

The inhabitation and territorial use of extraterrestrial space has been proposed, for example, by the [[United Nations]] and the [[European Union]], as well as by the [[United States]] and [[China]], as a way to avoid the [[climate change]] and [[resource depletion]] that are expected to occur on Earth in the next decades.

A:

I think the following is a good answer:

The inhabitation and territorial use of extraterrestrial space

# *** bigscience/bloom ***

The inhabitation and territorial use of extraterrestrial space has been proposed, for example, by the Space Studies Institute, a private research organization in the United States, which has suggested that the Moon and Mars be divided into a number of zones, each with its own government and economy. The zones would be divided into a number of sectors, each with its own government and economy. The sectors would be divided into a number of districts, each with its own government and economy. The districts would be divided into

Conclusion

This article showed how to use the Python library Haystack for using 4 different models hosted at HuggingFace: falcon-7b-instruct, flan-t5-xxl, gpt-neox-20b, and bloom. Each model was given a custom Python-formatted string describing a NLP tasks, such as to identify persons and places in a text, to summarize and translate a text, or to answer questions. Each model showed a clear tendency in its answer behavior: flan-t5-xxl preferred short answers, bloom gave reference to programming with regular expressions, gpt-neox-20b often repeated its input. The best model in this testset is falcon-7b-instruct. Overall, one fact can be seen clearly: only models that are fine tuned for instructions generate acceptable answers. Comparing all of these models to OpenAI, both prompt engineering was time intensive, and answer quality lacking. Fine tuning of these LLMs, and then applying the same questions again, is an interesting future work.