OpenShift is a platform for running containerized application workloads. It has been in development for over 10 years, and since version 3 it has been built on Kubernetes as its orchestration layer.

This article is a hands-on tutorial on installing an OpenShift Kubernetes cluster on Google Cloud. You will learn how to install the required binaries, how to create a Google Cloud account with sufficient access rights, how to draft a basic configuration file, and finally how to install the cluster. In addition, you will see how to access the cluster via kubectl and SSH.

Prerequisites

Google Kubernetes Engine, called GKE from here on, requires a fully registered and activated Google account. You also need to provide billing information, such as a credit card, and be willing to pay the cost of hosting nodes and pods. If you register a new account, you get a free budget of $300 for all Google Cloud services. During my trial, operating the cluster with its default configuration cost about $16 per day.
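To put the cost figures into perspective, dividing the free budget by the daily cost gives the number of full days a cluster like mine could run on the credit alone. A minimal bash sketch; `budget_days` is a hypothetical helper, and $16 per day is only what I observed, so other regions and machine types will differ:

```shell
#!/usr/bin/env bash
# Hypothetical helper: number of full days a budget lasts at a fixed daily cost.
# Bash arithmetic is integer-only, so a partial last day is dropped.
budget_days() {
  local budget="$1" cost_per_day="$2"
  echo $(( budget / cost_per_day ))
}

budget_days 300 16   # prints 18
```

So the $300 credit covers roughly two and a half weeks of continuous operation.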

The cluster installation is coordinated from a dedicated computer, which I call the GKE controller in this article. On the GKE controller, you will install the gcloud binary and the OpenShift binaries. The gcloud binary provides extensive tooling for everything from authentication and IAM user creation to resource creation and maintenance. Compared directly with AWS, I found the Google Cloud management console better structured and easier to use. It is worth taking some time to read the GKE introduction documentation to get to know how a Kubernetes cluster on Google Cloud is operated. The OpenShift binary oc facilitates cluster maintenance tasks and provides deep integration with other Kubernetes abstractions.

Like in my other articles, I wanted to automate as many steps as possible with Terraform: provision all necessary Google Cloud resources automatically, and then just run a single cluster installation command with the OpenShift installer. However, while trying this out, I ran into many obstacles, the final one being that service account authentication requires a very specific key format which simply cannot be created with Terraform, but requires interaction with the Google Cloud console. After learning this, I rewrote the article from the beginning, using many hints and commands from an article about OKD on GCP. Alas, the installation requires juggling between the gcloud and OpenShift binaries, manual operations in the Google Cloud console, and bash scripts.

Part 1: OpenShift Overview

There are several OpenShift releases, targeting different audiences:

- OpenShift Container Platform: The on-premise enterprise version, which requires VMs or bare metal servers that run Red Hat Enterprise Linux or Red Hat Enterprise Linux CoreOS

- OKD: The upstream, community-developed OpenShift version on which the other OpenShift distributions are based. Its source code is available on GitHub. Version 4 uses the CRI-O container engine and includes a built-in image registry.

- OpenShift Online: A public cloud service that runs on AWS or Google Cloud to deploy OpenShift.

- OpenShift Dedicated: The managed private cluster equivalent, also runs on AWS, Google Cloud, Azure, and the IBM Cloud

OKD is the OpenShift distribution of my choice for this article. It supports several installation targets, including bare metal and cloud environments such as AWS, Google Cloud, Azure, Alibaba Cloud, and many more. For all of these, the infrastructure is either user-provisioned, meaning you prepare it before the installation, or installer-provisioned, meaning the installer creates it for you.

Of all these methods, I decided to use installer-provisioned infrastructure on Google Cloud. The GCP installation manual gives a general overview of the installation steps (GCP project creation, service account setup, enabling APIs) as well as the very important resource requirements. The requirements took me by surprise during my installation attempt, so be warned: a newly created GCP account only has 500 GB out of the required 750 GB of storage, and only 24 CPUs out of the required 28 CPUs. You need to increase these quotas in the Google Cloud management console by filing a small support ticket. (During my installation, the tickets were approved within minutes, but better plan this ahead of time.)
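Before starting, it helps to compare the current regional quotas with these requirements. The sketch below is hedged: `check_quota` and `list_region_quotas` are hypothetical helper names, the `--flatten`/`--format` projection is my assumption of a convenient way to tabulate the `quotas` field of `gcloud compute regions describe`, and the required values (28 CPUs, 750 GB) are the ones from the manual quoted above. Setting `GCLOUD=echo` prints the gcloud command instead of running it.

```shell
#!/usr/bin/env bash
# Compare an available quota against a requirement.
# Prints the result and returns 1 when the quota is too small.
check_quota() {
  local metric="$1" available="$2" required="$3"
  if (( available < required )); then
    echo "${metric}: ${available} available, ${required} required, short by $(( required - available ))"
    return 1
  fi
  echo "${metric}: ${available} available, ${required} required, OK"
}

# List the quotas of a region as a table (metric, limit, usage).
# GCLOUD can be overridden (e.g. GCLOUD=echo) for a dry run.
list_region_quotas() {
  local region="$1"
  "${GCLOUD:-gcloud}" compute regions describe "$region" \
    --flatten=quotas \
    --format="table(quotas.metric,quotas.limit,quotas.usage)"
}

# Example:
#   list_region_quotas europe-west4
#   check_quota CPUS 24 28   # prints "CPUS: 24 available, 28 required, short by 4"
```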

Part 2: Tool Installation

gcloud

The gcloud binary is available for all platforms, either as a direct download or as a package. On the release page, select the appropriate platform and start the download.

Assuming you use Linux, just run the following commands to get started:

$> curl -O https://dl.google.com/dl/cloudsdk/channels/rapid/downloads/google-cloud-cli-409.0.0-linux-x86_64.tar.gz

$> tar -xzf google-cloud-cli-409.0.0-linux-x86_64.tar.gz

$> mv ./google-cloud-sdk ~/.google-cloud-sdk

$> ~/.google-cloud-sdk/install.sh

Welcome to the Google cloud CLI!

#...

$> export PATH=$PATH:~/.google-cloud-sdk/bin

OKD Binaries

An OKD release provides the binaries openshift-install, oc, and kubectl. They are versioned for a specific OpenShift and Kubernetes version. The oc binary provides extended commands to interact with the cluster, including basic management tasks and the creation of Kubernetes resources. It is used for everything except the installation, which is done with openshift-install.

On the release page, select the version of your choice, then download the binaries that are compatible with your platform. When using Linux, run these commands:

mkdir ~/openshift

cd ~/openshift

export OKD_VERSION=4.11.0-0.okd-2022-11-05-030711

wget "https://github.com/okd-project/okd/releases/download/${OKD_VERSION}/openshift-client-linux-${OKD_VERSION}.tar.gz"

wget "https://github.com/okd-project/okd/releases/download/${OKD_VERSION}/openshift-install-linux-${OKD_VERSION}.tar.gz"

tar xvf "openshift-client-linux-${OKD_VERSION}.tar.gz"

tar xvf "openshift-install-linux-${OKD_VERSION}.tar.gz"

chmod +x oc

chmod +x kubectl

chmod +x openshift-install

export PATH=$PATH:~/openshift
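A quick way to confirm that the downloads ended up on the PATH is to check for each binary before continuing. A small sketch using the POSIX `command -v` builtin; `require_bins` is a hypothetical helper name. Once it passes, `openshift-install version` and `oc version --client` print the versions of the installer and the client.

```shell
#!/usr/bin/env bash
# Report every given command that cannot be found on the PATH.
# Returns 1 if at least one command is missing, 0 otherwise.
require_bins() {
  local missing=0 bin
  for bin in "$@"; do
    if ! command -v "$bin" >/dev/null 2>&1; then
      echo "missing: $bin"
      missing=1
    fi
  done
  return "$missing"
}

# Example:
#   require_bins gcloud oc kubectl openshift-install && openshift-install version
```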

Part 3: Google Resource Creation

Overview

For a new OpenShift cluster, we need to perform the following actions. Most of these can be done with the gcloud binary, and all of them can be done in the management console. I preferred to use the binary whenever possible (and whenever I had enough information about how to do it).

- Create a Google Cloud project and account

- Activate the OKD-required APIs:

  - Compute Engine API: compute.googleapis.com
  - Cloud Resource Manager API: cloudresourcemanager.googleapis.com
  - Google DNS API: dns.googleapis.com
  - IAM Service Account Credentials API: iamcredentials.googleapis.com
  - Identity and Access Management (IAM) API: iam.googleapis.com

- Create a service account

- Grant the following roles to the service account:

  - roles/compute.instanceAdmin
  - roles/compute.networkAdmin
  - roles/compute.securityAdmin
  - roles/storage.admin
  - roles/iam.serviceAccountUser
  - roles/compute.viewer

- Create a JSON-based access key for the service account

- Create a DNS zone, and configure its name servers at your domain registrar

Let’s detail these steps.

3.1 Google Cloud Project & Account Creation

We will create a new gcloud configuration and create the cloud project as well. All of these steps are done as an interactive dialog with the binary. In my case, the steps were these:

gcloud init

Welcome! This command will take you through the configuration of gcloud.

#..

Pick configuration to use:

[1] Re-initialize this configuration [default] with new settings

[2] Create a new configuration

Please enter your numeric choice: 2

Enter configuration name

> gke-openshift

Choose the account you would like to use to perform operations

> REDACTED

Pick cloud project to use:

[1] coral-marker-368316

[2] Enter a project ID

[3] Create a new project

> 3

Enter a Project ID. Note that a Project ID CANNOT be changed later.

Project IDs must be 6-30 characters (lowercase ASCII, digits, or

hyphens) in length and start with a lowercase letter.

> ocd-cluster

Do you want to configure a default Compute Region and Zone? (Y/n)? Y

Which Google Compute Engine zone would you like to use as project default?

If you do not specify a zone via a command line flag while working with

Compute Engine resources, the default is assumed.

# ...

[21] europe-west3-a

# ...

Please enter numeric choice or text value (must exactly match list item): 21

Your project default Compute Engine zone has been set to [europe-west3-a].

You can change it by running [gcloud config set compute/zone NAME].

Your project default Compute Engine region has been set to [europe-west3].

You can change it by running [gcloud config set compute/region NAME].

Your Google Cloud SDK is configured and ready to use!

The configuration and the project are now created and active.
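If you prefer to script this step, the same configuration can be created without the interactive dialog. A sketch assuming the names from my dialog above; `setup_project` is a hypothetical helper, and `GCLOUD=echo` only prints the commands. Note that a new project still has to be linked to a billing account before the Compute Engine API can be used.

```shell
#!/usr/bin/env bash
# Hypothetical, non-interactive counterpart of the "gcloud init" dialog above.
# Set GCLOUD=echo to print the commands instead of running them.
setup_project() {
  local config="$1" project="$2" region="$3" zone="$4"
  local gc="${GCLOUD:-gcloud}"
  "$gc" config configurations create "$config"   # new configurations are activated by default
  "$gc" projects create "$project"
  "$gc" config set project "$project"
  "$gc" config set compute/region "$region"
  "$gc" config set compute/zone "$zone"
}

# Example with the values from my dialog:
#   setup_project gke-openshift ocd-cluster europe-west3 europe-west3-a
```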

3.2 Activate Required APIs

The account needs access to several APIs to create the required cloud computing resources. I used a handy bash script from this article to enable them.

export CLOUDSDK_CORE_PROJECT='ocd-cluster'

while read -r service; do

gcloud services enable "$service"

done <<EOF

compute.googleapis.com

cloudapis.googleapis.com

cloudresourcemanager.googleapis.com

dns.googleapis.com

iamcredentials.googleapis.com

iam.googleapis.com

servicemanagement.googleapis.com

serviceusage.googleapis.com

storage-api.googleapis.com

storage-component.googleapis.com

EOF
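To verify that the loop actually enabled everything, you can compare the enabled services with the required ones. A sketch; `missing_services` is a hypothetical helper that reads the enabled list (one service name per line) from stdin and prints every required service that is absent:

```shell
#!/usr/bin/env bash
# Print each service given as argument that does not appear in the
# list of enabled services read from stdin (one name per line).
missing_services() {
  local enabled required
  enabled="$(cat)"
  for required in "$@"; do
    if ! grep -qxF "$required" <<<"$enabled"; then
      echo "$required"
    fi
  done
}

# Example:
#   gcloud services list --enabled --format="value(config.name)" \
#     | missing_services compute.googleapis.com dns.googleapis.com iam.googleapis.com
```

An empty output means all listed services are enabled.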

3.3 Service Account Creation and Permission Management

I did this step entirely in the Google Cloud console. Select the project, then click on + Create Service Account and fill in the details. In step 2, you can either grant the account exactly the access rights required by the OKD documentation, or give it the project Owner role, which includes all possible access rights. Because this is a trial installation, I used the latter option.

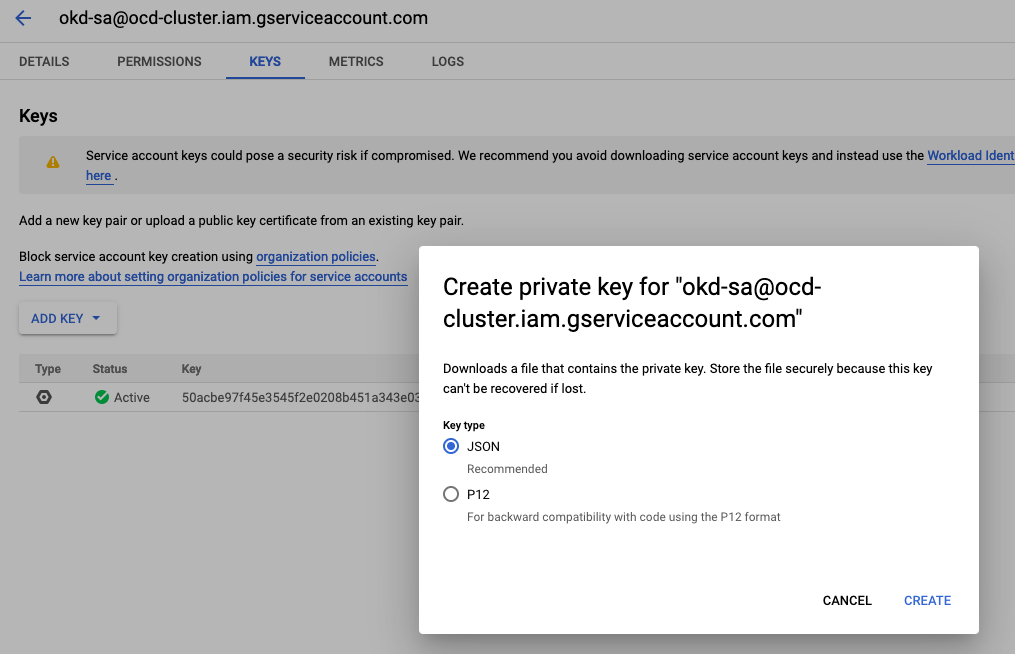
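The console steps can also be done with gcloud. The following sketch creates a service account and binds the roles from the overview list instead of the Owner role I used. `create_installer_sa` is a hypothetical helper, `okd-sa` is the account name that appears later in this article, and the OKD documentation remains the authority on the exact set of roles; set `GCLOUD=echo` to print the commands first.

```shell
#!/usr/bin/env bash
# Hypothetical gcloud alternative to the console steps: create the service
# account, then bind each role from the overview list to it.
# Set GCLOUD=echo to print the commands instead of running them.
create_installer_sa() {
  local project="$1" sa_name="$2"
  local gc="${GCLOUD:-gcloud}"
  local sa_email="${sa_name}@${project}.iam.gserviceaccount.com"
  local role

  "$gc" iam service-accounts create "$sa_name" \
    --project="$project" --display-name="OKD installer"

  for role in roles/compute.instanceAdmin roles/compute.networkAdmin \
              roles/compute.securityAdmin roles/storage.admin \
              roles/iam.serviceAccountUser roles/compute.viewer; do
    "$gc" projects add-iam-policy-binding "$project" \
      --member="serviceAccount:${sa_email}" --role="$role"
  done
}

# Example:
#   create_installer_sa ocd-cluster okd-sa
```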

3.4 Create a Service Account Access Key

Once the account is created, click on the three dots in the overview and choose to create a JSON key file, as shown in the following screenshot. This file is essential! To the best of my knowledge, the OKD binaries do not work with any other authorization scheme (and this was the reason why I abandoned Terraform for the installation).
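gcloud also offers `gcloud iam service-accounts keys create`, which writes a JSON key file for a service account from the command line. A sketch under these assumptions: `create_sa_key` and `is_sa_key` are hypothetical helpers, the default path is the location the installer reads in Part 4, and `is_sa_key` is only a rough plausibility check for the `"type": "service_account"` and `"private_key"` fields, not a validation of the key itself. Keep the resulting file private.

```shell
#!/usr/bin/env bash
# Create a JSON key for the service account, by default at the location the
# OKD installer reads. Set GCLOUD=echo to print the command instead.
create_sa_key() {
  local sa_email="$1" key_file="${2:-$HOME/.gcp/osServiceAccount.json}"
  mkdir -p "$(dirname "$key_file")"
  "${GCLOUD:-gcloud}" iam service-accounts keys create "$key_file" \
    --iam-account="$sa_email"
}

# Rough check that a file looks like a service account JSON key.
is_sa_key() {
  grep -q '"type": *"service_account"' "$1" && grep -q '"private_key"' "$1"
}

# Example:
#   create_sa_key okd-sa@ocd-cluster.iam.gserviceaccount.com && is_sa_key ~/.gcp/osServiceAccount.json
```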

3.5 DNS Zone Creation

The DNS zone can be created with the gcloud binary too.

gcloud dns managed-zones create okd-dns \

--description='k3s.admantium.com' \

--dns-name='k3s.admantium.com' \

--visibility=public

Then, take a look at this object’s configuration, and enter the name server information at your domain registrar.

gcloud dns managed-zones describe okd-dns


cloudLoggingConfig:

kind: dns#managedZoneCloudLoggingConfig

creationTime: '2022-11-20T12:18:00.683Z'

description: k3s.admantium.com

dnsName: k3s.admantium.com.

id: '7629478534122787776'

kind: dns#managedZone

name: okd-dns

nameServers:

- ns-cloud-e1.googledomains.com.

- ns-cloud-e2.googledomains.com.

- ns-cloud-e3.googledomains.com.

- ns-cloud-e4.googledomains.com.

visibility: public
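Delegation at the registrar can take a while to propagate. To check whether it is in place, compare the zone's name servers with the publicly visible NS records. A sketch; `check_delegation` is a hypothetical helper, `GCLOUD` and `DIG` can be overridden for a dry run, and I assume gcloud's `value()` format joins list entries with semicolons (the `tr` call also copes with one entry per line):

```shell
#!/usr/bin/env bash
# Compare the name servers of a Cloud DNS zone with the NS records that are
# publicly visible for the domain. Prints "delegated" when both sets match.
check_delegation() {
  local zone="$1" domain="$2"
  local expected actual
  expected="$("${GCLOUD:-gcloud}" dns managed-zones describe "$zone" \
    --format="value(nameServers)" | tr ';' '\n' | sed '/^$/d' | sort)"
  actual="$("${DIG:-dig}" +short NS "$domain" | sed '/^$/d' | sort)"
  if [ "$expected" = "$actual" ]; then
    echo "delegated"
  else
    echo "not delegated yet"
    return 1
  fi
}

# Example:
#   check_delegation okd-dns k3s.admantium.com
```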

Part 4: Cluster Creation

When all Google resources are created, we can continue with the cluster creation.

The OKD installer expects the service account key file at a very specific location. Copy the JSON key there:

mkdir ~/.gcp

cp ocd-cluster-46f2d60f5bcf.json ~/.gcp/osServiceAccount.json

Then, to make sure that all other gcloud commands you issue from here on use the same account, activate it:

gcloud auth activate-service-account --key-file ocd-cluster-46f2d60f5bcf.json

Activated service account credentials for: [okd-sa@ocd-cluster.iam.gserviceaccount.com]

You can deploy the cluster directly, or first create the manifest files and configure them. I chose the latter.

export GKE_PROJECT_DIR=~/openshift/gke-cluster

./openshift-install create manifests --dir=$GKE_PROJECT_DIR

? SSH Public Key <none>

? Platform gcp

INFO Credentials loaded from file "~/.gcp/osServiceAccount.json"

? Project ID ocd-cluster (ocd-cluster)

? Region europe-west4

? Base Domain k8s.admantium.com

? Cluster Name ocd-cluster

? Pull Secret [? for help] *****

X Sorry, your reply was invalid: json: cannot unmarshal number into Go value of type validate.imagePullSecret

? Pull Secret [? for help] **********************************

INFO Manifests created in: ocd-cluster-config/manifests and ocd-cluster-config/openshift

Several of these configuration files can be edited; check the official documentation for the details.

ocd-cluster-config

├── manifests

│ ├── cloud-controller-uid-config.yml

│ ├── cloud-provider-config.yaml

│ ├── cluster-config.yaml

│ ├── cluster-dns-02-config.yml

│ ├── cluster-infrastructure-02-config.yml

│ ├── cluster-ingress-02-config.yml

│ ├── cluster-network-01-crd.yml

│ ├── cluster-network-02-config.yml

│ ├── cluster-proxy-01-config.yaml

│ ├── cluster-scheduler-02-config.yml

│ ├── cvo-overrides.yaml

│ ├── kube-cloud-config.yaml

│ ├── kube-system-configmap-root-ca.yaml

│ ├── machine-config-server-tls-secret.yaml

│ └── openshift-config-secret-pull-secret.yaml

└── openshift

├── ....
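If you want to repeat the installation without answering the prompts again, the answers can be written to an install-config.yaml in the installation directory before running `create manifests`. A minimal sketch with the values from my prompts; `write_install_config` is a hypothetical helper, and only the basic GCP fields are set. The pull secret `{"auths":{"fake":{"auth":"aWQ6cGFzcwo="}}}` is a placeholder often suggested for OKD when you have no Red Hat pull secret; treat it as an assumption and check the OKD documentation. The installer consumes install-config.yaml when it generates the manifests, hence the backup copy.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a minimal install-config.yaml so that
# "openshift-install create manifests" does not ask interactively.
# Values are the ones from my prompts above; adjust them to your setup.
write_install_config() {
  local dir="$1" pull_secret="$2" ssh_key="$3"
  mkdir -p "$dir"
  cat > "$dir/install-config.yaml" <<EOF
apiVersion: v1
baseDomain: k8s.admantium.com
metadata:
  name: ocd-cluster
platform:
  gcp:
    projectID: ocd-cluster
    region: europe-west4
pullSecret: '${pull_secret}'
sshKey: '${ssh_key}'
EOF
  # The installer consumes install-config.yaml, so keep a copy for later runs.
  cp "$dir/install-config.yaml" "$dir/install-config.yaml.bak"
}

# Example:
#   write_install_config "$GKE_PROJECT_DIR" \
#     '{"auths":{"fake":{"auth":"aWQ6cGFzcwo="}}}' "$(cat ~/.ssh/eksctl_rsa.pub)"
#   openshift-install create manifests --dir="$GKE_PROJECT_DIR"
```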

Afterwards we start the installation:

./openshift-install create cluster \

--dir "${GKE_PROJECT_DIR}" \

--log-level=info

The installation can take around 40 minutes. Several log messages inform you about the progress:

openshift-install create cluster --dir ocd-cluster-config

? SSH Public Key ~/.ssh/eksctl_rsa.pub

? Platform gcp

INFO Credentials loaded from file "~/.gcp/osServiceAccount.json"

? Project ID ocd-cluster (ocd-cluster)

? Region europe-west4

? Base Domain k8s.admantium.com

? Cluster Name cde-cluster

? Pull Secret [? for help] ******************************************

WARNING the following optional services are not enabled in this project: deploymentmanager.googleapis.com

WARNING Following quotas compute.googleapis.com/cpus (europe-west4) are available but will be completely used pretty soon.

INFO Creating infrastructure resources...

INFO Waiting up to 20m0s (until 1:38PM) for the Kubernetes API at https://api.cde-cluster.k8s.admantium.com:6443...

INFO API v1.24.0-2566+5157800f2a3bc3-dirty up

INFO Waiting up to 30m0s (until 1:51PM) for bootstrapping to complete...

W1119 13:33:30.858853 85275 reflector.go:324] k8s.io/client-go/tools/watch/informerwatcher.go:146: failed to list *v1.ConfigMap: Get "https://api.cde-cluster.k8s.admantium.com:6443/api/v1/namespaces/kube-system/configmaps?fieldSelector=metadata.name%3Dbootstrap&resourceVersion=12196": dial tcp 34.147.22.124:6443: connect: connection refused

E1119 13:33:30.859777 85275 reflector.go:138] k8s.io/client-go/tools/watch/informerwatcher.go:146: Failed to watch *v1.ConfigMap: failed to list *v1.ConfigMap: Get "https://api.cde-cluster.k8s.admantium.com:6443/api/v1/namespaces/kube-system/configmaps?fieldSelector=metadata.name%3Dbootstrap&resourceVersion=12196": dial tcp 34.147.22.124:6443: connect: connection refused

W1119 13:33:33.176373 85275 reflector.go:324] k8s.io/client-go/tools/watch/informerwatcher.go:146: failed to list *v1.ConfigMap: Get "https://api.cde-cluster.k8s.admantium.com:6443/api/v1/namespaces/kube-system/configmaps?fieldSelector=metadata.name%3Dbootstrap&resourceVersion=12196": dial tcp 34.147.22.124:6443: connect: connection refused

E1119 13:33:33.176464 85275 reflector.go:138] k8s.io/client-go/tools/watch/informerwatcher.go:146: Failed to watch *v1.ConfigMap: failed to list *v1.ConfigMap: Get "https://api.cde-cluster.k8s.admantium.com:6443/api/v1/namespaces/kube-system/configmaps?fieldSelector=metadata.name%3Dbootstrap&resourceVersion=12196": dial tcp 34.147.22.124:6443: connect: connection refused

INFO Destroying the bootstrap resources...

INFO Waiting up to 40m0s (until 2:21PM) for the cluster at https://api.cde-cluster.k8s.admantium.com:6443 to initialize...

INFO Waiting up to 10m0s (until 2:03PM) for the openshift-console route to be created...

INFO Install complete!

INFO To access the cluster as the system:admin user when using 'oc', run

INFO export KUBECONFIG=/Users/work/terraform/openshift/ocd-cluster-config/auth/kubeconfig

INFO Access the OpenShift web-console here: https://console-openshift-console.apps.cde-cluster.k8s.admantium.com

INFO Login to the console with user: "REDACTED

INFO Time elapsed: 40m20s
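The installer also writes its log to `.openshift_install.log` in the installation directory, and `openshift-install wait-for install-complete --dir <dir>` resumes waiting if the terminal session was interrupted. A small sketch to check whether a directory holds a finished installation; `install_finished` is a hypothetical helper that only greps for the final log message shown above:

```shell
#!/usr/bin/env bash
# Return 0 if the installer log in the given directory already contains
# the final "Install complete!" message, 1 otherwise (also if no log exists).
install_finished() {
  local dir="$1"
  grep -q 'Install complete!' "$dir/.openshift_install.log" 2>/dev/null
}

# Example:
#   install_finished "$GKE_PROJECT_DIR" || openshift-install wait-for install-complete --dir "$GKE_PROJECT_DIR"
```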

Part 5: Cluster Access

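When the installation is complete, the installer has written a kubeconfig file to the auth subdirectory of the installation directory. Export the KUBECONFIG path printed at the end of the installation, then use it to explore the cluster: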

kubectl get nodes

NAME STATUS ROLES AGE VERSION

cde-cluster-wcx99-master-0 Ready master 36m v1.24.6+5157800

cde-cluster-wcx99-master-1 Ready master 36m v1.24.6+5157800

cde-cluster-wcx99-master-2 Ready master 37m v1.24.6+5157800

cde-cluster-wcx99-worker-a-h4vd2 Ready worker 17m v1.24.6+5157800

cde-cluster-wcx99-worker-b-4d9qc Ready worker 18m v1.24.6+5157800

cde-cluster-wcx99-worker-c-tkd95 Ready worker 19m v1.24.6+5157800
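With a larger cluster, a summary is easier to read than the raw table. A sketch that counts the Ready nodes per role in the `kubectl get nodes` output; `ready_nodes_by_role` is a hypothetical helper, and it assumes the default column layout shown above (NAME, STATUS, ROLES, ...). For the six nodes above it prints `master 3` and `worker 3`. `oc get clusteroperators` is a useful companion check for the state of the OpenShift operators.

```shell
#!/usr/bin/env bash
# Count Ready nodes per role in "kubectl get nodes" output read from stdin.
# Skips the header line; column 2 is STATUS and column 3 is ROLES.
ready_nodes_by_role() {
  awk 'NR > 1 && $2 == "Ready" { count[$3]++ }
       END { for (role in count) print role, count[role] }' | sort
}

# Example:
#   kubectl get nodes | ready_nodes_by_role
```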

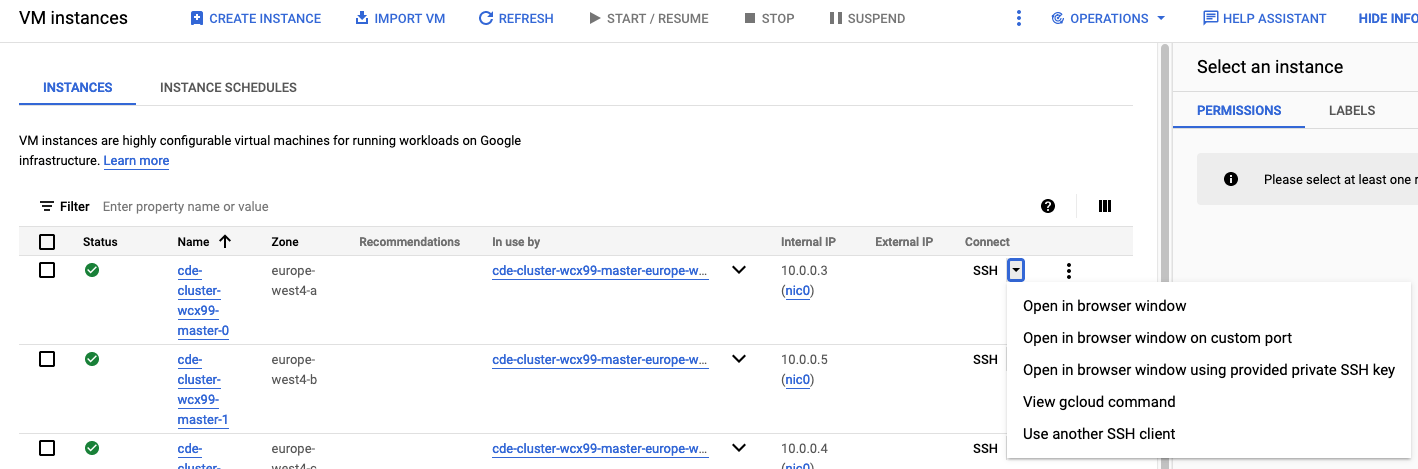

To access the nodes, you can use the Google Cloud management console to grab a gcloud command (among other options):

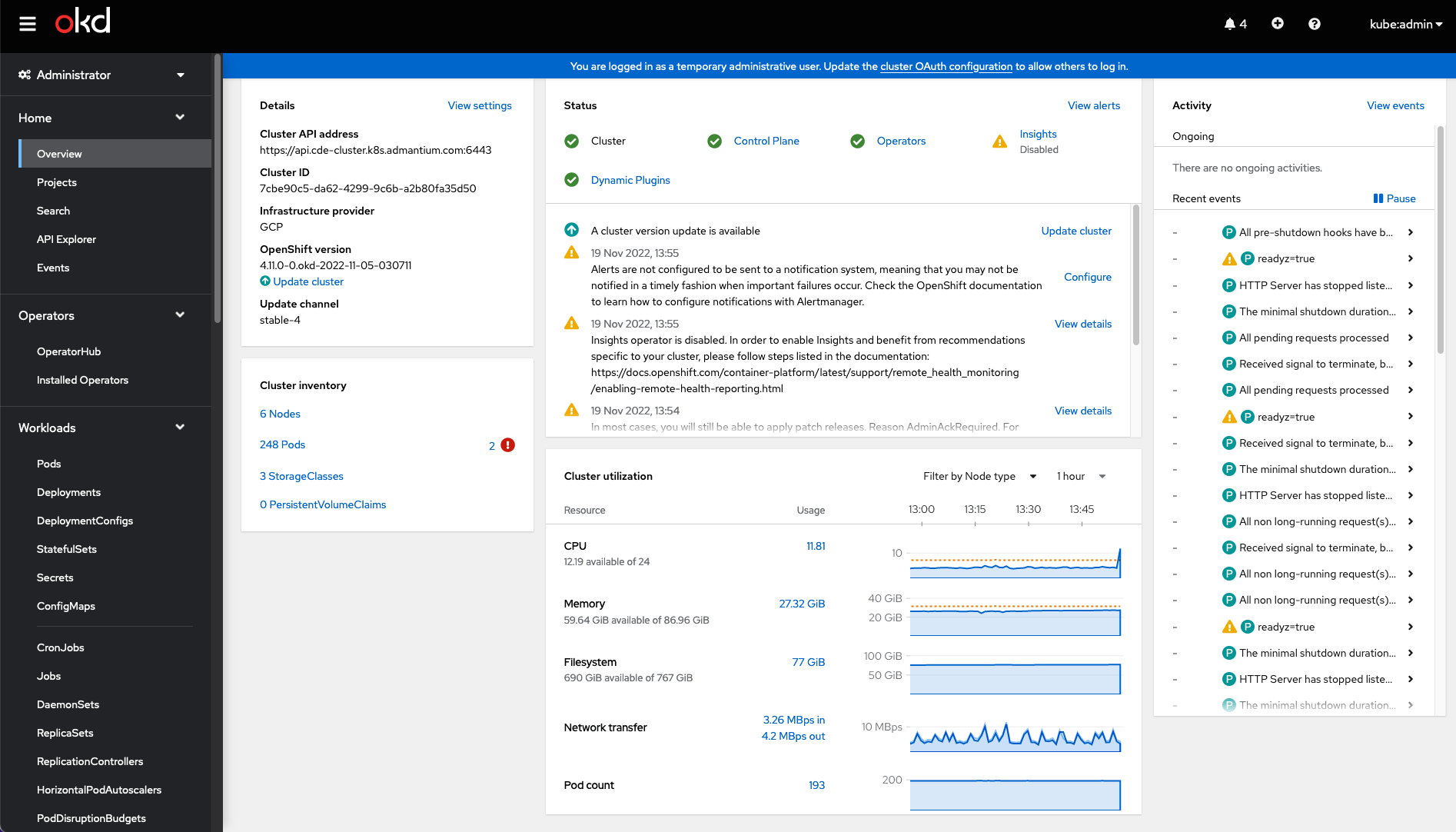

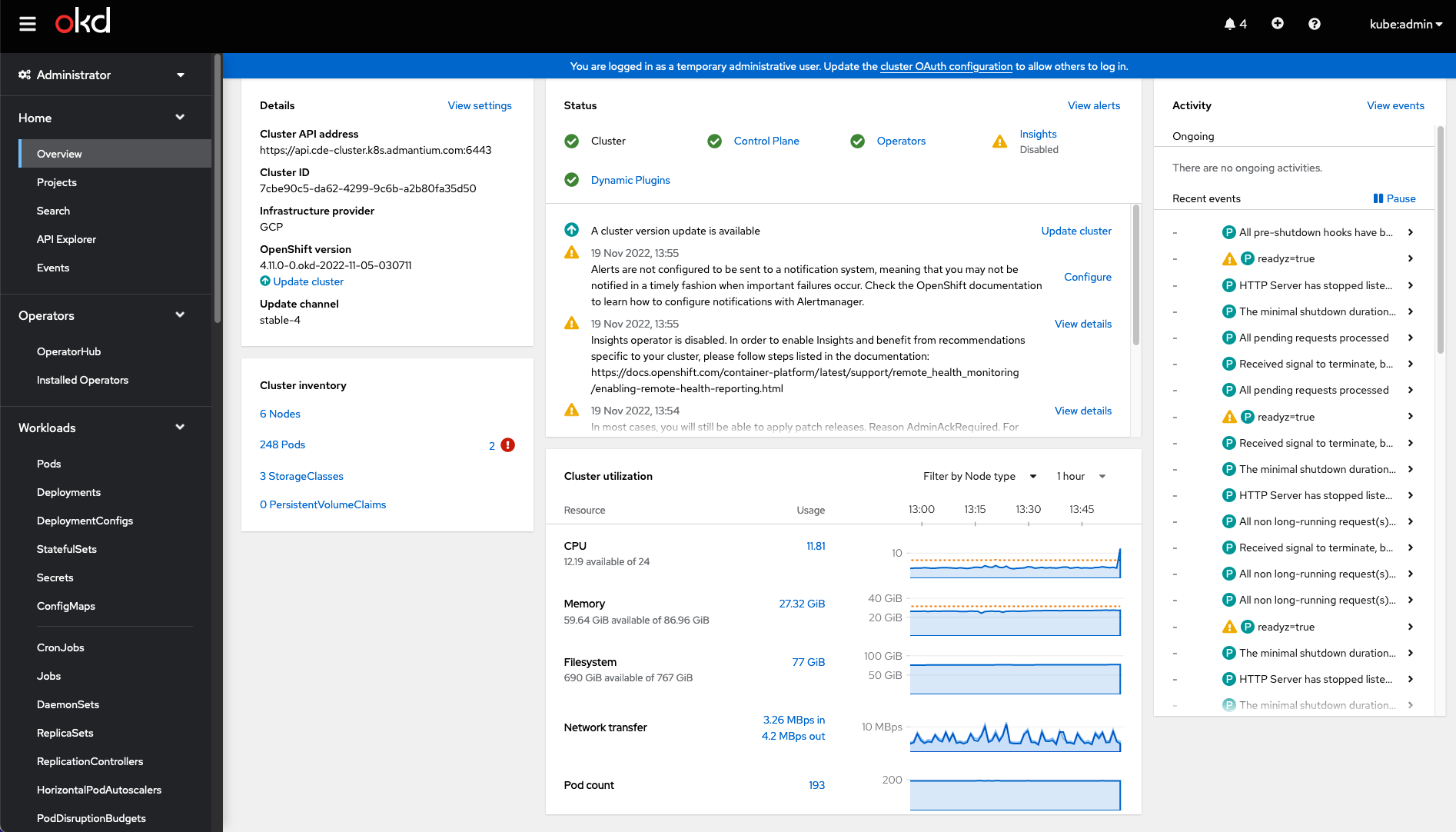
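Besides SSH, OpenShift offers `oc debug node/<name>`, which starts a privileged debug pod on the node with the host file system mounted at `/host`; this works without distributing SSH keys. A sketch; `run_on_node` is a hypothetical wrapper, and `OC=echo` prints the command instead of running it:

```shell
#!/usr/bin/env bash
# Run a command on a cluster node via "oc debug"; the debug pod mounts the
# host file system at /host, so chroot /host runs the command on the node.
# Set OC=echo to print the command instead of running it.
run_on_node() {
  local node="$1"; shift
  "${OC:-oc}" debug "node/${node}" -- chroot /host "$@"
}

# Example:
#   run_on_node cde-cluster-wcx99-worker-a-h4vd2 uname -r
```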

OpenShift also provides a handy web console, available at the URL printed at the end of the installation.

Finally, you can also delete the cluster completely:

openshift-install destroy cluster --dir ocd-cluster-config

INFO Credentials loaded from file "~/.gcp/osServiceAccount.json"

INFO Stopped instance cde-cluster-wcx99-worker-a-h4vd2

#...

INFO Deleted IAM project role bindings

INFO Deleted service account projects/ocd-cluster/serviceAccounts/cde-cluster--openshift-c-9z6n2@ocd-cluster.iam.gserviceaccount.com

#...

INFO Deleted disk cde-cluster-wcx99-worker-b-4d9qc

#...

INFO Deleted instance group cde-cluster-wcx99-master-europe-west4-b

INFO Time elapsed: 5m8s
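Note that the destroy command removes only the resources the installer created. The DNS zone, the service account, and the project from Part 3 remain and have to be removed separately. A sketch with the names used in this article; `cleanup_manual_resources` is a hypothetical helper, gcloud asks for confirmation before each deletion, and deleting the zone only succeeds once it contains no records other than the default NS and SOA records. Set `GCLOUD=echo` to print the commands first.

```shell
#!/usr/bin/env bash
# Hypothetical cleanup of the resources created by hand in Part 3, which
# "openshift-install destroy cluster" does not remove.
# Set GCLOUD=echo to print the commands instead of running them.
cleanup_manual_resources() {
  local project="$1" zone="$2" sa_email="$3"
  local gc="${GCLOUD:-gcloud}"
  "$gc" dns managed-zones delete "$zone" --project="$project"
  "$gc" iam service-accounts delete "$sa_email" --project="$project"
  # Deleting the project shuts down everything that is still left in it.
  "$gc" projects delete "$project"
}

# Example:
#   cleanup_manual_resources ocd-cluster okd-dns okd-sa@ocd-cluster.iam.gserviceaccount.com
```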

Part 6: OpenShift Cluster Internals

I was curious to find out which Kubernetes components the cluster uses. Running kubectl get all -A revealed these facts:

- Linux distribution: Fedora CoreOS

- Container runtime: CRI-O

- Control Plane Storage: openshift-etcd

- Logging / Metrics: node-exporter, prometheus, openshift-state-metrics

- Network communication: openshift-dns, openshift-multus (a CNI plugin that loads other plugins), ovnkube-node

- Storage: gcp-pd-csi-driver-controller

- Ingress: openshift-ingress

- Misc: openshift-cluster-node-tuning-operator (tuning kernel parameters of the VMs), openshift-image-registry (container registry), cluster-autoscaler-operator (autoscaling options)
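The node status reported by the kubelet names the operating system image and the container runtime directly, which is a more reliable source for the first two items than the pod list. A sketch; `node_runtime_info` is a hypothetical helper, and `KUBECTL` can be overridden for a dry run:

```shell
#!/usr/bin/env bash
# Print name, OS image, and container runtime of every node, one tab-separated
# line per node, using kubectl's JSONPath output.
node_runtime_info() {
  "${KUBECTL:-kubectl}" get nodes -o \
    jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.nodeInfo.osImage}{"\t"}{.status.nodeInfo.containerRuntimeVersion}{"\n"}{end}'
}

# Example:
#   node_runtime_info
```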

Conclusion

OpenShift provides a Kubernetes distribution that runs on several VM-based infrastructures and cloud environments, with an enterprise-level cluster architecture for deploying production workloads. In this article, you learned step by step how to install an OpenShift cluster on Google Cloud: a) download the gcloud and OpenShift binaries, b) configure a Google Cloud project and account, c) enable the required APIs for this account, d) create a service account with the correct permissions, e) draft and edit a cluster configuration, f) create the cluster. You also learned how to access the cluster via kubectl and SSH, and saw which Kubernetes components an OpenShift cluster provides.